Chapter 31: Performance

This chapter is concerned with performance, that is, the time taken to perform a computation, and how to improve it.There is one golden rule for achieving good performance in a J program. The rule is to try to apply verbs to as much data as possible at any one time. In other words, try to give to a verb arguments which are not scalars but vectors or, in general, arrays, so as to take maximum advantage of the fact that the built-in functions can take array arguments.

The rest of this chapter consists mostly of harping on this single point.

31.1 Measuring the Time Taken

There is a built-in verb 6!:2 . It takes as argument an expression (as a string) and returns the time (in seconds) to execute the expression. For example, given :mat =: ? 20 20 $ 100x NB. a random matrixThe time in seconds to invert the matrix is given by:

6!:2 '%. mat' 1.92122If we time the same expression again, we see:

6!:2 '%. mat' 1.82563Evidently there is some uncertainty in this measurement. Averaging over several measurements is offered by the dyadic case of 6!:2 . However, for present purposes we will use monadic 6!:2 to give a rough and ready but adequate measurement.

31.2 The Performance Monitor

As well as 6!:2, there is another useful instrument for measuring execution times, called the Performance Monitor. It shows how much time is spent in each line of, say, an explicit verb.Here is an example with a main program and an auxiliary function. We are not interested in what it does, only in how it spends its time doing it..

main =: 3 : 0

m =. ? 10 10 $ 100x NB. random matrix

u =. =/ ~ i. 10 NB. unit matrix

t =. matinv m NB. inverted

p =. m +/ . * t

'OK'

)

matinv =: 3 : 0

assert. 2 = # $ y NB. check y is square

assert. =/ $ y

%. y

)

We start the monitor:

load 'jpm' start_jpm_ '' 357142and then enter the expression to be analyzed

main 0 NB. expression to be analyzed OKTo view the reports available: firstly , the main function:

showdetail_jpm_ 'main' NB. display measurements Time (seconds) +--------+--------+---+-----------------+ |all |here |rep|main | +--------+--------+---+-----------------+ |0.000007|0.000007|1 |monad | |0.000132|0.000132|1 |[0] m=.?10 10$100| |0.000019|0.000019|1 |[1] u=.=/~i.10 | |0.082856|0.000010|1 |[2] t=.matinv m | |0.123787|0.123787|1 |[3] p=.m+/ .*t | |0.000034|0.000034|1 |[4] 'OK' | |0.206836|0.123989|1 |total monad | +--------+--------+---+-----------------+and we may wish to look at the auxiliary function:

showdetail_jpm_ 'matinv' Time (seconds) +--------+--------+---+-----------------+ |all |here |rep|matinv | +--------+--------+---+-----------------+ |0.000006|0.000006|1 |monad | |0.000008|0.000008|1 |[0] assert. 2=#$y| |0.000039|0.000039|1 |[1] assert. =/$y | |0.082794|0.082794|1 |[2] %.y | |0.082846|0.082846|1 |total monad | +--------+--------+---+-----------------+Evidently, main spends most of its time executing lines 2 and 3 . Notice that the time under "all" of line 2 is near enough equal to the time for line 2 "here", (that is, in main ) plus the time for "total" of matinv

31.3 The Golden Rule: Example 1

Here is an example of a function which is clearly intended to take a scalar argument.

collatz =: 3 : 'if. odd y do. 1 + 3 * y else. halve y end.'

odd =: 2 & |

halve =: -:

With a vector agument it gives the wrong results

collatz 2 3 4 5 6 7 8 9 1 1.5 2 2.5 3 3.5 4 4.5So we need to specify the rank to force the argument to be scalar

(collatz "0) 2 3 4 5 6 7 8 9 1 10 2 16 3 22 4 28This is an opportunity for the Golden Rule, so here is a version designed for a vector argument:

veco =: 3 : '(c*1+3*y) + (halve y) * (1-c =. odd y)'The results are the same:

data =: 1 + i. 10000 (collatz"0 data) -: (veco data) 1but the vector version is about a hundred times faster:

t1 =: 6!:2 e1 =: 'collatz"0 data ' t2 =: 6!:2 e2 =: 'veco data ' 2 2 $ e1 ; t1; e2;t2 +---------------+-----------+ |collatz"0 data |0.0647517 | +---------------+-----------+ |veco data |0.000656927| +---------------+-----------+

31.4 Golden Rule Example 2: Conway's "Life"

J. H. Conway's celebrated "Game of Life" needs no introduction. There is a version in J at Rosetta Code, reproduced here:

pad=: 0,0,~0,.0,.~]

life=: (_3 _3 (+/ e. 3+0,4&{)@,;._3 ])@pad

To provide a starting pattern, here is a function rp which generates an r-pentomino

in a y-by-y boolean matrix.

rp =: 3 : '4 4 |. 1 (0 1; 0 2; 1 0; 1 1; 2 1) } (y,y) $ 0' ] M =: rp 8 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 1 1 0 0 0 0 0 1 1 0 0 0 0 0 0 0 1 0 0 0 0 0 0 0 0 0 0 life M 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 1 1 1 0 0 0 0 0 1 0 0 0 0 0 0 0 1 1 0 0 0 0 0 0 0 0 0 0We notice that the life verb contains ;._3 - it computes the count of neighbours of each cell separately, by working on the 3-by-3 neighbourhood of that cell.

By contrast here is a version which computes all the neighbours-counts at once, by shifting the whole plane to align each cell with its neighbours.

sh =: |. !. 0 E =: 0 _1 & sh W =: 0 1 & sh N =: 1 & sh S =: _1 & sh NS =: N + S EW =: E + W NeCo =: NS + (+ NS) @: EW NB. neighbour-count evol =: ((3 = ]) +. ([ *. 2 = ])) NeCoThe last line expresses the condition that (neighbour-count is 3) or ("alive" and count is 2). The shifting method evol, and the Rosetta method life give the same result

(life M) -: (evol M) 1However, the shifting method is faster:

G =: rp 200 NB. a 200-by-200 grid

t3 =: 6!:2 e3 =: 'r3 =: life ^: 100 G' NB. 100 iterations, Rosetta

t4 =: 6!:2 e4 =: 'r4 =: evol ^: 100 G' NB. and shifting method

2 2 $ e3;t3;e4;t4

+-------------------+---------+

|r3 =: life ^: 100 G|14.7024 |

+-------------------+---------+

|r4 =: evol ^: 100 G|0.0960126|

+-------------------+---------+

Checking for correctness again:

r3 -: r4 1

31.5 Golden Rule Example 3: Join of Relations

31.5.1 Preliminaries

Recall from Chapter 18 the author-title and title-subject relations. We will need test-data in the form of these relations in various sizes. It is useful to define a verb to generate test-data from random integers. (Integers are adequate as substitutes for symbols for present purposes.) The argument y is the number of different titles required.

maketestdata =: 3 : 0

T =. i. y NB. titles domain

A =. i. <. 4 * y % 5 NB. authors domain

S =. i. <. y % 2 NB. subjects domain

AT =. (? (#T) $ # A) ,. (? (#T) $ #T) NB. AT relation

TS =. (? (#T) $ # T) ,. (? (#T) $ #S) NB. TS relation

AT;TS

)

'AT1 TS1' =: maketestdata 8 NB. small test-data

'AT2 TS2' =: maketestdata 1000 NB. medium

'AT3 TS3' =: maketestdata 10000 NB. large

31.5.2 First Method

Recall also from Chapter 18 a verb for the join of relations, which we will take as a starting-point for further comparisons. We can call this the "first method".

VPAIR =: 2 : 0

:

z =. 0 0 $ ''

for_at. x do. z=.z , |: v (#~"1 u) |: at , "1 y end.

~. z

)

first =: (1&{ = 2&{) VPAIR (0 3 & {)

| AT1 | TS1 | AT1 first TS1 |

| 3 4 1 6 0 1 5 5 2 1 2 1 5 4 2 7 |

4 2 5 0 5 0 2 3 2 2 5 1 6 2 2 1 |

3 2 1 2 5 0 5 1 5 2 |

31.5.3 Second Method: Boolean Matrix

Here is another method. It computes a boolean matrix of equality on the titles. Row i column j is true where the title in i{AT equals the title in j{TS . The authors and titles are recovered by by converting the boolean matrix to sparse representation, then taking its index-matrix.

second =: 4 : 0

'a t' =. |: x

'tt s' =. |: y

bm =. t =/ tt NB. boolean matrix of matches

sm =. $. bm NB. convert to sparse

im =: 4 $. sm NB. index-matrix

'i j' =. |: im

(i { a),. (j { s)

)

Now to check the second method for correctness, that is,

giving the same results

as the first. We don't care about ordering, and we don't care about

repetitions, so

let us say that two relations are the same

iff their sorted nubs match.

same =: 4 : '(~. x /: x) -: (~. y /: y) ' (AT2 second TS2) same (AT2 first TS2) 1Now for some times

t1 =: 6!:2 'AT2 first TS2' t2 =: 6!:2 'AT2 second AT2' t3 =: 6!:2 'AT3 first TS3' t4 =: 6!:2 'AT3 second TS3' 3 3 $ ' '; (#AT2) ; (#AT3) ; 'first' ; t1; t3 ; 'second' ; t2; t4 +------+----------+--------+ | |1000 |10000 | +------+----------+--------+ |first |0.070905 |5.9352 | +------+----------+--------+ |second|0.00358509|0.398459| +------+----------+--------+We see that the advantage of the second method is reduced at the larger size, and we can guess this is because the time to compute the boolean matrix is quadratic in the size. We can use the performance monitor to see where the time goes.

require 'jpm'

start_jpm_ ''

357142

z =: AT3 second TS3

showdetail_jpm_ 'second' NB. display measurements

Time (seconds)

+--------+--------+---+----------------+

|all |here |rep|second |

+--------+--------+---+----------------+

|0.000007|0.000007|1 |dyad |

|0.000211|0.000211|1 |[0] 'a t'=.|:x |

|0.000215|0.000215|1 |[1] 'tt s'=.|:y |

|0.242814|0.242814|1 |[2] bm=.t=/tt |

|0.139426|0.139426|1 |[3] sm=.$.bm |

|0.000013|0.000013|1 |[4] im=:4$.sm |

|0.000187|0.000187|1 |[5] 'i j'=.|:im |

|0.009809|0.009809|1 |[6] (i{a),.(j{s)|

|0.392682|0.392682|1 |total dyad |

+--------+--------+---+----------------+

Evidently much of the time went into computing the boolean matrix at line 2.

Can we do better than this?

31.5.4 Third method: boolean matrix with recursive splitting

Here is an attempt to avoid the quadratic time. If the argument is smaller than a certain size, we use the second method above (which is quadratic, but not so bad for smaller arguments).If the argument is larger than a certain size, we split it into two smaller parts, so that there are no titles shared between the two. Then the method is applied recursively to the parts.

By experimenting, the "certain size" appears to be about 256 on my computer.

third =: 4 : 0

if. 0 = # x do. return. 0 2 $ 3 end.

if. 0 = # y do. return. 0 2 $ 3 end.

'a t' =. |: x

'tt s' =. |: y

if. 256 > # x do.

bm =. t =/ tt NB. boolean matrix of matches

sm =. $. bm

im =. 4 $. sm NB. index-matrix

'i j' =. |: im

(i { a),. (j { s)

else.

p =: <. -: (>./t) + (<./t) NB. choose "pivot" title

pv =: t <: p

x1 =. pv # x

x2 =. (-. pv) # x

assert. (#x1) < (#x)

assert. (#x2) < (#x)

qv =. tt <: p

y1 =. qv # y

y2 =. (-. qv) # y

assert. (#y1) < (#y)

assert. (#y2) < (#y)

(x1 third y1) , (x2 third y2)

end.

)

Check correctness :

(AT2 third TS2) same (AT2 second TS2) 1And timings. Experiment on my computer shows the second method will run out of space where the third method will succeed.

'AT4 TS4' =: maketestdata 30000 'AT5 TS5' =: maketestdata 100000 t4a =: 6!:2 'AT4 second TS4' t5 =: 6!:2 'AT2 third TS2' t6 =: 6!:2 'AT3 third TS3' t7 =: 6!:2 'AT4 third TS4' t8 =: 6!:2 'AT5 third TS5' a =: ' '; (#AT2); (#AT3) ; (#AT4); (#AT5) b =: 'second'; t2; t4; t4a; 'limit error' c =: 'third' ; t5; t6; t7 ; t8 3 5 $a,b,c +------+----------+---------+---------+-----------+ | |1000 |10000 |30000 |100000 | +------+----------+---------+---------+-----------+ |second|0.00358509|0.398459 |3.59096 |limit error| +------+----------+---------+---------+-----------+ |third |0.00167228|0.0199273|0.0624012|0.232973 | +------+----------+---------+---------+-----------+In conclusion, the third method is clearly superior but considerably more complex.

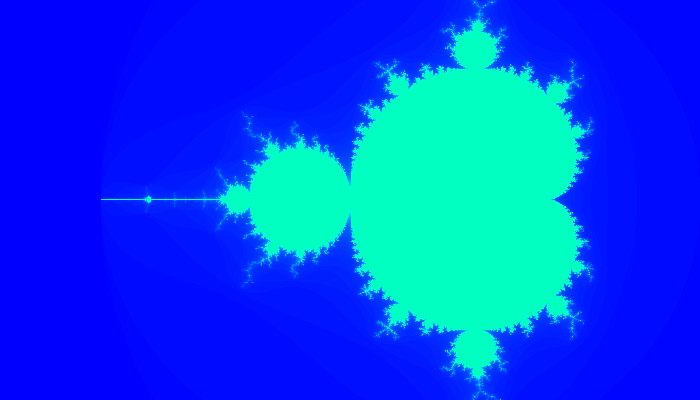

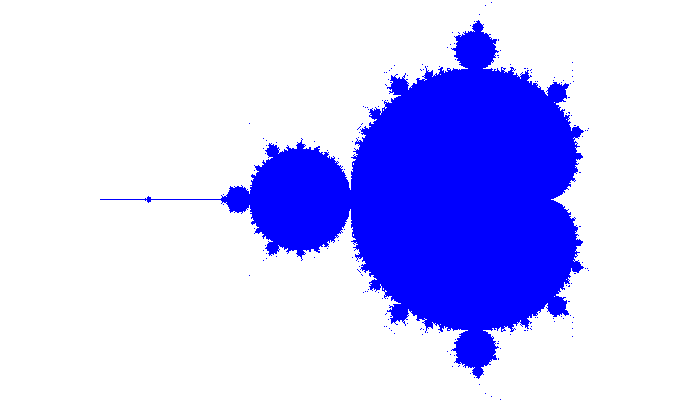

31.6 Golden Rule Example 4: Mandelbrot Set

The Mandelbrot Set is a fractal image needing much computation to produce. In writing the following, I have found to be helpful both the Wikipedia article and the Rosetta Code treatment for the Mandelbrot Set in J:Computation of the image requires, for every pixel in the image, iteration of a single scalar function until a condition is satisfied. Different pixels will require different numbers of iterations. The final result is the array of counts of iterations for each pixel. Hence it may appear that the Mandelbrot Set is an inescapably scalar computation. It is not, as the following is meant to show. The Golden Rule applies.

31.6.1 Scalar Versions

The construction of an image begins with choosing a grid of points on the complex plane, one for each pixel in the image. Here is a verb for conveniently constructing the grid.

makegrid =: 3 : 0

'LL UR delta' =. y

'xmin ymin' =. +. LL

'xmax ymax' =. +. UR

xn =. <. (xmax-xmin) % delta

yn =. <. (ymax-ymin) % delta

(|.(ymin + delta * i. yn)) (j. ~/) (xmin + delta * i. xn)

)

The arguments are the complex numbers for

lower-left and upper-right corners of the image, and a value for the spacing of the points.

To demonstrate with a tiny grid:

makegrid _2j1 4j4 1.0

_2j3 _1j3 0j3 1j3 2j3 3j3

_2j2 _1j2 0j2 1j2 2j2 3j2

_2j1 _1j1 0j1 1j1 2j1 3j1

For an image, a list of arguments more suitable for present purposes is shown by :

GRID =: makegrid _2.5j_1 1j1 0.005The image is computed by applying a Mandelbrot function to each pixel in the grid. Here is a suitable Mandelbrot function. It follows the design outlined in the Wikipedia article,

mfn1 =: 3 : 0

NB. y is one pixel-position

v =. 0j0

n =. 0

while. (2 > | v) *. (n < MAXITER) do.

v =. y + *: v

n =. n+1

end.

n

)

We need to choose a value for MAXITER, the maximum number of iterations.

The higher the maximum, the more complex the resulting image.

For present purposes let us choose a maximum of 64 iterations,

which will give a recognisable image.

MAXITER =: 64 NB. maximum number of iterations image1 =: mfn1 " 0 GRIDThe result image1 is a matrix of integers, which can be mapped to colors and then displayed on-screen with:

require '~addons/graphics/viewmat/viewmat.ijs' viewmat image1 0to produce an image appear looking something like this:

The time to compute the image:

e1 ; t1 =: 6!:2 e1 =: 'image1 =: mfn1 " 0 GRID NB. Wikipedia scalar' +--------------------------------------------+-------+ |image1 =: mfn1 " 0 GRID NB. Wikipedia scalar|43.3338| +--------------------------------------------+-------+The mfn1 function above was designed to show the algorithm for the scalar one-pixel-at-at-a-time method. Regarding its performance, here is some evidence that its performance is reasonable, that is, comparable to the published Rosetta Code version.

The verb mfn2 is adapted from the verb mcf of the Rosetta Code treatment. It differs only by replacing a numerical constant by the parameter MAXITER.

mfn2 =: (<: 2:)@|@(] ((*:@] + [)^:((<: 2:)@|@])^: MAXITER ) 0:)We see that times for mfn1 and mfn2 are not very different:

t2 =: 6!:2 e2 =: 'image2 =: mfn2 "0 GRID NB. Rosetta ' 2 2 $ e1; t1; e2; t2 +--------------------------------------------+-------+ |image1 =: mfn1 " 0 GRID NB. Wikipedia scalar|43.3338| +--------------------------------------------+-------+ |image2 =: mfn2 "0 GRID NB. Rosetta |39.9882| +--------------------------------------------+-------+and the image from the Rosetta code is recognisably similar to that from the Wikipedia design,

viewmat image2 0

31.6.2 Planar Version

Now we look at a version which computes all pixels at once. Here is a first attempt. It is is a straightforward developement of mfn1 but here all the computations for every pixel are allowed to run for the maximumum number of iterations.

mfn3 =: 3 : 0 NB. y is entire grid

N =. ($ y) $ 0

v =. 0j0

for_k. i. MAXITER-1 do.

v =. y + *: v

N =. N + (2 > | v)

end.

1 + N

)

For small values of MAXITER, this is OK.

A quick demonstration, firstly of correctness:

it produces the same result as mfn1.

MAXITER =: 12 (mfn1 " 0 GRID) -: (mfn3 " 2 GRID) 1And it's faster:

t1a =: 6!:2 e1a =: 'mfn1 " 0 GRID NB. Wikip. with MAXITER=12' t3a =: 6!:2 e3a =: 'mfn3 " 2 GRID NB. Planar with MAXITER=12' 2 2 $ e1a ; t1a; e3a; t3a +-----------------------------------------+--------+ |mfn1 " 0 GRID NB. Wikip. with MAXITER=12|17.299 | +-----------------------------------------+--------+ |mfn3 " 2 GRID NB. Planar with MAXITER=12|0.695912| +-----------------------------------------+--------+Unfortunately there is a problem with any larger values of MAXITER. The repeated squaring of the complex numbers in v will ultimately produce, not infinity, but a "NaN Error", caused by subtracting infinities. Observe:

(*: ^: 10) 1j3 NB. this is OK

__j__

(*: ^: 30) 1j3 NB. but this is not

|NaN error

| (*:^:30)1j3

|[-587] c:\users\homer\14\js\31.ijs

MAXITER =: 64

Here is an attempt to avoid the NaN errors. One cycle in every 10,

those values in v which have "escaped", (that is, no longer contribute to

the final result N) are reset to small values to prevent them increasing without limit.

mfn4 =: 3 : 0

N =. ($ y) $ 0

v =. 0j0

for_k. i. MAXITER - 1 do.

if. 0 = 10 | k do.

e =. 2 < | v

v =. (v * 1-e) + (1.5j1.5 * e )

end.

v =. y + *: v

N =. N + 2 > | v

end.

N+1

)

In spite of the burden of resetting,

the timing looks about 8 times faster than the scalar method:

t4 =: 6!:2 e4 =: 'image4 =: mfn4 " 2 GRID NB. Planar, resetting' 2 2 $ e1; t1 ; e4;t4 +---------------------------------------------+-------+ |image1 =: mfn1 " 0 GRID NB. Wikipedia scalar |43.3338| +---------------------------------------------+-------+ |image4 =: mfn4 " 2 GRID NB. Planar, resetting|5.48124| +---------------------------------------------+-------+and we check the result is correct:

image4 -: image1 1Some further improvement is possible. The idea is to avoid computing the magnitudes of complex numbers because this involves computing square roots. Instead of requiring the magnitude to be less than 2, we will require the square of the magnitude to be less than 4. To do this complex numbers will be represented as a pair of reals. The resetting business is simplified.

mfn5 =: 3 : 0

'r0 i0' =. ((2 0 1 & |:) @: +. ) y NB. real and imag planes of y

assert. y -: r0 j. i0

N =. 0

a =. r0

b =. i0

for_i. i. MAXITER-1 do.

p =. *: a

q =. *: b

r =. p+q NB. square of magnitudes

N =. N + r < 4

b =. (i0 + +: a*b) <. 100

a =. (r0 + p - q) <. 100

end.

N+1

)

Timing is improved:

t5 =: 6!:2 e5 =: 'image5 =: mfn5 " 2 GRID NB. no square roots' 3 2 $ e1;t1; e4;t4; e5;t5 +---------------------------------------------+-------+ |image1 =: mfn1 " 0 GRID NB. Wikipedia scalar |43.3338| +---------------------------------------------+-------+ |image4 =: mfn4 " 2 GRID NB. Planar, resetting|5.48124| +---------------------------------------------+-------+ |image5 =: mfn5 " 2 GRID NB. no square roots |3.3594 | +---------------------------------------------+-------+and the result is correct

image5 -: image1

1

31.7 The Special Code of Appendix B of the Dictionary

In Appendix B of the J Dictionary there are listed about 80 different expressions which are given special treatment by the interpreter to improve performance. Many more expressions are listed in the Release NotesAn example is +/ . Notice that the speedup only occurs when +/ itself (as opposed to something equivalent) is recognised.

data =: ? 1e6 $ 1e6 NB. a million random integers plus =: + t20 =: 6!:2 e20 =: 'plus / data' t21 =: 6!:2 e21 =: '+/ data' 2 2 $ e20 ; t20; e21; t21 +-----------+----------+ |plus / data|0.534446 | +-----------+----------+ |+/ data |0.00183724| +-----------+----------+The special expressions can be unmasked with the f. adverb which translates all defined names into the built-in functions.

foo =: plus / t22 =: 6!:2 e22 =: 'foo data' t23 =: 6!:2 e23 =: 'foo f. data' 2 2 $ e22 ; t22; e23; t23 +-----------+----------+ |foo data |0.525956 | +-----------+----------+ |foo f. data|0.00188502| +-----------+----------+The recommendation here is NOT that the programmer should look for opportunities to use these special cases. The recommendation is ONLY to allow the interpreter to find them, by giving, where appropriate, a final little polish to tacit definitions with f. .

This brings us to the end of Chapter 31