A Programming Language

Kenneth E. Iverson

Research Division, IBM Corporation

Yorktown Heights, New York

Abstract

The paper describes a succinct problem-oriented programming language.

The language is broad in scope,

having been developed for,

and applied effectively in,

such diverse areas as microprogramming, switching theory,

operations research, information retrieval, sorting theory,

structure of compilers, search procedures, and language translation.

The language permits a high degree of useful formalism.

It relies heavily on a systematic extension

of a small set of basic operations

to vectors, matrices, and trees,

and on a family of flexible selection operations

controlled by logical vectors.

Illustrations are drawn from a variety of applications.

The programming language outlined

[1]

here has been designed for the succinct description

of algorithms.

The intended range of algorithms is broad;

effective applications include microprogramming,

switching theory, operations research,

information retrieval, sorting theory,

structure of compilers, search procedures,

and language translation.

The symbols used have been chosen

so as to be concise and mnemonic,

and their choice has not been restricted to the character

sets provided in contemporary printers and computers.

A high degree of formalism is provided.

Basic Operations

The language is based on a consistent unification and extension of existing mathematical notations, and upon a systematic extension of a small set of basic arithmetic and logical operations to vectors, matrices, and trees. The arithmetic operations include the four familiar arithmetic operations and the absolute value (denoted by the usual symbols) as well as the floor, ceiling, and residue functions denoted and defined as follows:

|

where ⌊x⌋, ⌈x⌉, m, n, and q are integers.

The logical operations and, or, and not

are defined upon the logical variables 0 and 1 and are

denoted by ∧, ∨, and an overbar.

They are augmented

by the relational statement (proposition)

| sgn x = (x > 0) – (x < 0) |

Vectors and Matrices

A vector is denoted by a bold italic lowercase letter (e.g., x), its i-th component by xi, and its dimension by ν(x). A matrix is denoted by a bold italic uppercase letter (e.g., X), its i-th row by X i, its j-th column by Xj, its ij-th element by Xji, its row dimension (i.e., the common dimension of its row vectors) by ν(X), and its column dimension by μ(X).

All of the basic operations are extended component-by-component to vectors and matrices. Thus,

|

The symbol (n)

will denote a logical vector

of dimension n whose components are all unity,

and

(n)

(n)

| y = (k ↑ x) ↔ yi = xj, |

Reduction

For each basic binary operator  ,

the

,

the  -reduction

-reduction /x

/x

/x =

(…((x1 /x =

(…((x1  x2)

x2)  x3)

x3)  …

…  xν(x)).

xν(x)).

|

= 1,

= 1,Reduction is extended to matrices row-by-row:

z =  /X

↔ zi = /X

↔ zi =

/X i, /X i,

|

z =  //X

↔ zi = //X

↔ zi =

/Xi, /Xi,

|

For example , if u =

. .

|

The reduction operation can be extended

to any relation R

by substituting R

for  in the formal definition above.

The parentheses in the definition

now signify relational statements as well as grouping,

and thus the expression ≠/u

denotes the application of the exclusive-or

over all components of the logical vector u.

For example,

in the formal definition above.

The parentheses in the definition

now signify relational statements as well as grouping,

and thus the expression ≠/u

denotes the application of the exclusive-or

over all components of the logical vector u.

For example,

≠/u = 2|+/u, and

. .

|

,

a useful companion to De Morgan’s law.

,

a useful companion to De Morgan’s law.

Matrix Product

The conventional matrix product AB may be defined as:

| (AB)ji = +/ (Ai × Bj). |

B

BA  B

= B

=  1/ (Ai 1/ (Ai

2

Bj), 2

Bj),

|

1

and

1

and  2

are any operators or relations

with suitable domains.

2

are any operators or relations

with suitable domains.

Thus  X

X

s = M

x x

|

As further examples,

Q

Q Q

Q

De Morgan’s law and the identity

≠/u =

|

. .

|

Selection

The formation of a vector y from a vector x by deleting certain components of x will be denoted by

| y = u/x, |

/x

/x )/x

)/xThe operation u/x is called compression and is extended to matrices by row and by column as follows:

| Z = u/X | ↔ | Z i = u/X i, | |

| Z = u//X | ↔ | Zi = u/Xi. |

X  Y

= ( Y

= ( /X) /X)

( ( //Y) +

(u/X) //Y) +

(u/X)  (u//Y)

(u//Y)

|

u/(X  Y) =

X Y) =

X  (u/Y), (u/Y),

|

u//(X  Y) =

(u//X) Y) =

(u//X)  Y. Y.

|

To illustrate other uses of compression, consider a bank ledger L so arranged that Li represents the i-th account, and L1, L2, and L3 represent the vector of names, of account numbers, and of balances, respectively. Then the preparation of a list P (in the same format) of all accounts having a balance less than 2 dollars is described by the statement:

| P ← (L3 < 2∊)//L, |

Three useful operations converse to compression are defined as follows:

|

\a, u, b\ =

/ \a, u, u\b/ , \a, u, u\b/ ,

| |

/a, u, b/ =

\ /a, u, u/b\ . /a, u, u/b\ .

|

Special Vectors

In addition to the full vector

∊(n) already defined,

it is convenient to define the prefix vector

⍺ j(n)

as a logical vector of dimension n

whose first j components are unity.

The suffix vector

⍵ j(n)

is defined analogously.

An infix vector having i leading 0’s

followed by j 1’s can be denoted by

Mixed Base Value

If the components of the vector y specify the radices of a mixed base number system and if x is any numerical vector of the same dimension, then the value of x in that number system is called the base y value of x, is denoted by y⊥x, and is defined formally by

y⊥x =

w  x, x,

|

The value of x in a decimal system is denoted

by (10∊)⊥x,

and in a binary system by either

(2∊)⊥x, or ⊥x.

Moreover, if y is any

real number, then (y∊)⊥x

denotes the polynomial in y

with coefficients x.

Application to Microprogramming

To illustrate the use of the notation

in describing the operation of a computer,

consider the IBM 7090 with a memory M

of dimension

|

Instruction Fetch of IBM 7090

Figure 1

Step 1 shows the selection of the next instruction from the memory word specified by the instruction counter s and its transfer to the command register c. Step 2 shows the incrementation (reduced modulo 215) of the counter s. The logical function k1(c) of step 3 determines whether the instruction just fetched belongs to the class of instructions which are subject to indirect addressing, the bits c12 and c13 determine whether this particular instruction is to be indexed, and a is therefore set to unity only if indirect addressing is to be performed.

The function k2(c)

determines whether the instruction is indexable.

If k2(c) = 0,

the branch from step 4 to step 7 skips

the potential indexing of steps 5 and 6.

As shown by step 6, indexing proceeds

by oring together the index registers

(i.e., rows of I)

selected by the three tag bits

t =

The fetch terminates immediately from step 7 if no indirect addressing is indicated; otherwise step 8 performs the indirect addressing and the indexing phase is repeated. Step 9 limits the indirect addressing to a single level. It may be noted that all format information is presented directly in the microprogram.

The description of the execution phase of computer operation

will be illustrated by the family of instructions

for logical functions in the 7090.

Representing the 38-bit accumulator

by the logical vector u

|

Instructions in IBM 7090

Figure 2

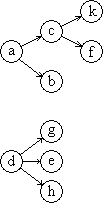

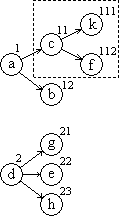

Ordered Trees

A tree can be represented graphically as in Figure 3(a) and, since it is a special case of a directed graph, it can also be represented by a node vector n and a connection matrix C as in Figure 3(b).

|

|

||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| Figure 3(a) | Figure 3(b) |

Defining an ordered tree as a tree

in which the set of branches emanating from each node

has a specific ordering,

a simple indexing system for order trees can be defined

in the manner indicated by Figure 4(a).

The dimension of the vector index i

assigned to each node is equal to the number

of the level on which it occurs.

If T is an ordered tree,

then Ti

will denote the subtree rooted in the node

with index i.

Thus T(1,1)

is the subtree enclosed in broken lines in Figure 4(a).

Moreover, Ti

will denote the (unique) path vector

comprising all nodes from the root to node i.

Thus T(1,2) =

|

|

| ||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| T Figure 4(a) | [T Figure 4(b) | ]T Figure 4(c) |

The index vector notation proves very convenient in describing algorithms involving tree operands. Moreover, it provides a simple formalization of the two important respresentations of a tree, the Lukasiewicz representation and the level-by-level list.

If each index vector is augmented by sufficient null components (denoted by 0) to bring all to a common dimension equal to the height of the tree, then they may all be arrayed in an index matrix I as in Figure 4(b). If, as in Figure 4(b), the node vector n is defined such that nj is the value of the node indicated by (the significant part of) the index vector I j, then the matrix obtained by appending n to I provides an unambiguous representation of the tree T. It is convenient to also append the degree vector d such that dj is the degree (i.e., the number of branches emanating from) node I j. The resulting matrix of ν(T)+2 columns is called a full list matrix of T.

Any matrix obtained by interchanging rows of a full list matrix is also a full list matrix and is again an unequivocal representation of the tree. If, as in Figure 4(c), the index vectors are right justified and arranged in increasing order on their value as integers (in a positional number system), the resulting list matrix is called a full right list matrix and is denoted by ]T. If, as in Figure 4(b), the index vectors are left justified and arranged in increasing order as fractions, the list matrix is called a full left list matrix and is denoted by [T.

The right list groups nodes by levels,

and the left list by subtrees.

Moreover, in both cases the degree and node vector together

(that is, ⍺2/(]T)

or ⍺2/([T))

can be shown to provide an unequivocal representation

of the tree without the index matrix.

If,as in the case of a tree representing

a compound statement involving operators of known degree,

the degree vector d

is a known function of the node vector n,

then the tree may be represented by the node vector

n alone.

In the case of the left list,

this leads to the Lukasiewicz[a]

notation for compound statements.

Applications of Tree Notation

The tree notation and the right and left list

matrices are useful in many

areas[b].

These include sorting algorithms

such as the repeated selection sort

[4],

the analysis of compound statements

and their transformation to optimal form

as required in a compiler,

and the construction of an optimal variable-length code

of the Huffman

[5]

prefix type.

The sorting algorithm proceeds level by level

and the right list representation of the tree

is therefore most suitable.

The analysis of a compound statement

proceeds by subtrees and the left list

(i.e., Lukasiewicz) form is therefore appropriate.

The index vectors of the leaves of any tree

clearly form a legitimate Huffman prefix code

and the construction of such a code

proceeds by combining subtrees in a manner

dictated by the frequencies of the characters

to be encoded,

and therefore employs a left list.

However, the characters are finally assigned

in order of decreasing frequency to the leaves

in right list order,

and the index matrix produced

must therefore be brought to right list order.

References

| 1. | Iverson, K.E., “A Programming Language”, Wiley, 1962. | |

| 2. | Lukasiewicz, Jan, Aristotle’s Syllogistic From the Standpoint of Modern Formal Logic, Clarendon Press , Oxford, 1951, p. 78 | |

| 3. | Burks, A.W., D.W. Warren, and J.B. Wright, “An Analysis of a Logical Machine Using Parenthesis-free Notation”, Mathematical Tables and Other Aids to Computation, Vol. VIII (1954), pp 53 - 57 | |

| 4. | Friend, E.H., “Sorting on Electronic Computer Systems”, J. Assoc. Comp. Mach. 3, pp 134 - 168 (March 1956) | |

| 5. | Huffman, D.A., “A Method for the Construction of Minimum Redundancy Codes”, Proc. IRE, Vol. 40 (1952) pp 1098 - 1101 |

Notes

| a. | First introduced by J. Lukasiewicz [2], and first analyzed by Burks et al [3]. | |

| b. | For detailed treatments of the applications mentioned, see Reference 1. |

Originally appeared in the Proceedings of the AFIPS Spring Joint Computer Conference, San Francisco, 1962-05-01 to -03. The notation has been changed to use italic (instead of Roman) lower case for scalars, bold italic (instead of underlined) lower case for vectors, and bold italic (instead of underlined) upper case for matrices. These changes are consistent with usage in [1].

| created: | 2009-12-08 20:15 |

| updated: | 2016-07-29 21:40 |