A Common Language

for Hardware, Software, and Applications

Kenneth E. Iverson

Thomas J. Watson Research Center, IBM

Yorktown Heights, New York

Introduction

Algorithms commonly used in automatic data processing are, when considered in terms of the sequence of individual physical operations actually executed, incredibly complex. Such algorithms are normally made amenable to human comprehension and analysis by expressing them in a more compact and abstract form which suppresses systematic detail. This suppression of detail commonly occurs in several fairly well defined stages, providing a hierarchy of distinct descriptions of the algorithm at different levels of detail. For example, an algorithm expressed in the FORTRAN language may be transformed by a compiler to a machine code description at a greater level of detail which is in turn transformed by the “hardware” of the computer into the detailed algorithm actually executed.

Distinct and independent languages have commonly been developed for the various levels used. For example, the operations and syntax of the FORTRAN language show little semblance to the operations and syntax of the computer code into which it is translated, and neither FORTRAN nor the machine language resemble the circuit diagrams and other descriptors of the processes eventually executed by the machine. There are, nevertheless, compelling reasons for attempting to use a single “universal” language applicable to all levels, or at least a single core language which may be slightly augmented in different ways at the various levels.

First, it is difficult, and perhaps undesirable, to make a precise separation into a small number of levels. For example, the programmer or analyst operating at the highest (least detailed) level, may find it convenient or necessary to revert to a lower level to attain greater efficiency in eventual execution or to employ convenient operations not available at the higher level. Programming languages such as FORTRAN commonly permit the use of lower levels, frequently of a lower level “assembly language” and of the underlying machine language. However, the employment of disparate languages on the various levels clearly complicates their use in this manner.

Second, it is not even possible to make a clear separation of level between the software (metaprograms which transform the higher level algorithms) and the hardware, since the hardware circuits may incorporate permanent or semipermanent memory which determines its action and hence the computer language. If this special memory can itself be changed by the execution of a program, its action may be considered that of software, but if the memory is “read-only” its action is that of hardware—leaving a rather tenuous distinction between software and hardware which is likely to be further blurred in the future.

Finally, in the design of a data processing system it is imperative to maintain close communication between the programmers (i.e., the ultimate users), the software designers, and the hardware designers, not to mention the communication required among the various groups within any one of these levels. In particular, it is desirable to be able to describe the metaprograms of the software and the microprograms of the hardware in a common language accessible to all.

The language presented in

Reference 1

shows promise as a universal language,

and the present paper is devoted to illustrating

its use at a variety of levels,

from microprograms, through metaprograms,

to “applications”

programs in a variety of areas.

To keep the treatment within reasonable bounds,

much of the illustration will be limited to

reference to other published material.

For the same reason the presentation

of the language itself will be

limited to a summary of that portion

required for microprogramming (Table 1),

augmented by brief definitions

of further operations as required.

Microprograms

In the so-called “systems design” of a computer it is perhaps best to describe the computer at a level suited to the machine language programmer. This type of description has been explored in detail for a single machine (the IBM 7090) in Reference 1, and more briefly in Reference 2. Attention will therefore be restricted to problems of description on a more detailed level, to specialized equipment such as associative memory, and to the practical problem of keying and printing microprograms which arise from their use in design automation and simulation.

The need for extending the detail in a microprogram may arise from restrictions on the operations permitted (e.g., logical or and negation, but not and), from restrictions on the data paths provided, and from a need to specify the overall “control” circuits which (by controlling the data paths) determine the sequence in the microprogram, to name but a few. For example, the basic “instruction fetch“ operation of fetching from a memory (i.e., a logical matrix) M the word (i.e., row) M i selected according to the base two value of the instruction location register s (that is, i = ⊥s), and transferring it to the command register c, may be described as

| c ← M⊥s. |

|

a ← s c ← M⊥a. |

),

that every transfer from a register

(or word of memory) x,

to a register (or word of memory)

y must be of the form

),

that every transfer from a register

(or word of memory) x,

to a register (or word of memory)

y must be of the form

| y ← x ∨ y, |

|

In this final form, the successive statements correspond directly (except for the bracketed pair 4 and 5 which together comprise an indivisible operation) to individual register-to-register transfers. Each statement can, in fact, be taken as the “name” of the corresponding set of data gates, and the overall control circuits need only cycle through a set of states which activate the data gates in the sequence indicated.

The sequence indicated in a microprogram such as the above is more restrictive than necessary and certain of the statements (such as 1 and 3 or 6 and 7) could be executed concurrently without altering the overall result. Such overlapping is normally employed to increase the speed of execution of microprograms. The corresponding relaxation of sequence constraints complicates their specification, e.g., execution of statement k might be permitted to begin as soon as statements h, i and j were completed. Senzig (Reference 3) proposes some useful techniques and conventions for this purpose.

The “tag” portion of an associative memory can, as shown in Reference 2, be characterized as a memory M, an argument vector x, and a sense vector s related by the expression

s = M  x

x

|

, ,

|

Because the symbols used in the language

have been chosen for their mnemonic properties

rather than for compatibility with the

character sets of existing keyboards and printers,

transliteration is required in entering

microprograms into a computer,

perhaps for processing by a simulation

or design automation metaprogram.

For that portion of the language

which is required in microprogramming,

Reference 5

provides a simple and mnemonic solution

of the transliteration problem.

It is based upon a two-character representation

of each symbol in which the second character

need be specified but rarely.

Moreover, it provides a simple representation

of the index structure (permitting subscripts

and superscripts to an arbitrary number of levels)

based upon a Lukasiewicz or parenthesis-free

representation of the corresponding tree.

Metaprograms

Just as a microprogram description of a computer (couched at a suitable level) can provide a clear specification of the corresponding computer language, so can a program description of a compiler or other metaprogram give a clear specification of the “macro-language” which it accepts as input. No complete description of a compiler expressed in the present language has been published, but several aspects have been treated. Brooks and Iverson (Chapter 8, Reference 6) treat the SOAP assembler in some detail, particularly the use of the open addressing system and “availability” indicators in the construction and use of symbol tables, and also treat the problem of generators. Reference 1 treats the analysis of compound statements in compilers, including the optimization of a parenthesis-free statement and the translations between parenthesis and parenthesis-free forms. The latter has also been treated (using an emasculated form of the language) by Oettinger (Reference 7) and by Huzino (Reference 8); it will also be used for illustration here.

Consider a vector c representing

a compound statement

in complete[a] parenthesis form

employing operators drawn from a set

p (e.g., p =

|

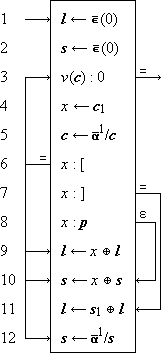

Program 1

Translation from complete parenthesis statement c

to equivalent Lukasiewicz statement l

Program 1 -

The components of c are

examined, and deleted from c,

in order from left to right (steps 4, 5).

According to the decisions on steps 6, 7,

and 8,[b]

each component is discarded if it is a left parenthesis,

appended at the head of the resulting vector

l if it is a variable (step 9),

appended at the head of the auxiliary stack vector

s if it is an operator (step 10),

and initiates a transfer of the leading component

of the stack s to the head

of the result l

if it is a right parenthesis (steps 11, 12).

The behavior is perhaps best appreciated

by tracing the program for a given case,

e.g., if c =

Applications

Areas in which the programming language has been applied include search and sorting procedures, symbolic logic, linear programming, information retrieval, and music theory. The first two are treated extensively in Reference 1, and Reference 9 illustrates the application to linear programming by a 13-step algorithm for the simplex method. The areas of symbolic logic and matrix algebra illustrate particularly the utility of the formalism provided. Salton (Reference 10) has treated some aspects of information retrieval, making particular use of the notation for trees. Kassler’s use in music concerns the analysis of Schoenberg’s 12-tone system of composition (Reference 11).

Three applications will be illustrated here: search procedures, the relations among the canonical forms of symbolic logic, and matrix inversion.

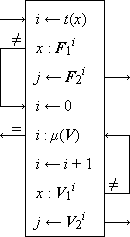

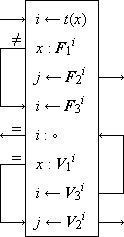

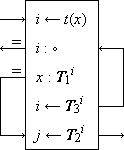

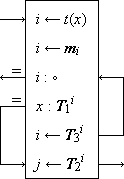

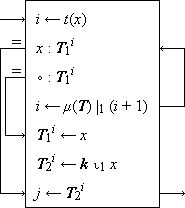

Search Algorithms. Figure 1 shows search programs and examples (taken from Reference 1) for five methods of “hash addressing” (cf. Peterson, Reference 12), wherein the functional correspondent of an argument x is determined by using some key transformation function t which maps each argument x into an integer i in the range of the indices of some table (i.e., matrix) which contains the arguments and their correspondents. The key transformation t is, in general, a many-to-one function and the index i is merely used as the starting point in searching the table for the given argument x. Figure 1 is otherwise self-explanatory. Method (e) is the widely used open addressing system described by Peterson (Reference 12).

Symbolic Logic.

If x is a logical vector

and T is a logical matrix of dimension

Expansion of the expression p =

x

x

| f(x) | = γ(f,∨)

p p | |

= γ(f,∨)

(T (T

x), x),

|

i(f) = γ(f,∨)

(T (T

), ),

| (1) |

denotes the transpose of

denotes the transpose of  .

It is easily shown that

.

It is easily shown that

In a similar manner, the expression for the exclusive disjunctive canonical form may be written (Reference 1, Chapter 7) as

i(f) = γ(f,≠)

(T (T

), ),

| (2) |

.

.Matrix Inversion. The method of matrix inversion using Gauss-Jordan (complete) elimination with pivoting and restricting the total major storage to a single square matrix augmented by one column (described in Reference 14 and 15) involves enough selection, permutation, and decision type operations to render its complete description by classical matrix notation rather awkward. Program 2 describes the entire process. The starred statements perform the pivoting operations and their omission leaves a valid program without pivoting; exegesis of the program will first be limited to the abbreviated program without pivoting.

|

Program 2

Matrix inversion by Gauss-Jordan elimination

Program 2 - Step 1 initializes the counter

k which limits (step 11) the number of

iterations and step 3 appends to the given

square matrix M

a final column of the form

The net result of step 9 is to bring the

next pivot row to first position and the next

pivot element to first position within it by

(1) cycling the old pivot row to last place

and the remaining rows up by one place, and

(2) cycling the leading column

1

1

M

M

The pivoting provided by steps 2, 4, 5, 6,

10, and 13 proceeds as follows.

Step 4 determines the index j of the next pivot row by

selecting the maximum[e]

over the magnitudes of the first k elements of the first column,

where

A description in the ALGOL language of

matrix inversion by the Gauss-Jordan method is provided in

Reference 16.

References

| 1. | Iverson, K.E., A Programming Language, Wiley, 1962. | |

| 2. | Iverson, K.E., “A Programming Language”, Spring Joint Computer Conference, San Francisco, May 1962. | |

| 3. | Senzig, D.N., “Suggested Timing Notation for the Iverson Notation”, Research Note NC-120, IBM Corporation. | |

| 4. | Falkoff, A.D., “Algorithms for Parallel-Search Memories”, J.A.C.M., October 1962. | |

| 5. | Iverson, K.E., “A Transliteration Scheme for the Keying and Printing of Microprograms”, Research Note NC-79, IBM Corporation. | |

| 6. | Brooks, F.P., Jr., and Iverson, K.E., “Automatic Data Processing”, Wiley (in press). | |

| 7. | Oettinger, A.G., “Automatic Syntactic Analysis of the Pushdown Store”, Proceedings of the Twelfth Symposium in Applied Mathematics, April 1960, published by American Mathematical Society, 1961. | |

| 8. | Huzino, S., “On Some Applications of the Pushdown Store Technique”, Memoirs of the Faculty of Science, Kyushu University, Ser. A, Vol. XV, No. 1, 1961. | |

| 9. | Iverson, K.E., “The Description of Finite Sequential Processes”, Fourth London Symposium on Information Theory, August 1960, Colin Cherry, Ed., Butterworth and Company. | |

| 10. | Salton, G.A., “Manipulation of Trees in Information Retrieval”, Communications of the ACM, February 1961, pp. 103-114. | |

| 11. | Kassler, M., “Decision of a Musical System”, Research Summary, Communications of the ACM, April 1962, page 223. | |

| 12. | Peterson, W.W., “Addressing for Random Access Storage”, IBM Journal of Research and Development, Vol. 1, 1957, pp. 130-146. | |

| 13. | Muller, D.E., “Application of Boolean Algebra to Switching Circuit Design and to Error Detection”, Transactions of the IRE, Vol. EC-3, 1954, pp. 6-12. | |

| 14. | Iverson, K.E., “Machine Solutions of Linear Differential Equations”, Doctoral Thesis, Harvard University, 1954. | |

| 15. | Rutishauser, H., “Zur Matrizeninversion nach Gauss-Jordan”, Zeitschrift für Angewandte Mathematik und Physik, Vol. X, 1959, pp. 281-291. | |

| 16. | Cohen, D., “Algorithm 58, Matrix Inversion”, Communications of the ACM, May 1961, p. 236. |

Notes

Figure 1.

Programs and examples for methods of scanning

equivalence classes defined by a 1-origin key transformation t

| k | = | (Sunday, Monday, Tuesday, Wednesday, Thursday, Friday, Saturday) |

| t(ki) | = | 1 + (6 |0 ni), where |

| n | = | (19, 13, 20, 23, 20, 6, 19) (ni is the rank in the alphabet of the first letter of ki) |

| z | = | (2, 2, 3, 6, 3, 1, 2), where zi = t(ki) |

| d | = | (1, 2, 3, 6), and s = 6. |

Data of examples

|

|

(a) Overflow

|

|

(b) Overflow with chaining

|

|

(c) Single table with chaining

|

| ||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

(d) Single table with chaining and mapping vector

|

|

(e) Open addressing system-construction and use of table

y

y

(m × n) = zero matrix

(m × n) = zero matrix y

y /z = x and u/z = y

(Selected in

/z = x and u/z = y

(Selected in /a = (4, 6),

⍺3/x = (x0, x1, x2)

/a = (4, 6),

⍺3/x = (x0, x1, x2)

U

U /x

/x X

X Y is ordinary matrix product,

Y is ordinary matrix product,