|

To My Many Teachers

Preface

Applied mathematics is largely concerned with the design and analysis of explicit procedures for calculating the exact or approximate values of various functions. Such explicit procedures are called algorithms or programs. Because an effective notation for the description of programs exhibits considerable syntactic structure, it is called a programming language.

Much of applied mathematics, particularly the more recent computer-related areas which cut across the older disciplines, suffers from the lack of an adequate programming language. It is the central thesis of this book that the descriptive and analytic power of an adequate programming language amply repays the considerable effort required for its mastery. This thesis is developed by first presenting the entire language and then applying it in later chapters to several major topics.

The areas of application are chosen primarily for their intrinsic interest and lack of previous treatment, but they are also designed to illustrate the universality and other facets of the language. For example, the microprogramming of Chapter 2 illustrates the divisibility of the language, i.e., the ability to treat a restricted area using only a small portion of the complete language. Chapter 6 (Sorting) shows its capacity to compass a relatively complex and detailed topic in a short space. Chapter 7 (The Logical Calculus) emphasizes the formal manipulability of the language and its utility in theoretical work.

The material was developed largely in a graduate course given for several years at Harvard and in a later course presented repeatedly at the IBM Systems Research Institute in New York. It should prove suitable for a two-semester course at the senior or graduate level. Although for certain audiences an initial presentation of the entire language may be appropriate, I have found it helpful to motivate the development by presenting the minimum notation required for a given topic, proceeding to its treatment (e.g., microprogramming), and then returning to further notation. The 130-odd problems not only provide the necessary finger exercises but also develop results of general interest.

Chapter 1 or some part of it is prerequisite to each of the remaining “applications” chapters, but the applications chapters are virtually independent of one another. A complete appreciation of search techniques (Chapter 4) does, however, require a knowledge of methods of representation (Chapter 3). The cross references which do occur in the applications chapters are either nonessential or are specific to a given figure, table, or program. The entire language presented in Chapter 1 is summarized for reference at the end of the book.

In any work spanning several years it is impossible to acknowledge adequately the many contributions made by others. Two major acknowledgments are in order: the first to Professor Howard Aiken, Director Emeritus of the Harvard Computation Laboratory, and the second to Dr. F.P. Brooks, Jr. now of IBM.

It was Professor Aiken who first guided me into this work and who provided support and encouragement in the early years when it mattered. The unusually large contribution by Dr. Brooks arose as follows. Several chapters of the present work were originally prepared for inclusion in a joint work which eventually passed the bounds of a single book and evolved into our joint Automatic Data Processing and the present volume. Before the split, several drafts of these chapters had received careful review at the hands of Dr. Brooks, reviews which contributed many valuable ideas on organization, presentation, and direction of investigation, as well as numerous specific suggestions.

The contributions of the 200-odd students who suffered through the development of the material must perforce be acknowledged collectively, as must the contributions of many of my colleagues at the Harvard Computation Laboratory. To Professor G.A. Salton and Dr. W.L. Eastman, I am indebted for careful reading of drafts of various sections and for comments arising from their use of some of the material in courses. Dr. Eastman, in particular, exorcised many subtle errors from the sorting programs of Chapter 6. To Professor A.G. Oettinger and his students I am indebted for many helpful discussions arising out of his early use of the notation. My debt to Professor R.L. Ashenhurst, now of the University of Chicago, is apparent from the references to his early (and unfortunately unpublished) work in sorting.

Of my colleagues at the IBM Research Center, Messrs. L.R. Johnson and A.D. Falkoff, and Dr. H. Hellerman have, through their own use of the notation, contributed many helpful suggestions. I am particularly indebted to L.R. Johnson for many fruitful discussions on the applications of trees, and for his unfailing support.

On the technical side, I have enjoyed the assistance of unusually competent typists and draughtsmen, chief among them being Mrs. Arthur Aulenback, Mrs. Philip J. Seaward, Jr., Mrs. Paul Bushek, Miss J.L. Hegeman, and Messrs. William Minty and Robert Burns. Miss Jacquelin Sanborn provided much early and continuing guidance in matters of style, format, and typography. I am indebted to my wife for assistance in preparing the final draft.

| Kenneth E. Iverson |

| May, 1962 |

| Mount Kisco, New York |

Chapter 1 The Language

1.1 Introduction

Applied mathematics is concerned with the design and analysis of algorithms or programs. The systematic treatment of complex algorithms requires a suitable programming language for their description, and such a programming language should be concise, precise, consistent over a wide area of application, mnemonic, and economical of symbols; it should exhibit clearly the constraints on the sequence in which operations are performed; and it should permit the description of a process to be independent of the particular representation chosen for the data.

Existing languages prove unsuitable for a variety of reasons. Computer coding specifies sequence constraints adequately and is also comprehensive, since the logical functions provided by the branch instructions can, in principle, be employed to synthesize any finite algorithm. However, the set of basic operations provided is not, in general, directly suited to the execution of commonly needed processes, and the numeric symbols used for variables have little mnemonic value. Moreover, the description provided by computer coding depends directly on the particular representation chosen for the data, and it therefore cannot serve as a description of the algorithm per se.

Ordinary English lacks both precision and conciseness. The widely used Goldstine-von Neumann (1947) flowcharting provides the conciseness necessary to an over-all view of the processes, only at the cost of suppressing essential detail. The so-called pseudo-English used as a basis for certain automatic programming systems suffers from the same defect. Moreover, the potential mnemonic advantage in substituting familiar English words and phrases for less familiar but more compact mathematical symbols fails to materialize because of the obvious but unwonted precision required in their use.

Most of the concepts and operations needed

in a programming language have already been defined

and developed in one or another branch of mathematics.

Therefore, much use can and will be made

of existing notations.

However, since most notations are specialized

to a narrow field of discourse,

a consistent unification must be provided.

For example, separate and conflicting notations

have been developed for the treatment of sets,

logical variables, vectors, matrices, and trees,

all of which may, in the broad universe of discourse

of data processing, occur in a single algorithm.

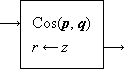

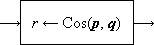

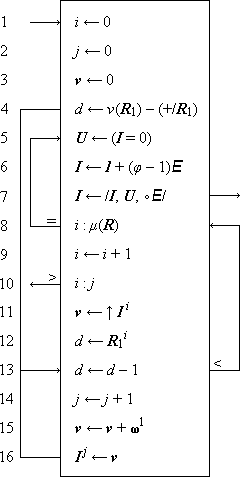

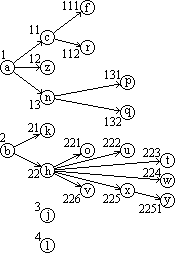

1.2 Programs

A program statement is the specification of some quantity or quantities in terms of some finite operation upon specified operands. Specification is symbolized by an arrow directed toward the specified quantity. thus “y is specified by sin x” is a statement denoted by

y ← sin x.

A set of statements together with a specified order of execution constitutes a program. The program is finite if the number of executions is finite. The results of the program are some subset of the quantities specified by the program. The sequence or order of execution will be defined by the order of listing and otherwise by arrows connecting any statement to its successor. A cyclic sequence of statements is called a loop.

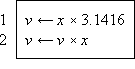

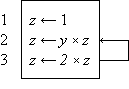

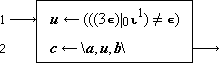

|

| ||

| Program 1.1 Finite Program | Program 1.2 Infinite Program |

Thus Program 1.1 is a program of two statements defining the result v as the (approximate) area of a circle of radius x, whereas Program 1.2 is an infinite program in which the quantity z is specified as (2y)n on the nth execution of the two statement loop. Statements will be numbered on the left for reference.

A number of similar programs may be subsumed under a single more general program as follows. At certain branch points in the program a finite number of alternative statements are specified as possible successors. One of these successors is chosen according to criteria determined in the statement or statements preceding the branch point. These criteria are usually stated as a comparison or test of a specified relation between a specified pair of quantities. A branch is denoted by a set of arrows leading to each of the alternative successors, with each arrow labeled by the comparison condition under which the corresponding successor is chosen. The quantities compared are separated by a colon in the statement at the branch point, and a labeled branch is followed if and only if the relation indicated by the label holds when substituted for the colon. The conditions on the branches of a properly defined program must be disjoint and exhaustive.

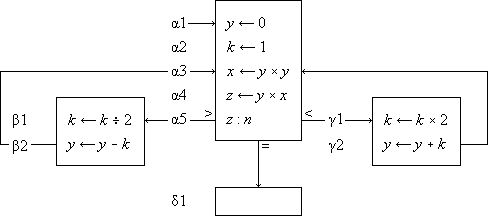

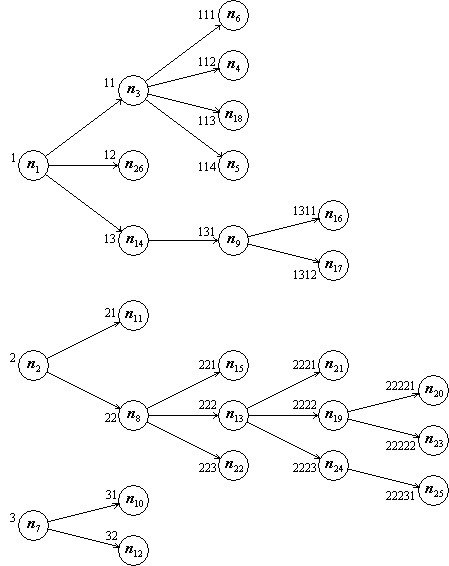

Program 1.3 illustrates the use of a branch point. Statement α5 is a comparison which determines the branch to statements β1, δ1, or γ1, according as z > n, z = n, or z < n. The program represents a crude by effective process for determining x = n2/3 for any positive cube n.

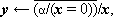

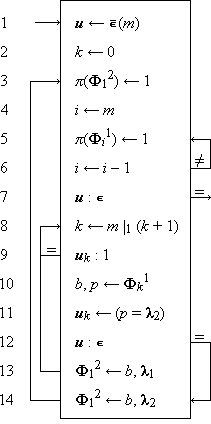

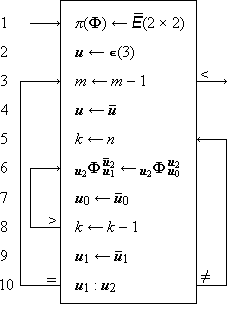

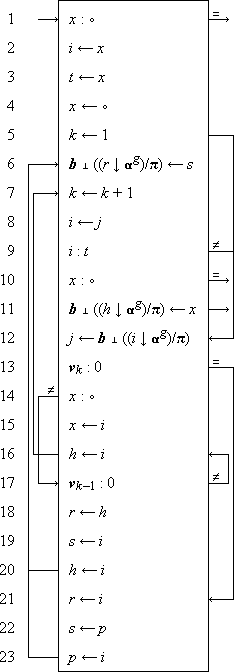

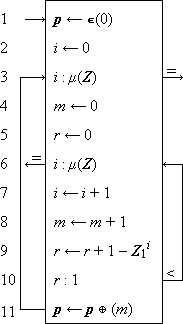

Program 1.3 Program for x = n2/3

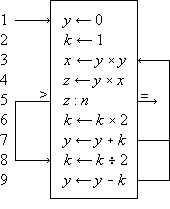

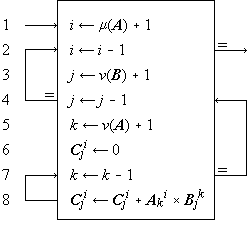

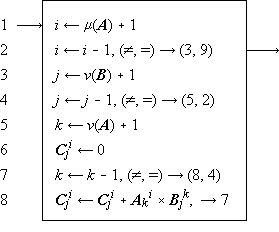

Program 1.4 shows the preceding program reorganized into a compact linear array and introduces two further conventions on the labeling of branch points. The listed successor of a branch statement is selected if none of the labeled conditions is met. Thus statement 6 follows statement 5 if neither of the arrows (to exit or to statement 8) are followed, i.e. if z < n. Moreover, any unlabeled arrow is always followed; e.g., statement 7 is invariably followed by statement 3, never by statement 8.

A program begins at a point indicated by an entry arrow (step 1) and ends at a point indicated by an exit arrow (step 5). There are two useful consequences of confining a program to the form of a linear array: the statements may be referred to by a unique serial index (statement number), and unnecessarily complex organization of the program manifests itself in crossing branch lines. The importance of the latter characteristic in developing clear and comprehensible programs is not sufficiently appreciated.

| |

| Program 1.4 Linear arrangement of Program 1.3 |

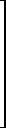

| |

| Program 1.5 Matrix multiplication |

A process which is repeated a number of times is said to be iterated, and a process (such as in Program 1.4) which includes one or more iterated subprocesses is said to be iterative. Program 1.5 shows an iterative process for the matrix multiplication

C ← AB

defined in the usual way as

Cji =  Aki ×

Bjk ,

Aki ×

Bjk ,

|

i = 1,2, …, μ(A), j = 1,2, …, ν(B), |

where the dimensions of an

|

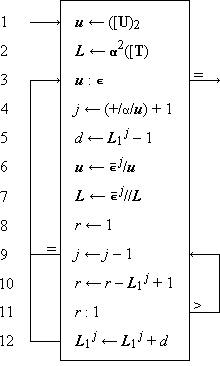

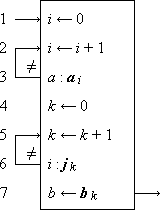

Program 1.5. Steps 1-3 initialize the indices, and the loop 5-7 continues to add successive products to the partial sum until k reaches zero. When this occurs, the process continues through step 8 to decrement j and to repeat the entire summation for the new value of j, providing that it is not zero. If j is zero, the branch to step 10 decrements i and the entire process over j and k is repeated from j = ν(B), providing that i is not zero. If i is zero, the process is complete, as indicated by the exit arrow. |

In all examples used in this chapter, emphasis will be placed on clarity of description of the process, and considerations of efficient execution by a computer or class of computers will be subordinated. These considerations can often be introduced later by relatively routine modifications of the program. For example, since the execution of a computer operation involving an indexed variable is normally more costly than the corresponding operation upon a nonindexed variable, the substitution of a variable s for the variable Cji specified by statement 5 of Program 1.5 would accelerate the execution of the loop. The variable s would be initialized to zero before each entry to the loop and would be used to specify Cji at each termination.

The practice of first setting an index to its maximum value and then decrementing it (e.g., the index k in Program 1.5) permits the termination comparison to be made with zero. Since zero often occurs in comparisons, it is convenient to omit it. Thus, if a variable stands alone at a branch point, comparison with zero is implied. Moreover, since a comparison on an index frequently occurs immediately after it is modified, a branch at the point of modification will denote branching upon comparison of the indicated index with zero, the comparison occurring after modification. Designing programs to execute decisions immediately after modification of the controlling variable results in efficient execution as well as notational elegance, since the variable must be present in a central register for both operations.

Since the sequence of execution of statements is indicated by connecting arrows as well as by the order of listing, the latter can be chosen arbitrarily. This is illustrated by the functionally identical Programs 1.3 and 1.4. Certain principles of ordering may yield advantages such as clarity or simplicity of the pattern of connections. Even though the advantages of a particular organizing principle are not particularly marked, the uniformity resulting from its consistent application will itself be a boon. The scheme here adopted is called the method of leading decisions: the decision on each parameter is placed as early in the program as practicable, normally just before the operations indexed by the parameter. This arrangement groups at the head of each iterative segment the initialization, modification, and the termination test of the controlling parameter. Moreover, it tends to avoid program flaws occasioned by unusual values of the argument.

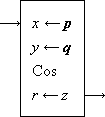

| |

| Program 1.6 Matrix multiplication using leading decisions |

For example, Program 1.6 (which is a reorganization of Program 1.5) behaves properly for matrices of dimension zero, whereas Program 1.5 treats every matrix as if it were of dimension one or greater.

Although the labeled arrow representation of program branches provides a complete and graphic description, it is deficient in the following respects: (1) a routine translation to another language (such as computer code) would require the tracing of arrows, and (2) it does not permit programmed modification of the branches.

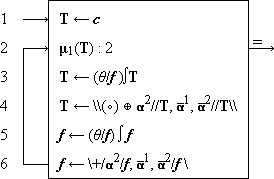

The following alternative form of a branch statement will therefore be used as well:

x : y, r → s.

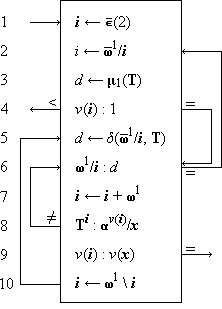

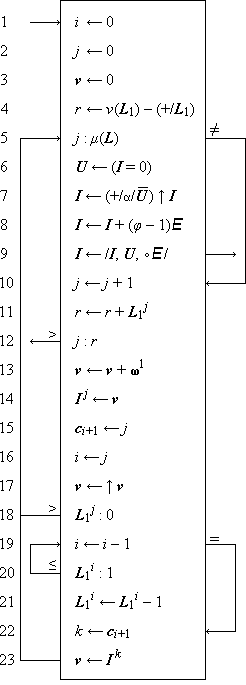

This denotes a branch to statement number si of the program if the relation xr i y holds. The parameters r and s may themselves be defined and redefined in other parts of the program. The null element ∘ will be used to denote the relation which complements the remaining relations r i; in particular, (∘)→(s), or simply →s, will denote an unconditional branch to statement s. Program 1.7 shows the use of these conventions in a reformulation of Program 1.6. More generally, two or more otherwise independent programs may interact through a statement in one program specifying a branch in a second. The statement number occurring in the branch must then be augmented by the name of the program in which the branch is effected. Thus the statement (∘) → Program 2.24 executed in Program 1 causes a branch to step 24 to occur in Program 2.

| |

| Program 1.7 A reformulation of Program 1.6, using an algebraic statement of the branching |

One statement in a program can be modified by another statement which changes certain of its parameters, usually indices. More general changes in statements can be effected by considering the program itself as a vector p whose components are the individual, serially numbered statements. All the operations to be defined on general vectors can then be applied to the statements themselves. For example, the jth statement can be respecified by the ith through the occurrence of the statement pj ← pi.

The interchange of two quantities y and x

(that is, x specifies y and

the original value of y specifies x)

will be denoted by the statement y ↔ x.

1.3 Structure of the language

Conventions

The Summary of Notation at the end of the book summarizes the notation developed in this chapter. Although intended primarily for reference, it supplements the text in several ways. It frequently provides a more concise alternative definition of an operation discussed in the text, and it also contains important but easily grasped extensions not treated explicitly in the text. By grouping the operations into related classes it displays their family relationships.

A concise programming language must incorporate families of operations whose members are related in a systematic manner. Each family will be denoted by a specific operation symbol, and the particular member of the family will be designated by an associated controlling parameter (scalar, vector, matrix, or tree) which immediately precedes the main operation symbol. The operand is placed immediately after the main operation symbol. For example, the operation k ↑ x (left rotation of x by k places) may be viewed as the kth member of the set of rotation operators denoted by the symbol ↑.

Operations involving a single operand

and no controlling parameter

(such as

In interpreting a compound operation such as k ↑ (j ↓ x) it is important to recognize that the operation symbol and its associated controlling parameter together represent an indivisible operation and must not be separated. It would, for example, be incorrect to assume that j ↑ (k ↓ x) were equivalent to k ↑ (j ↓ x), although it can be shown that the complete operations j ↓ and k ↑ do commute, that is k ↑ (j ↓ x) = j ↓ (k ↑ x).

The need for parentheses will be reduced by assuming that compound statements are, except for intervening parentheses, executed from right to left. Thus k ↑ j ↓ x is equivalent to k ↑ (j ↓ x), not to (k ↑ j) ↓ x.

Structured operands such as vectors and matrices, together with a systematic component-by-component generalization of elementary operations, provide an important subordination of detail in the description of algorithms. The use of structured operands will be facilitated by selection operations for extracting a specified portion of an operand, reduction operations for extending an operation (such as logical or arithmetic multiplication) over all components, and permutation operations for reordering components. Operations defined on vectors are extended to matrices: the extended operation is called a row operation if the underlying vector operation is applied to each row of the matrix and a column operation if it is applied to each column. A column operation is denoted by doubling the symbol employed for the corresponding row (and vector) operation.

A distinct typeface will be used for each class of operand as detailed in Table 1.8. Special quantities (such as the prefix vectors ⍺i defined in Sec. 1.7) will be denoted by Greek letters in the appropriate typeface. For mnemonic reasons, an operation closely related to such a special quantity will be denoted by the same Greek letter. For example, ⍺/u denotes the maximum prefix (Sec. 1.10) of the logical vector u. Where a Greek letter is indistinguishable from a Roman, sanserif characters will be used, e.g. E and I for the capitals of epsilon and iota.

| Type of Operand | Representation | ||||||||||||||||||||||||||||||||||||||||||||||

| Printed | Typed | ||||||||||||||||||||||||||||||||||||||||||||||

|

|

|

|||||||||||||||||||||||||||||||||||||||||||||

Table 1.8 Typographic conventions for classes of operands

Literals and variables

The power of any mathematical notation rests largely on the use of symbols to represent general quantities which, in given instances, are further specified by other quantities. Thus Program 1.4 represents a general process which determines x = n2/3 for any suitable value of n. In a specific case, say n = 27, the quantity x is specified as the number 9.

Each operand occurring in a meaningful process must be specified ultimately in terms of commonly accepted concepts. The symbols representing such accepted concepts will be called literals. Examples of literals are the integers, the characters of the various alphabets, punctuation marks, and miscellaneous symbols such as $ and %. The literals occurring in Program 1.4 are 0, 1, 2.

It is important to distinguish clearly between general symbols and literals. In ordinary algebra this presents little difficulty, since the only literals occurring are the integers and the decimal point, and each general symbol employed includes an alphabetic character. In describing more general processes, however, alphabetic literals (such as proper names) also appear. Moreover, in a computer program, numeric symbols (register addresses) are used to represent the variables.

In general, then, alphabetic literals, alphabetic variables, numeric literals, and numeric variables may all appear in a complex process and must be clearly differentiated. The symbols used for literals will be Roman letters (enclosed in quotes when appearing in text) and standard numerals. The symbols used for variables will be italic letters, italic numerals, and boldface letters as detailed in Table 1.8. Miscellaneous signs and symbols when used as literals will be enclosed in quotes in both programs and text.

It is sometimes desirable

(e.g., for mnemonic reasons) to denote

a variable by a string of alphabetic

or other symbols rather than by a single symbol.

The monolithic interpretation of such a string

will be indicated by the tie

used in musical notation, thus:

and

and

may denote the variable “inventory”,

a vector of inventory values,

and a matrix of inventory values,

respectively.

may denote the variable “inventory”,

a vector of inventory values,

and a matrix of inventory values,

respectively.

In the set of alphabetic characters, the space plays a special role. For other sets a similar role is usually played by some one element, and this element is given the special name of null element. In the set of numeric digits, the zero plays a dual role as both null element and numeric quantity. The null element will be denoted by the degree symbol ∘.

In any determinate process, each operand must be specified ultimately in terms of literals. In Program 1.4, for example, the quantity k is specified in terms of known arithmetic operations (multiplication and division) involving the literals 1 and 2. The quantity n, on the other hand, is not determined within the process and must presumably be specified within some larger process which includes Program 1.4. Such a quantity is called an argument of the process.

Domain and range

The class of arguments and the class of results of a given operator are called its domain and range, respectively. Thus the domain and range of the magnitude operation (|x|) are the real numbers and the nonnegative real numbers, respectively.

A variable is classified according

to the range of values it may assume:

it is logical, integral, or numerical,

according as the range is the set of logical variables

(that is, 0 and 1), the set of integers,

or the set of real numbers.

Each of the foregoing classes is clearly

a subclass of each class following it,

and any operation defined on a class clearly

applies to any of its subclasses.

A variable which is nonnumeric

will be called arbitrary.

In the Summary of Notation,

the range and domain of each of the operators

defined is specified in terms of the foregoing classes

according to the conventions shown in Sec. S.1.

1.4 Elementary operations

The elementary operations employed include the ordinary arithmetic operations, the elementary operations of the logical calculus, and the residue and related operations arising in elementary number theory. In defining operations in the text, the symbol ↔ will be used to denote equivalence of the pair of statements between which it occurs.

Arithmetic operations

The ordinary arithmetic operations will be denoted by the ordinary symbols +, –, ×, and ÷ and defined as usual except that the domain and range of multiplication will be extended slightly as follows. If one of the factors is a logical variable (0 or 1), the second may be arbitrary and the product then assumes the value of the second factor or zero according as the value of the first factor (the logical variable) is 1 or 0. Thus if the arbitrary factor is the literal “q”, then| 0 × q = q × 0 = 0 | |

| and | 1 × q = q × 1 = q |

According to the usual custom in ordinary algebra, the multiplication symbol may be elided.

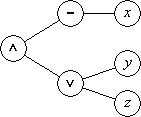

Logical operations

The elementary logical operations and, or, and not will be denoted by ∧, ∨ and an overbar and are defined in the usual way as follows:

| w ← u ∧ v | ↔ | w = 1 if and only if u = 1 and | v = 1, |

| w ← u ∨ v | ↔ | w = 1 if and only if u = 1 or | v = 1, |

w ←  | ↔ | w = 1 if and only if u = 0. |

If x and y are numerical quantities,

then the expression x < y implies

that the quantity x stands in the relation

“less than” to the quantity y.

More generally, if α and β are arbitrary entities

and R is any relation defined on them,

the relational statement

(x > 0) – (x < 0)

(commonly called the sign function or sgn x) assumes the values 1, 0, or –1 according as x is strictly positive, 0, or strictly negative. Moreover, the magnitude function |x| may be defined as |x| = x × sgn x = x × ((x > 0) – (x < 0)).

The relational statement is a useful generalization

of the Kronecker delta, that is

Residues and congruence

For each set of integers n, j, and b, with b > 0, there exists a unique pair of integers q and r such that

n = bq + r, j ≤ r < j + b.

The quantity r is called the j-residue of n modulo b and is denoted by b | j n. For example, 3 |0 9 = 0, 3 |1 9 = 3, and 3 |0 10 = 1. Moreover, if n ≥ 0, then b |0 n is the remainder obtained in dividing n by b and q is the integral part of the quotient. A number n is said to be of even parity if its 0-residue modulo 2 is zero and of odd parity if 2 |0 n = 1.

If two numbers n and m have the same j-residue modulo b, they differ by an integral multiple of b and therefore have the same k-residue module b for any k. If b | j n = b | j m, then m and n are said to be congruent mod b. Congruency is transitive and reflexive and is denoted by

m ≡ n (mod b).

In classical treatments, such as Wright (1939), only the 0-residue is considered. The use of 1-origin indexing (cf. Sec. 1.5) accounts for the interest of the 1-residue.

A number represented in a positional notation (e.g., in a base ten or a base two number system) must, in practice, employ only a finite number of digits. It is therefore often desirable to approximate a number x by an integer. For this purpose two functions are defined:

| 1. | the floor of x (or integral part of x), denoted by ⌊x⌋ and defined as the largest integer not exceeding x, | |

| 2. | the ceiling of x, denoted by ⌈x⌉ and defined as the smallest integer not exceeded by x. |

Thus

| ⌈3.14⌉ = 4, | ⌊3.14⌋ = 3, | ⌊–3.14⌋ = –4, | |||

| ⌈3.00⌉ = 3, | ⌊3.00⌋ = 3, | ⌊–3.00⌋ = –3. |

Clearly ⌈x⌉ =

–⌊–x⌋ and

⌊x⌋

≤ x ≤ ⌈x⌉.

Moreover, n = b⌊n ÷ b⌋

+ b |0 n for all integers n.

Hence the integral quotient

⌊n ÷ b⌋

is equivalent to the quantity q occurring in the definition

of the j-residue for the case j = 0.

1.5 Structured operands

Elementary operations

Any operation defined on a single operand can be generalized to apply to each member of an array of related operands. Similarly, any binary operation (defined on two operands) can be generalized to apply to pairs of corresponding elements of two arrays. Since algorithms commonly incorporate processes which are repeated on each member of an array of operands, such generalization permits effective subordination of detail in their description. For example, the accounting process defined on the data of an individual bank account treats a number of distinct operands within the account, such as account number, name, and balance. Moreover, the over-all process is defined on a large number of similar accounts, all represented in a common format. Such structured arrays of variables will be called structured operands, and extensive use will be made of three types, called vector, matrix, and tree. As indicated in Sec. S.1 of the Summary of Notation, a structured operand is further classified as logical, integral, numerical, or arbitrary, according to the type of elements in contains.

A vector x is the ordered array of elements (x1, x2, x3, …, xν(x)). The variable xi is called the ith component of the vector x, and the number of components, denoted by ν(x) (or simply by ν when the determining vector is clear from context), is called the dimension of x. Vectors and their components will be represented in lower case boldface italics. A numerical vector x may be multiplied by a numerical quantity k to produce the scalar multiple k × x (or kx) defined as the vector z such that z i = k × xi.

All elementary operations defined on individual variables are extended consistently to vectors as component-by-component operations. For example,

| z = x + y | ↔ | zi = xi + yi, | |

| z = x × y | ↔ | zi = xi × yi, | |

| z = x ÷ y | ↔ | zi = xi ÷ yi, | |

| z = ⌈x⌉ | ↔ | zi = ⌈xi⌉ | |

| w = u ∧ v | ↔ | wi = ui ∧ vi, | |

| w = (x < y) | ↔ | wi = (xi < yi). |

Thus if x = (1, 0, 1, 1) and y = (0, 1, 1, 0) then x + y = (1, 1, 2, 1), x ∧ y = (0, 0, 1, 0), and (x < y) = (0, 1, 0, 0).

Matrices

A matrix M is the ordered two-dimensional array of variables

|

The vector

The variable M ji

is called the (i,j)th component

or element of the matrix.

A matrix and its elements will be represented

by upper case boldface italics.

Operations defined on each element of a matrix

are generalized component by component

to the entire matrix.

Thus, if  is any binary operator,

is any binary operator,

P = M

N ↔

Mji

N ↔

Mji

Nji.

Nji.

Index systems

The subscript appended to a vector to designate a single component is called an index, and the indices are normally chosen as a set of successive integers beginning at 1, that is, x = (x1, x2, … xν). It is, however, convenient to admit more general j-origin indexing in which the set of successive integers employed as indices in any structured operand begin with a specified integer j.

The two systems of greatest interest

are the common 1-origin system,

which will be employed

almost exclusively in this chapter,

and the 0-origin system.

The latter system is particularly convenient

whenever the index itself must be represented

in a positional number system and will therefore

be employed exclusively in the treatment

of computer organization in Chapter 2.

1.6 Rotation

The left rotation of a vector x

is denoted by k ↑ x

and specifies the vector obtained by cyclical

left shift of the components of x

by k places.

Thus if

z = k ↑ x ↔ z i = x j, where j = ν|1(i + k).

Right rotation is denoted by k ↓ x and is defined analogously. Thus

z = k ↓ x ↔ z i = x j, where j = ν|1(i – k).

If k = 1, it may be elided. Thus ↑ b = (a, n, d, y, c).

Left rotation is extended to matrices in two ways as follows:

| A ← j ↑ B | ↔ | Ai = ji ↑ Bi | |

C ← k  B B |

↔ | Ci = kj ↑ Bj |

The first operation is an extension

of the basic vector rotation to each row

of the matrix and is therefore called row rotation.

The second operation is the corresponding

column operation and is therefore denoted

by the doubled operation symbol  .

For example, if

.

For example, if

k = (0, 1, 2),

and| B = |

|

| and |

| . |

Right rotation is extended analogously.

1.7 Special vectors

Certain special vectors warrant special symbols.

In each of the following definitions,

the parameter n will be used to specify the dimension.

The interval vector

⍳j(n)

is defined as the vector of integers

beginning with j.

Thus ⍳0(4)=(0, 1, 2, 3),

⍳1(4)=(1, 2, 3, 4),

and ⍳–7(5)=

(–7, –6, –5, –4, –3).

Four types of logical vectors are defined as follows.

The jth unit vector

∊ j(n)

has a one in the jth position, that is,

(∊ j(n))k

= (k = j).

The full vector ∊(n)

consists of all ones.

The vector consisting of all zeros is denoted

both by 0 and by  (n).

The prefix vector of weight j is denoted by

⍺ j(n)

and possesses ones in the first k positions,

where k is the lesser

of j and n.

The suffix vector

⍵ j(n)

is defined analogously.

Thus ∊2(3) = (0, 1, 0),

∊(4) = (1, 1, 1, 1),

⍺3(5) = (1, 1, 1, 0, 0),

⍵3(5) = (0, 0, 1, 1, 1), and

⍺7(5) =

⍺5(5) = (1, 1, 1, 1, 1).

Moreover,

⍵ j(n) =

j ↑ ⍺ j(n),

and ⍺ j(n) =

j ↓ ⍵ j(n).

(n).

The prefix vector of weight j is denoted by

⍺ j(n)

and possesses ones in the first k positions,

where k is the lesser

of j and n.

The suffix vector

⍵ j(n)

is defined analogously.

Thus ∊2(3) = (0, 1, 0),

∊(4) = (1, 1, 1, 1),

⍺3(5) = (1, 1, 1, 0, 0),

⍵3(5) = (0, 0, 1, 1, 1), and

⍺7(5) =

⍺5(5) = (1, 1, 1, 1, 1).

Moreover,

⍵ j(n) =

j ↑ ⍺ j(n),

and ⍺ j(n) =

j ↓ ⍵ j(n).

A logical vector of the form ⍺h(n) ∧ ⍵ j(n) is called an infix vector. An infix vector can also be specified in the form j ↓ ⍺k(n), which displays its weight and location more directly.

An operation such as x ∧ y is defined only for compatible vectors x and y, that is, for vectors of like dimension. Since this compatibility requirement can be assumed to specify implicitly the dimension of one of the operands, elision of the parameter n may be permitted in the notation for the special vectors. Thus, if y = (3, 4, 5, 6, 7), the expression ∊ × y and ∊ j × y imply that the dimensions of ∊ and ∊ j are both 5. Moreover, elision of j will be permitted for the interval vector ⍳ j(n) (or ⍳ j), and for the residue operator | j when j is the index origin in use.

It is, of course, necessary to specify the index origin in use at any given time. For example, the unit vector ∊3(5) is (0, 0, 1, 0, 0) in a 1-origin system and (0, 0, 0, 1, 0) in a 0-origin system, even though the definition (that is, (∊ j(n))k = (k = j)) remains unchanged. The prefix and suffix vectors are, of course, independent of the index origin. Unless otherwise specified, 1-origin indexing will be assumed.

The vector ∊(0) is a vector

of dimension zero and will be called

the null vector.

It should not be confused

with the special null element ∘.

1.8 Reduction

An operation (such as summation) which is applied

to all components of a vector to produce a result

of a simpler structure is called a reduction.

The  -reduction

of a vector x is denoted by

-reduction

of a vector x is denoted by

/x

and defined as

/x

and defined as

z ←

/x

↔ z =

(… ((x1

/x

↔ z =

(… ((x1

x2)

x2)

x3)

x3)

…)

…)

xν),

xν),

where  is any binary operator with a suitable domain.

Thus +/x is the sum,

×/x is the product,

and ∨/x

is the logical sum of the components

of a vector x.

For example, ×/⍳1(5)

= 1 × 2 × 3 × 4 × 5,

×/⍳1(n) = n!,

and +/⍳1(n) =

n(n + 1)/2.

is any binary operator with a suitable domain.

Thus +/x is the sum,

×/x is the product,

and ∨/x

is the logical sum of the components

of a vector x.

For example, ×/⍳1(5)

= 1 × 2 × 3 × 4 × 5,

×/⍳1(n) = n!,

and +/⍳1(n) =

n(n + 1)/2.

As a further example, De Morgan’s law

may be expressed as ∧/u

=  ,

where u is

a logical vector of dimension two.

Moreover, a simple inductive argument (Exercise 1.10)

shows that the foregoing expression is the

valid generalization of De Morgan’s law

for a logical vector u

of arbitrary dimension.

,

where u is

a logical vector of dimension two.

Moreover, a simple inductive argument (Exercise 1.10)

shows that the foregoing expression is the

valid generalization of De Morgan’s law

for a logical vector u

of arbitrary dimension.

A relation R incorporated

into a relational statement (xRy)

becomes, in effect, an operator on the variables

x and y.

Consequently, the reduction R/x

can be defined in a manner analogous to that of

( /x),

that is,

/x),

that is,

R/x = (… ((x1 R x2) R x3) R …) R xν).

The parentheses now imply relational statements as well as grouping. The relational reductions of practical interest are ≠/u, and =/u, the exclusive-or and the equivalence reduction, respectively.

The inductive argument of Exercise 1.10 shows that ≠/u = 2 |0 (+/u). For example, if u = (1,0,1,1,0), then

| ≠/u | = | ((((1 ≠ 0) ≠ 1) ≠ 1) ≠ 0) | |

| = | (((1 ≠ 1) ≠ 1) ≠ 0) | ||

| = | ((0 ≠ 1) ≠ 0) | ||

| = | (1 ≠ 0) = 1, | ||

and 2 |0 (+/u) = 2 |0 3 = 1.

Similarly, =/u =  ,

and as a consequence,

,

and as a consequence,

≠/u =  ,

,

a useful companion to De Morgan’s law.

To complete the system it is essential to define

the value of

/∊(0),

the reduction of the null vector of dimension zero,

as the identity element of the operator

or relation

/∊(0),

the reduction of the null vector of dimension zero,

as the identity element of the operator

or relation  .

Thus +/∊(0) = ∨/∊(0) = 0,

and ×/∊(0) =

∧/∊(0) = 1.

.

Thus +/∊(0) = ∨/∊(0) = 0,

and ×/∊(0) =

∧/∊(0) = 1.

A reduction operation is extended

to matrices in two ways.

A row reduction of a matrix X

by an operator  is denoted by

is denoted by

y ←

/X

/X

and specifies a vector y of dimension

μ(X) such that

yi =

/Xi.

A column reduction of X is denoted

by z ←

/Xi.

A column reduction of X is denoted

by z ←

//X

and specifies a vector z

of dimension ν(X)

such that z j =

//X

and specifies a vector z

of dimension ν(X)

such that z j =

//X j.

//X j.

For example, if

| U = |  |

1 0 1 0 |  |

| 0 0 1 1 | |||

| 1 1 1 0 |

then +/U =

1.9 Selection

Compression

The effective use of structured operands depends not only on generalized operations but also on the ability to specify and select certain elements or groups of elements. The selection of single elements can be indicated by indices, as in the expressions vi, M i, M j, and M i j. Since selection is a binary operation (i.e., to select or not to select), more general selection is conveniently specified by a logical vector, each unit component indicating selection of the corresponding component of the operand.

The selection operation defined on an arbitrary

vector a and a compatible

(i.e., equal in dimension)

logical vector u is denoted by

c ← u/a

and is defined as follows:

the vector c is obtained from

a by suppressing from

a each component

ai

for which ui = 0.

The vector u is said to compress

the vector a.

Clearly ν(c) = +/u.

For example, if u =

Row compression of a matrix,

denoted by u/A,

compresses each row vector Ai

to form a matrix of dimension

|

|

| u/v//A = v//u/A = |  |

|

|

. |

It is clear that row compression suppresses columns corresponding to zeros of the logical vector and that column compression suppresses rows. This illustrates the type of confusion in nomenclature which is avoided by the convention adopted in Sec. 1.3: an operation is called a row operation if the underlying operation from which it is generalized is applied to the row vectors of the matrix, and a column operation if it is applied to columns.

|

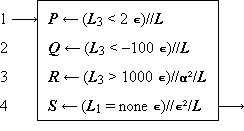

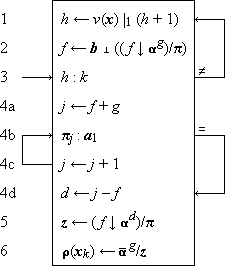

Example 1.1. A bank makes a quarterly review of accounts to produce the following four lists:

The ledger may be described by a matrix

with column vectors L1, L2, and L3, representing names, account numbers, and balances, respectively, and with row vectors L1, L2, …, Lm, representing individual accounts. An unassigned account number is identified by the word “none” in the name position. The four output lists will be denoted by the matrices P, Q, R, and S, respectively. They can be produced by Program 1.9. |

|

Legend |

Program 1.9 Selection on bank ledger L (Example 1.1)

|

Program 1.9. Since L3 is the vector of balances, and 2∊ is a compatible vector each of whose components equals two, the relational statement (L3 < 2∊) defines a logical vector having unit components corresponding to those accounts to be included in the list P. Consequently, the column compression of step 1 selects the appropriate rows of L to define P. Step 2 is similar, but step 3 incorporates an additional row compression by the compatible prefix vector ⍺² = (1,1,0) to select columns one and two of L. Step 4 represents the comparison of the name (in column L1) with the literal “none”, the selection of each row which shows agreement, and the suppression of all columns but the second. The expression “none ∊” occurring in step 4 illustrates the use of the extended definition of multiplication. |

Mesh, mask, and expansion

A logical vector u

and the two vectors

a =

/c and

b = u/c,

obtained by compressing a vector c,

collectively determine the vector c.

The operation which specifies c

as a function of a, b, and

u is called a mesh

and is defined as follows:

If a and b are

arbitrary vectors and if u

is a logical vector such that +/

/c and

b = u/c,

obtained by compressing a vector c,

collectively determine the vector c.

The operation which specifies c

as a function of a, b, and

u is called a mesh

and is defined as follows:

If a and b are

arbitrary vectors and if u

is a logical vector such that +/ = ν(a) and +/u

= ν(b), then the mesh of

a and b on

u is denoted by

= ν(a) and +/u

= ν(b), then the mesh of

a and b on

u is denoted by

/c

= a and u/c

= b.

The mesh operation is equivalent to choosing successive

components of c from a

or b according as the successive components

of u are 0 or 1.

If, for example, a =

/c

= a and u/c

= b.

The mesh operation is equivalent to choosing successive

components of c from a

or b according as the successive components

of u are 0 or 1.

If, for example, a =

(a) |

(b) | ||||||||||||||||||

Legend |

Program 1.10 Interfiling program

Program 1.10a (which describes the merging of

the vectors a and b,

with the first and every third component thereafter

chosen from a) can be described

alternatively as shown in Program 1.10b.

Since ⍳1 =

Mesh operations on matrices are defined analogously, row mesh and column mesh being denoted by single and double reverse virgules, respectively.

The catenation of vectors

y

y  …

…

z

z

x  y

y  …

…

z =

(x1, x2, …,

xν(x),

y1, y2, …,

zν(z)).

z =

(x1, x2, …,

xν(x),

y1, y2, …,

zν(z)).

Catenation is clearly associative and for two vectors x and y it is a special case of the mesh \x, u, y\ in which u is a suffix vector.

In numerical vectors (for which addition of two vectors is defined), the effect of the general mesh operation can be produced as the sum of two meshes, each involving one zero vector. Specifically,

| \x, u, y\ | = | \x, u, 0\ + \0, u, y\ |

| = | \0,  , x\ +

\0, u, y\. , x\ +

\0, u, y\. |

The operation \0, u, y\

proves very useful in numerical work and will be called

expansion of the vector y,

denoted by u\y.

Compression of u\y

by u and by  clearly yields y and 0, respectively.

Moreover, any numerical vector x

can be decomposed by a compatible vector

u according to the relation

clearly yields y and 0, respectively.

Moreover, any numerical vector x

can be decomposed by a compatible vector

u according to the relation

x =

\

\ /x +

u\u/x.

/x +

u\u/x.

The two terms are vectors of the same dimension which have no nonzero components in common. Thus if u = (1, 0, 1, 0, 1), the decomposition of x appears as

x = (0, x2, 0, x4, 0) + (x1, 0, x3, 0, x5).

Row expansion and column expansion of matrices are defined and denoted analogously. The decomposition relations become

X =

\

\ /X +

u\u/X,

/X +

u\u/X,

and

X =

\\

\\ //X +

u\\u//X.

//X +

u\\u//X.

The mask operation is defined formally as follows:

c ←

/a, u, b/ ↔

/c =

/c =

/a, and

u/c = u/b.

/a, and

u/c = u/b.

The vectors c, a, u, and b are clearly of a common dimension and ci = ai or bi according as ui = 0 or ui = 1. Moreover, the compress, expand, mask, and mesh operations on vectors are related as follows:

| /a, u, b/ | = | \ /a,

u, u/b\, /a,

u, u/b\, |

| \a, u, b\ | = | / \a,

u, u\b/. \a,

u, u\b/. |

Analogous relations hold for the row mask and row mesh and for the column mask and column mesh.

Certain selection operations are controlled by logical matrices rather than by logical vectors. The row compression U/A selects elements of A corresponding to the nonzero elements of U. Since the nonzero elements of U may occur in an arbitrary pattern, the result must be construed as a vector rather than a matrix. More precisely, U/A denotes the catenation of the vectors U i/Ai obtained by row-by-row compression of A by U. The column compression U//A denotes the catenation of the vectors Uj/Aj. If, for example

| U = |  |

0 1 0 1 1 |  |

| 1 1 0 0 0 | |||

| 0 1 1 0 0 |

then

U/A =

(A21,

A41,

A51,

A12,

A22,

A23,

A33),

and

U//A =

(A12,

A21,

A22,

A23,

A33,

A41,

A51).

Compression by the full matrix E

(defined by  = 0)

produces either a row list

(E/A)

or a column list

(E//A)

of the matrix A.

Moreover, a numerical matrix X

can be represented jointly by the logical matrix

U and the row list

U/X

(or the column list U//X),

where U = (X ≠ 0).

If the matrix X is sparse

(i.e., the components are predominantly zero),

this provides a compact representation

which may reduce the computer storage

required for X.

= 0)

produces either a row list

(E/A)

or a column list

(E//A)

of the matrix A.

Moreover, a numerical matrix X

can be represented jointly by the logical matrix

U and the row list

U/X

(or the column list U//X),

where U = (X ≠ 0).

If the matrix X is sparse

(i.e., the components are predominantly zero),

this provides a compact representation

which may reduce the computer storage

required for X.

The compression operations controlled

by matrices also generate a group of

corresponding mesh and mask operations

as shown in Sec. S.9.

1.10 Selection vectors

The logical vector u involved in selection operations may itself arise in various ways. It may be a prefix vector ⍺ j, a suffix ⍵ j, or an infix (i ↓ ⍺ j); the corresponding compressed vectors ⍺ j/x, ⍵ j/x, and (i ↓ ⍺ j)/x are called a prefix, suffix, and infix of x, respectively.

Certain selection vectors arise as functions of other vectors, e.g., the vector (x ≥ 0) can be used to select all nonnegative components of x, and (b ≠ *∊) serves to select all components of b which are not equal to the literal “*”. Two further types are important: the selection of the longest unbroken prefix (or suffix) of a given logical vector, and the selection of the set of distinct components occurring in a vector. The first is useful in left (or right) justification or in a corresponding compression intended to eliminate leading or trailing “filler components” of a vector (such as left zeros in a number or right spaces in a short name).

For any logical vector u, the maximum prefix of u is denoted by ⍺/u and defined as follows:

u ← ⍺/u ↔ v = ⍺ j

where j is the maximum value for which ∧/(⍺ j/u) = 1. The maximum suffix is denoted by ⍵/u and is defined analogously. If, for example, u = (1, 1, 1, 0, 1, 1, 0, 0, 1, 1), then ⍺/u = (1, 1, 1, 0, 0, 0, 0, 0, 0, 0), ⍵/u = (0, 0, 0, 0, 0, 0, 0, 0, 1, 1), +/⍺/u = 3, and +/⍵/u = 2.

The leading zeros of a numerical vector x can clearly be removed either by compression:

or by left justification (normalization):

z ← (+/⍺/(x = 0)) ↑ x.

The extension of the maximum prefix operation

to the rows of a logical matrix U

is denoted by ⍺/U

and defined as the compatible logical matrix

V, such that

V i

= ⍺/U i.

The corresponding maximum column prefix operation

is denoted by ⍺//U.

Right justification of a numerical matrix

X is achieved by the rotation

k ↓ X,

where k = +/⍵/(X = 0),

and top justification is achieved

by the rotation

((+//⍺//(X = 0)

X

(see Sec. S.6.)

X

(see Sec. S.6.)

A vector whose components are all distinct will be called an ordered set. The forward set selector on b is a logical vector denoted by σ/b and defined as follows: the statement v ← σ/b implies that vj = 1 if and only if bj differs from all preceding components of b. Hence v/b is a set which contains all distinct components of b, and +/v/⍳ is a minimum. For example, if c = (C, a, n, a, d, a), then (σ/c)/c = (C, a, n, d) is a list of the distinct letters in c in order of occurrence. Clearly (σ/b)/b = b if and only if b is a set.

The backward set selector τ/b

is defined analogously

(e.g., (τ/c)/c =

1.11 The generalized matrix product

The ordinary matrix product of matrices X and Y is commonly denoted by XY and defined as follows:

Z ← XY ↔

Z j i =

Xki

×

Yjk,

Xki

×

Yjk, |

|

i = 1, 2, …, μ(X) j = 1, 2, …, ν(Y). |

(XY)j i = +/(X i × Yj).

This formulation emphasizes the fact that

matrix multiplication incorporates two elementary operations

(+, ×) and suggests that they be displayed explicitly.

The ordinary matrix product will therefore be written as

X  Y.

Y.

More generally, if  1

and

1

and  2

are any two operators

(whose domains include the relevant operands),

then the generalized matrix product

2

are any two operators

(whose domains include the relevant operands),

then the generalized matrix product

is defined as follows:

is defined as follows:

( |

|

i = 1, 2, …, μ(X) j = 1, 2, …, ν(Y). |

| and |

|

|

|

|

|

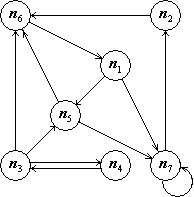

The generalized matrix product and the selection operations together provide an elegant formulation in several established areas of mathematics. A few examples will be chosen from two such areas, symbolic logic and matrix algebra.

In symbolic logic, De Morgan’s laws

(∧/u =  and

=/u =

and

=/u =  )

can be applied directly to show that

)

can be applied directly to show that

U  V

=

V

=  .

.

In matrix algebra, the notion of partitioning a matrix into submatrices of contiguous rows and columns can be generalized to an arbitrary partitioning specified by a logical vector u. The following easily verifiable identities are typical of the useful relations which result:

X  Y Y |

= | ( /X) /X)  (

( //Y) +

(u/X) //Y) +

(u/X)  (u//Y),

(u//Y),

| |||

u/(X  Y) Y) |

= | X  (u/Y), (u/Y), |

|||

u//(X  Y) Y) |

= | (u//X)  Y. Y. |

The first identity depends on the commutativity and associativity of the operator + and can clearly be generalized to other associative commutative operators, such as ∧, ∨, and ≠.

The generalized matrix product applies directly

(as does the ordinary matrix product

X  Y)

to vectors considered as row (that is, 1 × n)

or as column matrices. Thus:

Y)

to vectors considered as row (that is, 1 × n)

or as column matrices. Thus:

z ← X  y y |

↔ | z i | = |

1/(X i 1/(X i

2 y), 2 y), |

|

z ← y  X X |

↔ | z j | = |

1/(y 1/(y

2 Xj ), 2 Xj ), |

|

z ← y  x x |

↔ | z | = |

1/(y 1/(y

2 x). 2 x). |

The question of whether a vector enters a given operation

as a row vector or as a column vector is normally settled

by the requirement of conformability,

and no special indication is required.

Thus y enters as a column vector

in the first of the preceding group of definitions

and as a row vector in the last two.

The question remains, however, in the case

of the two vector operands, which may be considered

with the pre-operand either as a row

(as in the scalar product

y  x)

or as a column.

The latter case produces a matrix Z

and will be denoted by

x)

or as a column.

The latter case produces a matrix Z

and will be denoted by

Z ←

y  2 x,

2 x,

where Zji =

yi

2

xj,

μ(Z) = ν(y), and

ν(Z) = ν(x).[b]

For example, if each of the vectors indicated

is of dimension three, then

2

xj,

μ(Z) = ν(y), and

ν(Z) = ν(x).[b]

For example, if each of the vectors indicated

is of dimension three, then

|

| ||||||||||||||||

| |||||||||||||||||

1.12 Transpositions

Since the generalized matrix product is defined on columns of the post-operand and rows of the pre-operand, convenient description of corresponding operations on the rows of the post-operand and columns of the pre-operand demands the ability to transpose a matrix B, that is, to specify a matrix C such that Ci j = Bji. In ordinary matrix algebra this type of transposition suffices, but in more general work transpositions about either diagonal and about the horizontal and the vertical are also useful. Each of these transpositions of a matrix B is denoted by a superior arrow whose inclination indicates the axis of the transposition. Thus:

C ←  |

Ci j = Bj i |  |

i = 1, 2, …, μ(B) j = 1, 2, …, ν(B) |

||

C ←  |  | ||||

C ←  |  | ||||

C ←  |  |

For a vector x, either  or

or  will denote reversal of the order

of the components.

For ordinary matrix transposition (that is,

will denote reversal of the order

of the components.

For ordinary matrix transposition (that is,  ),

the commonly used notation

),

the commonly used notation  will also be employed.

will also be employed.

Since transpositions can effect any one or more

of three independent alternatives

(i.e., interchange of row and column indices

or reversal of order of row or of column indices),

repeated transpositions can produce

eight distinct configurations.

There are therefore seven distinct

transformations possible;

all can be generated by any pair

of transpositions having nonperpendicular

axes.[c]

1.13 Special logical matrices

Certain of the special logical vectors introduced in Sec. 1.7 have useful analogs in logical matrices. Dimensions will again be indicated in parentheses (with the column dimension first) and may be elided whenever the dimension is determined by context. If not otherwise specified, a matrix is assumed to be square.

Cases of obvious interest are the full matrix

E(m × n),

defined by  (m × n) = 0,

and the identity matrix

I(m × n),

defined by Iji

= (i = j).

More generally, superdiagonal matrices

kI(m × n)

are defined such that

kIji(m × n)

= (j = i + k), for k ≥ 0.

Clearly 0I =

I.

Moreover, for square matrices,

hI

(m × n) = 0,

and the identity matrix

I(m × n),

defined by Iji

= (i = j).

More generally, superdiagonal matrices

kI(m × n)

are defined such that

kIji(m × n)

= (j = i + k), for k ≥ 0.

Clearly 0I =

I.

Moreover, for square matrices,

hI

kI =

(h + k)I.

kI =

(h + k)I.

Four triangular matrices will be defined, the geometrical symbols employed for each indicating the (right-angled isosceles) triangular area of the m × n rectangular matrix which is occupied by ones. Thus

C ←  (m × n) (m × n) |

↔ | C j i |  |

= (i + j ≤ min(m, n)) | for i = 1, 2, …, m and j = 1, 2, …, n. |

|

C ←  (m × n) (m × n) |

↔ |  | ||||

C ←  (m × n) (m × n) |

↔ |  | ||||

C ←  (m × n) (m × n) |

↔ |  |

The use of the matrices E and

I

will be illustrated briefly.

The relation u  v =

v =

v)

v)

U  V

= (2E) |0

(U

V

= (2E) |0

(U  V);

V);

the trace of a square numerical matrix X

may be expressed as t =

+/I/X.

The triangular matrices are employed in the succeeding section.

1.14 Polynomials and positional number systems

Any positional representation of a number

n in a base b number system

can be considered as a numerical vector x

whose base b value is the quantity

n = w

x,

where the weighting vector w

is defined by w =

(bν(x)–1,

bν(x)–2, …

b2, b1, 1).

More generally, x may represent

a number in a mixed-radix system in which

the successive radices (from high to low order)

are the successive components of a

radix vector y.

x,

where the weighting vector w

is defined by w =

(bν(x)–1,

bν(x)–2, …

b2, b1, 1).

More generally, x may represent

a number in a mixed-radix system in which

the successive radices (from high to low order)

are the successive components of a

radix vector y.

The base y value of

x is a scalar denoted by

y ⊥ x

and defined as the scalar product

y ⊥ x

= w  x,

where w =

x,

where w =

y

is the weighting vector.

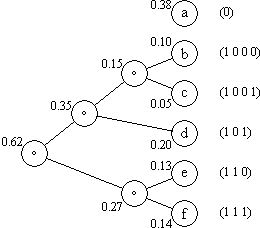

For example, if y = (7, 24, 60, 60)

is the radix vector for the common temporal system

of units,

and if x = (0, 2, 1, 18)

represents elapsed time in days, hours, minutes, and seconds,

then

y

is the weighting vector.

For example, if y = (7, 24, 60, 60)

is the radix vector for the common temporal system

of units,

and if x = (0, 2, 1, 18)

represents elapsed time in days, hours, minutes, and seconds,

then

t = w

x =

(86400, 3600, 60, 1)

x =

(86400, 3600, 60, 1)  (0, 2, 1, 18) = 7278

(0, 2, 1, 18) = 7278

is the elapsed time in seconds, and the weighting vector w is obtained as the product

y = y = |

|

0 1 1 1 |  |

|

|

7 |  |

= |  |

×/(24, 60, 60) |  |

= |  |

86400 |  | |

| 0 0 1 1 | 24 | ×/(60, 60) | 3600 | |||||||||||||

| 0 0 0 1 | 60 | ×/(60) | 60 | |||||||||||||

| 0 0 0 0 | 60 | ×/∊(0) | 1 |

If b is any integer, then the value of x in the fixed base b is denoted by (b∊) ⊥ x. For example, (2∊) ⊥ ⍺2(5) = 24. More generally, if y is any real number, then (y∊) ⊥ x is clearly a polynomial in y with coefficients x1, x2, … xν, that is,

(y∊) ⊥ x = x1 yν(x)–1 + … + xν–1 y + xν .

Writing the definition of y ⊥ x in the form

y ⊥ x

= (

y)

y)  x

x

exhibits the fact that the operation ⊥ is of the double operator type. Its use in the generalized matrix product therefore requires no secondary scan operator. This will be indicated by a null placed over the symbol ⊥. Thus

Z ← X

Y ↔

Z j i =

X i ⊥

Y j.

Y ↔

Z j i =

X i ⊥

Y j.

For example, (y∊)

X

represents a set of polynomials in y

with coefficients

X1,

X2, …,

Xν, and

Y

X

represents a set of polynomials in y

with coefficients

X1,

X2, …,

Xν, and

Y  x

represents a set of evaluations

of the vector x in a set of bases

Y1,

Y2, …,

Yμ.

x

represents a set of evaluations

of the vector x in a set of bases

Y1,

Y2, …,

Yμ.

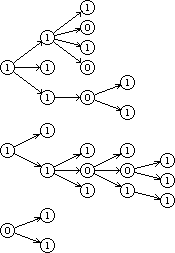

1.15 Set operations

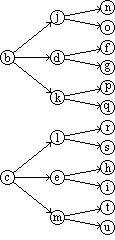

In conventional treatments, such as Jacobson (1951) or Birkhoff and MacLane (1941), a set is defined as an unordered collection of distinct elements. A calculus of sets is then based on such elementary relations as set membership and on such elementary operations as set intersection and set union, none of which imply or depend on an ordering among members of a set. In the present context it is more fruitful to develop a calculus of ordered sets.

A vector whose components are all distinct has been called (Sec. 1.10) an ordered set and (since no other types are to be considered) will hereafter be called a set. In order to provide a closed system, all of the “set operations” will, in fact, be defined on vectors. However, the operations will, in the special case of sets, be analogous to classical set operations. The following vectors, the first four of which are sets, will be used for illustration throughout.

| t = (t, e, a) | |

| a = (a, t, e) | |

| s = (s, a, t, e, d) | |

| d = (d, u, s, k) | |

| n = (n, o, n, s, e, t) | |

| r = (r, e, d, u, n, d, a, n, t) |

A variable z is a member of a vector x if z = xi for some i. Membership is denoted by z ε x. A vector x includes a vector y (denoted by either x ⊇ y or y ⊆ x) if each element yi is a member of x. If both x ⊇ y and x ⊆ y, then x and y are said to be similar. Similarity of x and y is denoted by x ≡ y. For example, t ⊆ s, t ⊆ r, t ⊆ a, a ⊆ t, t ≡ a, and t ≢ r. If x ⊆ y and x ≢ y, then x is strictly included in y. Strict inclusion is denoted by x ⊂ y.

The characteristic vector of x on y is a logical vector denoted by ∊yx, and defined as follows:

u = ∊yx ↔ ν(u) = ν(y), and uj = (yj ε x).

For example, ∊st = (0, 1, 1, 1, 0), ∊ts = (1, 1, 1), ∊sd = (1, 0, 0, 0, 1), ∊ds = (1, 0, 1, 0), and ∊nr = (1, 0, 1, 0, 1, 1).

The intersection of y with x is denoted by y ∩ x, and defined as follows:

y ∩ x = ∊yx/y.

For example, s ∩ d = (s, d), d ∩ s = (d, s), s ∩ r = (a, t, e, d), and r ∩ s = (e, d, d, a, t). Clearly, x ∩ y ≡ y ∩ x, although x ∩ y is not, in general, equal to y ∩ x, since the components may occur in a different order and may be repeated a different number of times. The vector x ∩ y is said to be ordered on x. Thus a is ordered on s. If x and y contain no common elements (that is, (x ∩ y) = ∊(0)), they are said to be disjoint.

The set difference of y and x is denoted by y ∆ x and is defined as follows:

y ∆ x =

yx/y.

yx/y.

Hence y ∆ x is obtained

from y by suppressing those components

which belong to x.

For example,

st

= (1, 0, 0, 0, 1) and

s ∆ t = (s, d).

Moreover,

st

= (1, 0, 0, 0, 1) and

s ∆ t = (s, d).

Moreover,

ts

= (0, 0, 0) and

t ∆ s = ∊(0).

ts

= (0, 0, 0) and

t ∆ s = ∊(0).

The union of y and x

is denoted by y ∪ x

and defined as follows:[d]

y ∪ x =

y  (x ∆ y).

For example, s ∪ d =

(s, a, t, e, d, u, k),

d ∪ s =

(d, u, s, k, a, t, e),

s ∪ a =

s ∪ t = s,

and n ∪ t =

(n, o, n, s, e, t, a).

In general,

x ∪ y ≡

y ∪ x, and

x ≡

(x ∩ y) ∪

(x ∆ y).

If x and y

are disjoint,

their union is equivalent to their catenation,

that is, x ∩ y

= ∊(0) implies that

x ∪ y =

x

(x ∆ y).

For example, s ∪ d =

(s, a, t, e, d, u, k),

d ∪ s =

(d, u, s, k, a, t, e),

s ∪ a =

s ∪ t = s,

and n ∪ t =

(n, o, n, s, e, t, a).

In general,

x ∪ y ≡

y ∪ x, and

x ≡

(x ∩ y) ∪

(x ∆ y).

If x and y

are disjoint,

their union is equivalent to their catenation,

that is, x ∩ y

= ∊(0) implies that

x ∪ y =

x  y.

y.

In the foregoing development, the concepts of inclusion and similarity are equivalent to the concepts of inclusion and equality in the conventional treatment of (unordered) sets. The remaining definitions of intersection, difference, and union differ from the usual formulation in that the result of any of these operations on a pair of ordered sets is again an ordered set. With respect to similarity, these operations satisfy the same identities as do the analogous conventional set operations on unordered sets with respect to equality.

The forward selection σ/b and the backward selection τ/b defined in Sec. 1.10 can both be used to reduce any vector b to a similar set, that is,

(σ/b)/b ≡ (τ/b)/b ≡ b.

Moreover, if f = (σ/x)/b, g = (σ/y)/y, and h = (σ/z)/z, then x = y ∩ z implies that f = g ∩ h, and x = y ∪ z implies that f = g ∪ h.

The unit vector ∊ j(n) will be recognized as a special case of the characteristic vector ∊yx in which x consists of the single component j, and y = ⍳h(n), where h is the index origin in use. In fact, the notation ∊ jih can be used to make explicit the index origin h assumed for ∊ j.

If z is any vector of dimension two such that z1 ε x and z2 ε y, then z is said to belong to the Cartesian product of x and y. Thus if x = (a, b, c) and y = (0, 1), the rows of the matrix

| A = |  |

a 0 |  | |

| a 1 | ||||

| b 0 | ||||

| b 1 | ||||

| c 0 | ||||

| c 1 |

are a complete list of the vectors z

belonging to the product set of x

and y.

The matrix A will be called

the Cartesian product of x and

y and will be denoted

x  y.

y.

The foregoing definition by example will be formalized

in a more general way that admits the Cartesian product

of several vectors (that is,

u  v

v  …

…  y)

which need not be sets, and which specifies

a unique ordering of the rows of the resulting matrix.

Consider a family of vectors

x1, x2,

…, xs

of dimensions d1, d2,

…, ds. Then

y)

which need not be sets, and which specifies

a unique ordering of the rows of the resulting matrix.

Consider a family of vectors

x1, x2,

…, xs

of dimensions d1, d2,

…, ds. Then

A ←

x1  x2

x2  …

…  xs

↔ A1 + d ⊥ (k – ∊)

= (x1k1,

x2k2, …,

xsks),

xs

↔ A1 + d ⊥ (k – ∊)

= (x1k1,

x2k2, …,

xsks),

for all vectors k such that 1 ≤ ki ≤ di. Clearly, ν(A) = s, and μ(A) = ×/d. As illustrated by Table 1.11, the rows of the Cartesian product A are not distinct if any one of the vectors xi is not a set.

|

|

Table 1.11 The Cartesian product

A = x1

x2

x2

x3

x3

If the vectors xi

are all of the the same dimension,

they may be considered as the columns of a matrix X,

that is, Xi = xi.

The product x1

x2

x2

…

…

xs =

X1

xs =

X1

X2

X2

…

…

Xs

may then be defined by

Xs

may then be defined by  /X,

or alternatively by

/X,

or alternatively by  //Y,

where Y is the transpose of X.

For example, if

//Y,

where Y is the transpose of X.

For example, if

X =

⍳0(2)

∊(3) = ∊(3) = |

|

0 0 0 |  |

, |

| 1 1 1 |

then  /X

is the matrix of arguments of the truth table for three variables.

/X

is the matrix of arguments of the truth table for three variables.

1.16 Ranking

The rank or index of an element

c ε b

is called the b index of c

and is defined as the smallest value of i

such that c = bi.

To establish a closed system, the b

index of any element

a  b

will be defined as the null characer ∘.

The b index of any element c

will be denoted by b ⍳ c;

if necessary, the index origin in use will be indicated

by a subscript appended to the operator ⍳.

Thus, if b = (a, p, e),

b ⍳0 p = 1, and

b ⍳1 p = 2.

b

will be defined as the null characer ∘.

The b index of any element c

will be denoted by b ⍳ c;

if necessary, the index origin in use will be indicated

by a subscript appended to the operator ⍳.

Thus, if b = (a, p, e),

b ⍳0 p = 1, and

b ⍳1 p = 2.

The b index of a vector c is defined as follows:

k ← b ⍳ c ↔ k i = b ⍳ c i.

The extension to matrices may be either row by row or (as indicated by a doubled operator symbol ⍳⍳) column by column, as follows:

| J ← B ⍳ C | ↔ | J i ← B i ⍳ C i, | |

| K ← B ⍳⍳ C | ↔ | Kj ← Bj ⍳ Cj. |

Use of the ranking operator in a matrix product requires no secondary scan and is therefore indicated by a superior null symbol. Moreover, since the result must be limited to a two-dimensional array (matrix), either the pre- or post-operand is required to be a vector. Hence

J ← B  c c |

↔ | J i ← B i ⍳ c i, | |

K ← b  C C |

↔ | Kj ← bj ⍳ Cj. |

The first of these ranks the components of c with respect to each of a set of vectors B1, B2, …, Bμ, whereas the second ranks each of the vectors C1, C2, …, Cν with respect to the fixed vector b.

The use of the ranking operation can be illustrated as follows. Consider the vector b = (a, b, c, d, e) and the set of all 35 three-letter sequences (vectors) formed from its components. If the set is ordered lexically, and if x is the jth member of the set (counting from zero), then

j = (ν(b)∊) ⊥ (b ⍳0 x).

For example, if x = (c, a, b), then

(b ⍳0 x) =

(2, 0, 1), and j = 51.

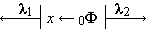

1.17 Mapping and permutation

Reordering operations

The selection operations employed thus far do not permit convenient reorderings of the components. This is provided by the mapping operation defined as follows:[e]

c ← a k ↔ c i = a ki

For example, if a = (a, b, …, z) and k = (6, 5, 4), then c = (f, e, d).

The foregoing definition is meaningful only if the components of k each lie in the range of the indices of a, and it will be extended by defining a j as the null element ∘ if j does not belong to the index set of a. Formally,

| c ← a m ↔ c i = |  |

a mi | if m i ε ⍳1(ν(a)) | |||

| ∘ | if m i  ⍳1(ν(a)).

⍳1(ν(a)). |

The ability to specify an arbitrary index origin for the vector a being mapped is provided by the following alternative notation for mapping:

| c ← m ∫j a ↔ c i = |  |

a mi | if m i ε ⍳j(ν(a)) | |||

| ∘ | if m i  ⍳j(ν(a)),

⍳j(ν(a)), |

where j-origin indexing is assumed for the vector a. For example, if a is the alphabet and m = (5, ∘, ∘, 4, 27, ∘, 3), then c = m ∫0 a = (f, ∘, ∘, e, ∘, ∘, d), and (c ≠ ∘∊)/c = (f, e, d). Moreover, m ∫2 a = (d, ∘, ∘, c, z, ∘, b). Elision of j is permitted.

If a ⊆ b,

and m = b

⍳j a,

then clearly m ∫j

b = a.

If a  b,

then m ∫j b

contains (in addition to certain nulls)

those components common to b and a,

arranged in the order in which they occur in a.

In other words,

b,

then m ∫j b

contains (in addition to certain nulls)

those components common to b and a,

arranged in the order in which they occur in a.

In other words,

(m ≠ ∘∊)/(m ∫j b) = a ∩ b.

Consequently, if p, q, …, t are vectors, each contained in b, then each can be represented jointly by the vector b and a mapping vector. If, for example, b is a glossary and p, q, etc. are texts, the total storage required for b and the mapping vectors might be considerably less than for the entire set of texts.

Mapping may be shown to be associative, that is, m1 ∫i (m2 ∫j a) = (m1 ∫i m2) ∫j a. Mapping is not, in general, commutative.

Mapping is extended to matrices as follows:

| A ← M ∫h B | ↔ | Ai = M i ∫h Bi, | |

| C ← M ∫∫h B | ↔ | Cj = Mj ∫h Bj. |

Row and column mappings are associative.

A row mapping 1M

and a column mapping 2M

do not, in general, commute, but do if all rows

of 1M agree

(that is, 1M = ∊

p),

and if all columns of 2M agree

(that is, 2M = q

p),

and if all columns of 2M agree

(that is, 2M = q

∊).

The generalized matrix product is defined for the cases

M

∊).

The generalized matrix product is defined for the cases

M  A, and

M

A, and

M  a.

a.

The alternative notation (that is, c = am), which does not incorporate specification of the index origin, is particularly convenient for matrices and is extended as follows:

| A ← Bm | ↔ | Ai = Bmi, | A ← Bm | ↔ | Ai = Bmi. |

Permutations

A vector k of dimension n is called a j-origin permutation vector if k ≡ ⍳j(n). A permutation vector used to map any set of the same dimension produces a reordering of the set without either repetition or suppression of elements, that is, k ∫j a ≡ a for any set a of dimension ν(k). For example, if a = (f, 4, *, 6, z), and k = (4, 2, 5, 1, 3), then k ∫1 a = (6, 4, z, f, *).

If p is an h-origin permutation vector and q is any j-origin permutation vector of the same dimension, then q ∫j p is an h-origin permutation vector.

Since

⍳j(ν(a)) ∫j a = a,

the interval vector ⍳j(n) will also be called the j-origin identity permutation vector. If p and q are two j-origin permutation vectors of the same dimension n and if q ∫j p = ⍳j(n), then p ∫j q = ⍳j(n) also and p and q are said to be inverse permutations. If p is any j-origin permutation vector, then q = p ⍳j ⍳j is inverse to p.

The rotation operation k ↑ x is a special case of permutation.

Function mapping

A function f which defines for each element bi of a set b a unique correspondent ak in a set a is called a mapping from b to a. If f(bi) = ak, the element bi is said to map into the element ak. If the elements f(bi) exhaust the set a, the function f is set to map b onto a. If b maps onto a and the elements f(bi) are all distinct, the mapping is said to be one-to-one or biunique. In this case, ν(a) = ν(b), and there exists an inverse mapping from a to b with the same correspondences.

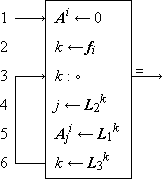

A program for performing the mapping f from b to a must therefore determine for any given element b ε b, the correspondent a ε a, such that a = f(b). Because of the convenience of operating upon integers (e.g., upon register addresses or other numeric symbols) in the automatic execution of programs, the mapping is frequently performed in three successive phases, determining in turn the following quantities:

| 1. | the index i = b ⍳ b, | |

| 2. | the index k such that ak = f(bi), | |

| 3. | the element ak. |

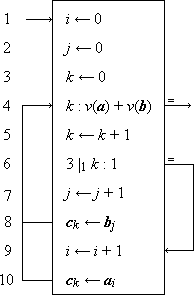

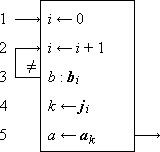

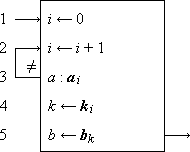

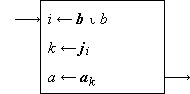

The three phases are shown in detail in Program 1.12a. The ranking is performed (steps 1-3) by scanning the set b in order and comparing each element with the argument b. The second phase is a permutation of the integers 1, 2, …, ν(b), which may be described by a permutation vector j, such that ji = k. The selection of ji (step 4) then defines k, which, in turn, determines the selection of ak on step 5.

|

Example 1.2. If

are, respectively, a set of English words and a set of German correspondents (both in alphabetical order), and if the function required is the mapping of a given English word b into its German equivalent a according to the dictionary correspondences:

then j = (1, 3, 5, 2, 4). If b = “night”, then i = 5, ji = 4, and a = a4 = Nacht. |