The Evolution of APL

Adin D. Falkoff

Kenneth E. Iverson

IBM Corporation Research Division

This paper is a discussion of the evolution of the APL language, and it treats implementations and applications only to the extent that they appear to have exercised a major influence on that evolution. Other sources of historical information are cited in References 1-3; in particular, The Design of APL [1] provides supplementary detail on the reasons behind many of the design decisions made in the development of the language. Readers requiring background on the current definition of the language should consult APL Language [4].

Although we have attempted to confirm our recollections by reference to written documents and to the memories of our colleagues, this remains a personal view which the reader should perhaps supplement by consulting the references provided. In particular, much information about individual contributions will be found in the Appendix to The Design of APL [1], and in the Acknowledgments in A Programming Language [10] and in APL\360 User’s Manual [23]. Because Reference 23 may no longer be readily available, the acknowledgments from it are reprinted in Appendix A.

McDonnell’s recent paper on the development of the notation for the circular functions [5] shows that the detailed evolution of any one facet of the language can be both interesting and illuminating. Too much detail in the present paper would, however, tend to obscure the main points, and we have therefore limited ourselves to one such example. We can only hope that other contributors will publish their views on the detailed developments of other facets of the language, and on the development of various applications of it.

The development of the language was begun by Iverson as a tool for describing and analyzing various topics in data processing, for use in teaching classes, and in writing a book, Automatic Data Processing [6], undertaken together with Frederick P. Brooks, Jr., then a graduate student at Harvard. Because the work began as incidental to other work, it is difficult to pinpoint the beginning, but it was probably early 1956; the first explicit use of the language to provide communication between the designers and programmers of a complex system occurred during a leave from Harvard spent with the management consulting firm of McKinsey and Company in 1957. Even after others were drawn into the development of the language, this development remained largely incidental to the work in which it was used. For example, Falkoff was first attracted to it (shortly after Iverson joined IBM in 1960) by its use as a tool in his work in parallel search memories [7], and in 1964 we began to plan an implementation of the language to enhance its utility as a design tool, work which came to fruition when we were joined by Lawrence M. Breed in 1965.

The most important influences in the early phase appear to be Iverson’s background in mathematics, his thesis work in the machine solutions of linear differential equations [8] for an economic input-output model proposed by Professor Wassily Leontief (who, with Professor Howard Aiken, served as thesis adviser), and Professor Aiken’s interest in the newly developing field of commercial applications of computers. Falkoff brought to the work a background in engineering and technical development, with experience in a number of disciplines, which had left him convinced of the overriding importance of simplicity, particularly in a field as subject to complication as data processing.

Although the evolution has been continuous, it will be helpful to distinguish four phases according to the major use or preoccupation of the period: academic use (to 1960), machine description (1961-1963), implementation (1964-1968), and systems (after 1968).

1. Academic Use

The machine programming required in Iverson’s thesis work was directed at the development of a set of subroutines designed to permit convenient experimentation with a variety of mathematical methods. This implementation experience led to an emphasis on implementable language constructs, and to an understanding of the role of the representation of data.

The mathematical background shows itself in a variety of ways, notably:

1. In the use of functions with explicit arguments and explicit results; even the relations (< ≤ = ≥ > ≠) are treated as such functions.

2. In the use of logical functions and logical variables. For example, the compression function (denoted by /) uses as one argument a logical vector which is, in effect, the characteristic vector of the subset selected by compression.

3. In the use of concepts and terminology from tensor analysis, as in inner product and outer product and in the use of rank for the “dimensionality” of an array, and in the treatment of a scalar as an array of rank zero.

4. In the emphasis on generality. For example, the generalizations of summation (by f/), of inner product (by f.g), and of outer product (by ∘.f) extended the utility of these functions far beyond their original area of application.

5. In the emphasis on identities (already evident in [9]) which makes the language more useful for analytic purposes, and which leads to a uniform treatment of special cases as, for example, the definition of the reduction of an empty vector, first given in A Programming Language [10].
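To make items 2, 4 and 5 concrete, the following is a minimal Python sketch (not APL itself) of compression, of reduction generalized to any dyadic function, and of outer product. The helper names `compress`, `reduce_vector` and `outer`, and the small `IDENTITY` table, are assumptions made for illustration. Reduction here groups from the right, as later APL does; for the associative functions used below the grouping makes no difference.

```python
import operator
from functools import reduce

# Illustrative (not complete) table of identity elements: the reduction
# of an empty vector yields the identity of the function being applied.
IDENTITY = {operator.add: 0, operator.mul: 1, max: float("-inf"), min: float("inf")}

def compress(u, v):
    """u/v: the items of v at the positions where the logical vector u is 1."""
    return [x for flag, x in zip(u, v) if flag]

def reduce_vector(f, v):
    """f/v for any dyadic f, grouping from the right: f/a b c is a f (b f c)."""
    if not v:
        return IDENTITY[f]  # uniform treatment of the empty case
    return reduce(lambda acc, x: f(x, acc), reversed(v[:-1]), v[-1])

def outer(f, a, b):
    """a ∘.f b: the table of f applied to every pair of items."""
    return [[f(x, y) for y in b] for x in a]

print(reduce_vector(operator.add, compress([1, 0, 1, 0, 1], [1, 2, 3, 4, 5])))  # 9
print(reduce_vector(operator.mul, []))          # 1, the identity of ×
print(outer(operator.mul, [1, 2], [3, 4, 5]))   # [[3, 4, 5], [6, 8, 10]]
```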

In 1954 Harvard University published an announcement [11] of a new graduate program in Automatic Data Processing organized by Professor Aiken. (The program was also reported in a conference on computer education [12].) Iverson was one of the new faculty appointed to prosecute the program; working under the guidance of Professor Aiken in the development of new courses provided a stimulus to his interest in developing notation, and the diversity of interests embraced by the program promoted a broad view of applications.

The state of the language at the end of the academic period is best represented by the presentation in A Programming Language [10], submitted for publication in early 1961.

The evolution in the latter part of the period is best seen by comparing References 9 and 10. This comparison shows that reduction and inner and outer product were all introduced in that period, although not then recognized as a class later called operators. It also shows that specification was originally (in Reference 9) denoted by placing the specified name at the right, as in p+q→z . The arguments (due in part to F.P. Brooks, Jr.) which led to the present form (z←p+q) were that it better conformed to the mathematical form z=p+q , and that in reading a program, any backward reference to determine how a given variable was specified would be facilitated if the specified variables were aligned at the left margin.

What this comparison does not show is the removal of a number of special comparison functions (such as the comparison of a vector with each row of a matrix) which were seen to be unnecessary when the power of the inner product began to be appreciated, as in the expression m∧.=v . This removal provides one example of the simplification of the language produced by generalizations.
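How a single inner product subsumes such special comparison functions can be sketched in Python. Here `inner` is a hypothetical helper that takes the two functions of f.g as arguments, and the integers 0 and 1 stand for APL's logical values; m∧.=v then yields 1 exactly for the rows of m that equal v, while the same helper with + and × gives the ordinary matrix-vector product.

```python
import operator

def inner(f, g, m, v):
    """m f.g v for a matrix m (a list of non-empty rows) and a vector v:
    combine each row with v item by item using g, then reduce by f from the right."""
    result = []
    for row in m:
        items = [g(x, y) for x, y in zip(row, v)]
        acc = items[-1]
        for x in reversed(items[:-1]):
            acc = f(x, acc)
        result.append(acc)
    return result

def logical_and(x, y):
    return int(bool(x) and bool(y))

def equal(x, y):
    return int(x == y)

m = [[1, 2, 3],
     [4, 5, 6],
     [1, 2, 3]]
v = [1, 2, 3]
print(inner(logical_and, equal, m, v))          # [1, 0, 1]: rows 1 and 3 match v
print(inner(operator.add, operator.mul, m, v))  # [14, 32, 14], the product +.×
```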

2. Machine Description

The machine description phase was marked by the complete or partial description of a number of computer systems. The first use of the language to describe a complete computing system was begun in early 1962 when Falkoff discussed with Dr. W.C. Carter his work in the standardization of the instruction set for the machines that were to become the IBM System/360 family. Falkoff agreed to undertake a formal description of the machine language, largely as a vehicle for demonstrating how parallel processes could be rigorously represented. He was later joined in this work by Iverson, on Iverson’s return from a short leave at Harvard, and still later by E.H. Sussenguth. This work was published as “A Formal Description of System/360” [13].

This phase was also marked by a consolidation and regularization of many aspects which had little to do with machine description. For example, the cumbersome definition of maximum and minimum (denoted in Reference 10 by u⌈v and u⌊v and equivalent to what would now be written as ⌈/u/v and ⌊/u/v) was replaced, at the suggestion of Herbert Hellerman, by the present simple scalar functions. This simplification was deemed practical because of our increased understanding of the potential of reduction and inner and outer product.
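A minimal Python sketch of the simplified scheme follows. It assumes a hypothetical helper `scalar_dyadic` that extends a two-argument function item by item, pairing a single number with every item of a vector; ⌈ and ⌊ then become ordinary scalar functions, and the old u⌈v is recovered as the reduction ⌈/u/v. The names `maximum`, `minimum`, `compress` and `reduce_with` are likewise illustrative.

```python
def scalar_dyadic(f):
    """Extend a dyadic function on numbers to vectors, item by item,
    with a single number paired with every item of a vector."""
    def apply(a, b):
        a_vec, b_vec = isinstance(a, list), isinstance(b, list)
        if a_vec and b_vec:
            if len(a) != len(b):
                raise ValueError("LENGTH ERROR")
            return [f(x, y) for x, y in zip(a, b)]
        if a_vec:
            return [f(x, b) for x in a]
        if b_vec:
            return [f(a, y) for y in b]
        return f(a, b)
    return apply

maximum = scalar_dyadic(max)  # dyadic ⌈
minimum = scalar_dyadic(min)  # dyadic ⌊

def compress(u, v):
    """u/v: keep the items of v where u is 1."""
    return [x for flag, x in zip(u, v) if flag]

def reduce_with(f, v):
    """f/v for a non-empty v, grouping from the right."""
    acc = v[-1]
    for x in reversed(v[:-1]):
        acc = f(x, acc)
    return acc

print(reduce_with(max, compress([1, 0, 1, 1], [7, 9, 2, 5])))  # ⌈/u/v: 7
print(maximum([3, 8, 1], [5, 2, 4]))  # 3 8 1⌈5 2 4: [5, 8, 4]
print(minimum(3, [5, 2, 4]))          # 3⌊5 2 4: [3, 2, 3]
```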

The best picture of the evolution in this period is given by a comparison of A Programming Language [10] on the one hand, and “A Formal Description of System/360” [13] and “Formalism in Programming Languages” [14] on the other. Using explicit page references to Reference 10, we will now give some further examples of regularization during this period:

1. The elimination of embracing symbols (such as |x| for absolute value, ⌊x⌋ for floor, and ⌈x⌉ for ceiling) and replacement by the leading symbol only, thus unifying the syntax for monadic functions.

2. The conscious use of a single function symbol to represent both a monadic and dyadic function (still referred to in Reference 10 as unary and binary).

3. The adoption of multicharacter names which, because of the failure (p. 11) to insist on no elision of the times sign, had been permitted (p. 10) only with a special indicator.

4. The rigorous adoption of a right-to-left order of execution which, although stated (p. 8), had been violated by the unconscious application of the familiar precedence rules of mathematics. Reasons for this choice are presented in Elementary Functions [15], in Berry’s APL\360 Primer [16], and in The Design of APL [1].

5. The concomitant definition of reduction based on a right-to-left order of execution as opposed to the opposite convention defined on p. 16.

6. Elimination of the requirement for parentheses surrounding an expression involving a relation (p. 11). An example of the use without parentheses occurs near the bottom of p. 241 of Reference 13.

7. The elimination of implicit specification of a variable (that is, the specification of some function of it, as in the expression ⊥s←2 on p. 81), and its replacement by an explicit inverse function (⊤ in the cited example).
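Items 4, 5 and 7 can be illustrated with a short Python sketch. It assumes an expression is given as a flat list of numbers and function symbols with no parentheses; `evaluate`, `reduce_by`, `decode` and `encode` are hypothetical names. Strict right-to-left execution makes -/1 2 3 4 an alternating sum, and ⊤ supplies the explicit inverse of ⊥ that replaced implicit specification.

```python
import operator

FUNCTIONS = {"+": operator.add, "-": operator.sub, "×": operator.mul}

def evaluate(tokens):
    """Evaluate a flat expression such as [2, '×', 3, '+', 4] strictly right
    to left: each function's right argument is the value of everything to its right."""
    value = tokens[-1]
    for i in range(len(tokens) - 2, 0, -2):
        value = FUNCTIONS[tokens[i]](tokens[i - 1], value)
    return value

def reduce_by(symbol, v):
    """symbol/v: place the function between the items and evaluate right to left."""
    tokens = []
    for x in v:
        tokens += [x, symbol]
    return evaluate(tokens[:-1])

def decode(radix, digits):
    """radix⊥digits: the number whose mixed-radix digits are given."""
    value = 0
    for r, d in zip(radix, digits):
        value = value * r + d
    return value

def encode(radix, n):
    """radix⊤n: the digits of n in the mixed radix, the inverse of decode."""
    digits = []
    for r in reversed(radix):
        n, d = divmod(n, r)
        digits.append(d)
    return digits[::-1]

print(evaluate([2, "×", 3, "+", 4]))    # 14, not the 10 of the precedence rules
print(reduce_by("-", [1, 2, 3, 4]))     # -2, that is 1-(2-(3-4))
print(decode([24, 60, 60], [1, 2, 3]))  # 3723
print(encode([24, 60, 60], 3723))       # [1, 2, 3]
print(encode([2, 2, 2], 2))             # [0, 1, 0]: a three-digit s whose base-2 value is 2
```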

Perhaps the most important developments of this period were in the use of a collection of concurrent autonomous programs to describe a system, and the formalization of shared variables as the means of communication among the programs. Again, comparisons may be made between the system of programs of Reference 13, and the more informal use of concurrent programs introduced on p. 88 of Reference 10.

It is interesting to note that the need for a random function (denoted by the question mark) was first felt in describing the operation of the computer itself. The architects of the IBM System/360 wished to leave to the discretion of the designers of the individual machines of the 360 family the decision as to what was to be found in certain registers after the occurrence of certain errors, and this was done by stating that the result was to be random. Recognizing more general use for the function than the generation of random logical vectors, we subsequently defined the monadic question mark function as a scalar function whose argument specified the population from which the random elements were to be chosen.
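As a sketch of ? as a scalar function, the following Python uses the standard `random` module, which is not the generator used in APL\360, and assumes index origin 1; `roll` is a hypothetical name. Each item of the argument names the size of the population for the corresponding result item, so random logical vectors become a special case.

```python
import random

def roll(n):
    """Monadic ?n as a scalar function, assuming index origin 1: each item k
    of n is replaced by an integer chosen at random from 1 through k."""
    if isinstance(n, list):
        return [roll(k) for k in n]
    return random.randint(1, n)

random.seed(7)  # fixed seed, so repeated runs give the same sequence
print(roll(6))                         # one throw of a die
print(roll([6, 6, 6]))                 # three independent throws
print([x - 1 for x in roll([2] * 8)])  # a random logical vector
```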

3. Implementation

In 1964 a number of factors conspired to turn our attention seriously to the problem of implementation. One was the fact that the language was by now sufficiently well-defined to give us some confidence in its suitability for implementation. The second was the interest of Mr. John L. Lawrence, who, after managing the publication of our description of System/360, asked for our consultation in utilizing the language as a tool in his new responsibility (with Science Research Associates) for developing the use of computers in education. We quickly agreed with Mr. Lawrence on the necessity for a machine implementation in this work. The third was the interest of our then manager, Dr. Herbert Hellerman, who, after initiating some implementation work which did not see completion, himself undertook an implementation of an array-based language which he reported in the Communications of the ACM [17]. Although this work was limited in certain important respects, it did prove useful as a teaching tool and tended to confirm the feasibility of implementation.

Our first step was to define a character set for APL. Influenced by Dr. Hellerman’s interest in time-sharing systems, we decided to base the design on an 88-character set for the IBM 1050 terminal, which utilized the easily changed Selectric® typing element. The design of this character set exercised a surprising degree of influence on the development of the language.

As a practical matter it was clear that we would have to accept a linearization of the language (with no superscripts or subscripts) as well as a strict limit on the size of the primary character set. Although we expected these limitations to have a deleterious effect, and at first found unpleasant some of the linearity forced upon us, we now feel that the changes were beneficial, and that many led to important generalizations. For example:

1. On linearizing indexing we realized that the sub- and superscript form had inhibited the use of arrays of rank greater than 2, and had also inhibited the use of several levels of indexing; both inhibitions were relieved by the linear form a[i;j;k] .

2. The linearization of the inner and outer product notation (from earlier forms in which the function symbols were stacked above and below one another between m and n, to m+.×n and m∘.×n ) led eventually to the recognition of the operator (which was now represented by an explicit symbol, the period) as a separate and important component of the language.

3. Linearization led to a regularization of many functions of two arguments (such as n⍺j for ⍺ʲ(n) and a*b for aᵇ) and to the redefinition of certain functions of two or three arguments so as to eliminate one of the arguments. For example, ⍳ʲ(n) was replaced by ⍳n , with the simple expression j+⍳n replacing the original definition. Moreover, the simple form ⍳n led to the recognition that j≥⍳n could replace n⍺j (for j a scalar) and that j∘.≥⍳n could generalize n⍺j in a useful manner; as a result the functions ⍺ and ⍵ were eventually withdrawn.

4. The limitation of the character set led to a more systematic exploitation of the notion of ambiguous valence, the representation of both a monadic and a dyadic function by the same symbol.

5. The limitation of the character set led to the replacement of the two functions for the number of rows and the number of columns of an array, by the single function (denoted by ⍴) which gave the dimension vector of the array. This provided the necessary extension to arrays of arbitrary rank, and led to the simple expression ⍴⍴a for the rank of a . The resulting notion of the dimension vector also led to the definition of the dyadic reshape function d⍴x .

6. The limitation to 88 primary characters led to the important notion of composite characters formed by striking one of the basic characters over another. This scheme has provided a supply of easily read and easily written symbols which were needed as the language developed further. For example, the quad, overbar, and circle were included not for specific purposes but because they could be used to overstrike many characters. The overbar by itself also proved valuable for the representation of negative numbers, and the circle proved convenient in carrying out the idea, proposed by E.E. McDonnell, of representing the entire family of (monadic) circular functions by a single dyadic function.

7. The use of multiple fonts had to be reexamined, and this led to the realization that certain functions were defined not in terms of the value of the argument alone, but also in terms of the form of the name of the argument. Such dependence on the forms of names was removed. We did, however, include characters which could print above and below alphabetics to provide for possible font distinctions. The original typing element included the present flat underscore, a saw-tooth one (the pralltriller, as shown, for example, in Webster’s Second), and a hyphen. In practice, we found the two underscores somewhat difficult to distinguish, and the hyphen very difficult to distinguish from the minus, from which it differed only in length. We therefore made the rather costly change of two characters, substituting the present delta and del (inverted delta) for the pralltriller and the hyphen.
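Items 3 and 5 can be sketched in Python with nested lists standing in for arrays. The sketch assumes index origin 1 (under which j≥⍳n is the prefix vector) and uses the hypothetical helpers `iota`, `prefix`, `shape` and `reshape`; `reshape` handles only non-empty shapes with no zero dimension.

```python
from itertools import cycle, islice
from math import prod

def iota(n):
    """⍳n, assuming index origin 1: the vector 1 2 ... n."""
    return list(range(1, n + 1))

def prefix(j, n):
    """j≥⍳n: j ones followed by n-j zeros, the old prefix vector."""
    return [int(j >= i) for i in iota(n)]

def shape(a):
    """⍴a for a rectangular nested list; a scalar has an empty shape."""
    dims = []
    while isinstance(a, list):
        dims.append(len(a))
        a = a[0] if a else None
    return dims

def reshape(d, x):
    """d⍴x: the items of x, reused cyclically, arranged in shape d."""
    items = list(islice(cycle(x), prod(d)))
    for n in reversed(d[1:]):
        items = [items[i:i + n] for i in range(0, len(items), n)]
    return items

print(prefix(3, 5))                     # [1, 1, 1, 0, 0]
print([prefix(j, 4) for j in iota(4)])  # (⍳4)∘.≥⍳4, a lower-triangular table
a = reshape([2, 3, 4], iota(24))
print(shape(a))                         # [2, 3, 4]
print(shape(shape(a)))                  # ⍴⍴a: [3], the rank
print(reshape([2, 3], [1, 2]))          # [[1, 2, 1], [2, 1, 2]]
```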

In the placement of the character set on the keyboard we were subject to a number of constraints imposed by the two forms of the IBM 2741 terminal (which differed in the encoding from keyboard-position to element-position), but were able to devise a grouping of symbols which most users find easy to learn. One pleasant surprise has been the discovery that numbers of people who do not use APL have adopted the type element for use in mathematical typing. The first publication of the character set appears to be in Elementary Functions [15].

Implementation led to a new class of questions, including the formal definition of functions, the localization and scope of names, and the use of tolerances in comparisons and in printing output. It also led to systems questions concerning the environment and its management, including the matter of libraries and certain parameters such as index origin, printing precision, and printing width.

Two early decisions set the tone of the implementation work: (1) The implementation was to be experimental, with primary emphasis on flexibility to permit experimentation with language concepts, and with questions of execution efficiency subordinated, and (2) the language was to be compromised as little as possible by machine considerations.

These considerations led Breed and P.S. Abrams (both of whom had been attracted to our work by Reference 13) to propose and build an interpretive implementation in the summer of 1965. This was a batch system with punched-card input, using a multicharacter encoding of the primitive function symbols. It ran on the IBM 7090 computer, and we were later able to experiment with it interactively, using the typeball previously designed, by placing the interpreter under an experimental time-sharing monitor (TSM) available on a machine in a nearby IBM facility.

TSM was available to us for only a very short time, and in early 1966 we began to consider an implementation on System/360, work that started in earnest in July and culminated in a running system in the fall. The fact that this interpretive and experimental implementation also proved to be remarkably practical and efficient is a tribute to the skill of the implementers, recognized in 1973 by the award to the principals (L.M. Breed, R.H. Lathwell, and R.D. Moore) of ACM’s Grace Murray Hopper Award. The fact that many APL implementations continue to be largely interpretive may be attributed to the array character of the language which makes possible efficient interpretive execution.

We chose to treat the occurrence of a statement as an order to evaluate it, and rejected the notion of an explicit function to indicate evaluation. In order to avoid the introduction of “names” as a distinct object class, we also rejected the notion of “call by name”. The constraints imposed by this decision were eventually removed in a simple and general way by the introduction of the execute function, which served to execute its character string argument as an APL expression. The evolution of these notions is discussed at length in the section on “Execute and Format” in The Design of APL [1].

In earlier discussions with a number of colleagues, the introduction of declarations into the language was urged upon us as a requisite for implementation. We resisted this on the general basis of simplicity, but also on the basis that information in declarations would be redundant, or perhaps conflicting, in a language in which arrays are primitive. The choice of an interpretive implementation made the exclusion of declarations feasible, and this, coupled with the determination to minimize the influence of machine considerations such as the internal representations of numbers on the design of the language, led to an early decision to exclude them.

In providing a mechanism by which a user could define a new function, we wished to provide six forms in all: functions with 0, 1, or 2 explicit arguments, and functions with 0 or 1 explicit results. This led to the adoption of a header for the function definition which was, in effect, a paradigm for the way in which a function was used. For example, a function f of two arguments having an explicit result would typically be used in an expression such as z←a f b , and this was the form used for the header.

The names for arguments and results in the header were of course made local to the function definition, but at the outset no thought was given to the localization of other names. Fortunately, the design of the interpreter made it relatively easy to localize the names by adding them to the header (separated by semicolons), and this was soon done. Names so localized were strictly local to the defined function, and their scope did not extend to any other functions used within it. It was not until the spring of 1968 when Breed returned from a talk by Professor Alan Perlis on what he called “dynamic localization” that the present scheme was adopted, in which name scopes extend to functions called within a function.
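The difference between the two schemes can be modelled in Python with a stack of values per name, so that a lookup finds the most recent binding made by any active function. This is a hypothetical sketch of dynamic localization (the `Names` class and its methods are illustrative), not a description of how the APL\360 interpreter stored names.

```python
_UNSET = object()  # marks a name that is localized but not yet assigned

class Names:
    """Each name has a stack of values; a lookup always sees the most
    recent (innermost) binding, whichever active function made it."""

    def __init__(self):
        self.stacks = {}

    def assign(self, name, value):
        stack = self.stacks.setdefault(name, [])
        if not stack:
            stack.append(_UNSET)
        stack[-1] = value

    def lookup(self, name):
        stack = self.stacks.get(name)
        if not stack or stack[-1] is _UNSET:
            raise NameError(f"VALUE ERROR: {name}")  # label borrowed from APL
        return stack[-1]

    def call(self, fn, local_names):
        """Run fn with local_names shadowed until fn returns."""
        for name in local_names:
            self.stacks.setdefault(name, []).append(_UNSET)
        try:
            return fn(self)
        finally:
            for name in local_names:
                self.stacks[name].pop()

names = Names()
names.assign("x", 1)       # a global x

def g(ns):
    return ns.lookup("x")  # g uses x without localizing it

def f(ns):
    ns.assign("x", 5)      # f's own, localized x ...
    return ns.call(g, [])  # ... is visible to g when f calls it

print(names.call(f, ["x"]))  # 5
print(names.lookup("x"))     # 1: f's x vanished when f returned
print(names.call(g, []))     # 1: called directly, g sees the global
```

Under the earlier, strictly local scheme, g would have seen the global value 1 even when called from f.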

We recognized that the finite limits on the representation of numbers imposed by an implementation would raise problems which might require some compromise in the definition of the language, and we tried to keep these compromises to a minimum. For example, it was clear that we would have to provide both integer and floating point representations of numbers and, because we anticipated use of the system in logical design, we wished to provide an efficient (one bit per element) representation of logical arrays as well. However, at the cost of considerable effort and some loss of efficiency, both well worthwhile, the transitions between representations were made to be imperceptible to the user, except for secondary effects such as storage requirements.
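A minimal Python sketch of the idea follows, assuming three hypothetical representations (packed bits, integers, floating point) chosen automatically by `store` and hidden by `fetch`. The caller sees the same numbers whichever representation was chosen; only the storage differs.

```python
def pack_bits(bits):
    """Store a logical vector at one bit per element, eight to a byte."""
    packed = bytearray((len(bits) + 7) // 8)
    for i, b in enumerate(bits):
        if b:
            packed[i // 8] |= 0x80 >> (i % 8)
    return packed

def unpack_bits(packed, n):
    """Recover the first n elements of a packed logical vector."""
    return [(packed[i // 8] >> (7 - i % 8)) & 1 for i in range(n)]

def store(values):
    """Choose the most compact representation that holds the values exactly."""
    if all(v in (0, 1) for v in values):
        return ("bits", len(values), pack_bits(values))
    if all(float(v).is_integer() for v in values):
        return ("integer", [int(v) for v in values])
    return ("float", [float(v) for v in values])

def fetch(stored):
    """Return plain numbers, whatever representation was chosen."""
    if stored[0] == "bits":
        return unpack_bits(stored[2], stored[1])
    return list(stored[1])

flags = [1, 0, 1] * 10
s = store(flags)
print(s[0], len(s[2]))         # bits 4: thirty logical values in four bytes
print(fetch(s) == flags)       # True
print(fetch(store([2, 3.0])))  # [2, 3]
```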

Problems such as overflow (i.e., a result outside the range of the representations available) were treated as domain errors, the term domain being understood as the domain of the machine function provided, rather than as the domain of the abstract mathematical function on which it was based.

One difficulty we had not anticipated was the provision of sensible results for the comparison of quantities represented to a limited precision. For example, if x and y were specified by y←2÷3 and x←3×y , then we wished to have the comparison 2=x yield 1 (representing true) even though the representation of the quantity x would differ slightly from 2.

This was solved by introducing a comparison tolerance (christened fuzz by L.M. Breed, who knew of its use in the Bell Interpreter [18]) which was multiplied by the larger in magnitude of the arguments to give a tolerance to be applied in the comparison. This tolerance was at first fixed (at 1e¯13) and was later made specifiable by the user. The matter has proven more difficult than we first expected, and discussion of it still continues [19, 20].
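The rule just described can be sketched in Python; `tolerant_equal` is a hypothetical name. Python floats are IEEE doubles rather than the System/360 floating point of the time, and in IEEE double arithmetic the example above, 3×2÷3, happens to round to exactly 2, so the sketch uses 0.1+0.2 to show a near-miss instead.

```python
FUZZ = 1e-13  # the value at which the tolerance was first fixed

def tolerant_equal(a, b, fuzz=FUZZ):
    """a=b with a relative tolerance: the fuzz is multiplied by the larger
    magnitude of the two arguments before it is applied."""
    return abs(a - b) <= fuzz * max(abs(a), abs(b))

x = 0.1 + 0.2
print(x == 0.3)                         # False: x is 0.30000000000000004
print(tolerant_equal(x, 0.3))           # True
print(tolerant_equal(1e15, 1e15 + 1))   # True: 1 is tiny relative to 1e15
print(tolerant_equal(1.0, 1.0 + 1e-9))  # False
```

Scaling by the larger magnitude keeps the test meaningful across very large and very small numbers, which a fixed absolute tolerance would not.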

A related, but less serious, question was what to do with the rational root of a negative number, a question which arose because the exponent (as in the expression ¯8*2÷3) would normally be presented as an approximation to a rational. Since we wished to make the mathematics behave “as you thought it did in high school” we wished to treat such cases properly at least for rationals with denominators of reasonable size. This was achieved by determining the result sign by a continued fraction expansion of the right argument (but only for negative left arguments) and worked for all denominators up to 80 and “most” above.
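The approach can be sketched in Python under stated assumptions: the hypothetical `rational_from_float` expands the exponent as a continued fraction until a convergent matches it to within a small tolerance, giving up once the denominator passes a limit (80 here, echoing the figure above); the hypothetical `power` then gives a real result for a negative base only when the denominator is odd, taking the sign from the parity of the numerator. The names, the tolerance, and the error handling are all assumptions, not the APL\360 code.

```python
import math

def rational_from_float(x, max_den=80, tol=1e-12):
    """Return (p, q) with p/q a continued-fraction convergent of x that
    matches x to within tol, or None if q would exceed max_den."""
    a = math.floor(x)
    h_prev, h = 1, a  # numerators of successive convergents
    k_prev, k = 0, 1  # denominators of successive convergents
    frac = x - a
    while abs(h / k - x) > tol * max(1.0, abs(x)):
        if frac == 0 or k > max_den:
            return None
        x_inv = 1 / frac
        a = math.floor(x_inv)
        frac = x_inv - a
        h_prev, h = h, a * h + h_prev
        k_prev, k = k, a * k + k_prev
    return (h, k) if k <= max_den else None

def power(base, exponent):
    """base*exponent, real-valued for a negative base when the exponent is
    (close to) a rational with an odd denominator."""
    if base >= 0:
        return base ** exponent
    pq = rational_from_float(exponent)
    if pq is None or pq[1] % 2 == 0:
        raise ValueError("DOMAIN ERROR")
    magnitude = abs(base) ** exponent
    return -magnitude if pq[0] % 2 else magnitude

print(rational_from_float(2 / 3))  # (2, 3)
print(power(-8, 2 / 3))            # approximately 4
print(power(-8, 1 / 3))            # approximately -2
```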

Most of the mathematical functions required were provided by programs taken from the work of the late Hirondo Kuki in the FORTRAN IV Subroutine Library. Certain functions (such as the inverse hyperbolics) were, however, not available and were developed, during the summers of 1967 and 1968, by K.M. Brown, then on the faculty of Cornell University.

The fundamental decision concerning the systems environment was the adoption of the concept of a workspace. As defined in “The APL\360 Terminal System” [21]:

APL\360 is built around the idea of a workspace, analogous to a notebook, in which one keeps work in progress. The workspace holds both defined functions and variables (data), and it may be stored into and retrieved from a library holding many such workspaces. When retrieved from a library by an appropriate command from a terminal, a copy of the stored workspace becomes active at that terminal, and the functions defined in it, together with all the APL primitives, become available to the user.

The three commands required for managing a library are “save”, “load”, and “drop”, which respectively store a copy of an active workspace into a library, make a copy of a stored workspace active, and destroy the library copy of a workspace. Each user of the system has a private library into which only he can store. However, he may load a workspace from any of a number of common libraries, or if he is privy to the necessary information, from another user’s private library. Functions or variables in different workspaces can be combined, either item by item or all at once, by a fourth command, called “copy”. By means of three cataloging commands, a user may get the names of workspaces in his own or a common library, or get a listing of functions or variables in his active workspace.

The language used to control the system functions of loading and storing workspaces was not APL, but comprised a set of system commands. The first character of each system command is a right parenthesis, which cannot occur at the left of a valid APL expression, and therefore acts as an “escape character”, freeing the syntax of what follows. System commands were used for other aspects such as sign-on and sign-off, messages to other users, and for the setting and sensing of various system parameters such as the index origin, the printing precision, the print width, and the random link used in generating the pseudo-random sequence for the random function.

When it first became necessary to name the implementation we chose the acronym formed from the book title A Programming Language [10] and, to allow a clear distinction between the language and any particular implementation of it, initiated the use of the machine name as part of the name of the implementation (as in APL\1130 and APL\360). Within the design group we had until that time simply referred to “the language”.

A brief working manual of the APL\360 system was first published in November 1966 [22], and a full manual appeared in 1968 [23]. The initial implementation (in FORTRAN on an IBM 7090) was discussed by Abrams [24], and the time-shared implementation on System/360 was discussed by Breed and Lathwell [25].

4. Systems

Use of the APL system by others in IBM began long before it had been completed to the point described in APL\360 User’s Manual [23]. We quickly learned the difficulties associated with changing the specifications of a system already in use, and the impact of changes on established users and programs. As a result we learned to appreciate the importance of the relatively long period of development of the language which preceded the implementation; early implementation of languages tends to stifle radical change, limiting further development to the addition of features and frills.

On the other hand, we also learned the advantages of a running model of the language in exposing anomalies and, in particular, the advantage of input from a large population of users concerned with a broad range of applications. This use quickly exposed the major deficiencies of the system.

Some of these deficiencies were rectified by the generalization of certain functions and the addition of others in a process of gradual evolution. Examples include the extension of the catenation function to apply to arrays other than vectors and to permit lamination, and the addition of a generalized matrix inverse function discussed by M.A. Jenkins [26].

Other deficiencies were of a systems nature, concerning the need to communicate between concurrent APL programs (as in our description of System/360), to communicate with the APL system itself within APL rather than by the ad hoc device of system commands, to communicate with alien systems and devices (as in the use of file devices), and the need to define functions within the language in terms of their representation by APL arrays. These matters required more fundamental innovations and led to what we have called the system phase.

The most pressing practical need for the application of APL systems to commercial data processing was the provision of file facilities. One of the first commercial systems to provide this was the File Subsystem reported by Sharp [27] in 1970, and defined in a SHARE presentation by L.M. Breed [28], and in a manual published by Scientific Time Sharing Corporation [29]. As its name implies, it was not an integral part of the language but was, like the system commands, a practical ad hoc solution to a pressing problem.

In 1970 R.H. Lathwell proposed what was to become the basis of a general solution to many systems problems of APL\360, a shared variable processor [30] which implemented the shared variable scheme of communication among processors. This work culminated in the APLSV System [31] which became generally available in 1973.

Falkoff’s “Some Implications of Shared Variables” [32] presents the essential notion of the shared variable system as follows:

A user of early APL systems essentially had what appeared to be an “APL machine” at his disposal, but one which lacked access to the rest of the world. In more recent systems, such as APLSV and others, this isolation is overcome and communication with other users and the host system is provided for by shared variables.

Two classes of shared variables are available in these systems. First, there is a general shared variable facility with which a user may establish arbitrary, temporary, interfaces with other users or with auxiliary processors. Through the latter, communication may be had with other elements of the host system, such as its file subsystem, or with other systems altogether. Second, there is a set of system variables which define parts of the permanent interface between an APL program and the underlying processor. These are used for interrogating and controlling the computing environment, such as the origin for array indexing or the action to be taken upon the occurrence of certain exceptional conditions.

5. A Detailed Example

At the risk of placing undue emphasis on one facet of the language, we will now examine in detail the evolution of the treatment of numeric constants, in order to illustrate how substantial changes were commonly arrived at by a sequence of small steps.

Any numeric constant, including a constant vector, can be written as an expression involving APL primitive functions applied to decimal numbers as, for example, in 3.14×10*-5 and -2.718 and (3.14×10*-5),(-2.718),5 . At the outset we permitted only nonnegative decimal constants of the form 2.718 ; all other values had to be expressed as compound statements.

Use of the monadic negation function in producing negative values in vectors was particularly cumbersome, as in (-4),3,(-5),-7 . We soon realized that the adoption of a specific “negative” symbol would solve the problem, and familiarity with Beberman’s work [33] led us to the adoption of his “high minus” which we had, rather fortuitously, included in our character set. The constant vector used above could now be written as ¯4,3,¯5,¯7 .

Solution of the problem of negative numbers emphasized the remaining awkwardness of factors of the form 10*n . At a meeting of the principals in Chicago, which included Donald Mitchell and Peter Calingaert of Science Research Associates, it was realized that the introduction of a scaled form of constant in the manner used in FORTRAN would not complicate the syntax, and this was soon adopted.

These refinements left one function in the writing of any vector constant, namely, catenation. The straightforward execution of an expression for a constant vector of n elements involved n-1 catenations of scalars with vectors of increasing length, the handling of roughly .5×n×n+1 elements in all. To avoid gross inefficiencies in the input of a constant vector from the keyboard, catenation was therefore given special treatment in the original implementation.
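The quadratic cost can be checked with a short count. The Python sketch below is illustrative only. It assumes the expression is evaluated right to left, as in a,(b,(c,d)), and that each catenation is charged one unit for every element of the vector it produces. The function name catenation_cost is hypothetical, and the model says nothing about how the original implementation actually allocated storage.

```python
def catenation_cost(n: int) -> int:
    """Elements handled when an n-item constant vector is built by n-1
    right-to-left catenations, as in a,(b,(c,d)) for n = 4.

    Cost model (an assumption): each catenation is charged one unit per
    element of the vector it produces.
    """
    total = 0
    length = 1  # the rightmost scalar, viewed as a one-element vector
    for _ in range(n - 1):
        length += 1      # each catenation adds one scalar on the left
        total += length  # ...and produces a whole new vector of that length
    return total

for n in (4, 10, 100):
    print(n, catenation_cost(n), n * (n + 1) // 2)
```

Under this model the exact total is n×(n+1)÷2 minus 1, which matches the .5×n×n+1 of the text to within one element.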

This system had been in use for perhaps six months when it occurred to Falkoff that since commas were not required in the normal representation of a matrix, vector constants might do without them as well. This seemed outrageously simple, and we looked for flaws. Finding none, we adopted and implemented the idea immediately, but it took some time to overcome the habit of writing expressions such as (3,3)⍴x instead of 3 3⍴x .
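The end state of this sequence of small steps can be summarized as a tiny scanner for numeric vector constants. The Python sketch below assumes blank-separated tokens, the high minus ¯ as the only sign, and E as the scale marker with an optional high minus in the exponent. The name parse_vector_constant is hypothetical, and the sketch ignores everything else an APL scanner must handle (names, functions, parentheses). An ASCII - is rejected because in APL it denotes the negation function, not part of a constant.

```python
import re

# One numeric token: optional high minus, digits with an optional decimal
# point, then an optional scale factor whose exponent may carry a high minus.
_TOKEN = re.compile(r"¯?(\d+\.?\d*|\.\d+)(E¯?\d+)?")

def parse_vector_constant(text: str) -> list[float]:
    """Parse a blank-separated APL-style numeric vector constant."""
    values = []
    for token in text.split():
        if not _TOKEN.fullmatch(token):
            # '-' is the negation function in APL, so '-4' is not a constant.
            raise ValueError(f"not a numeric constant: {token!r}")
        values.append(float(token.replace("¯", "-")))
    return values

print(parse_vector_constant("¯4 3 ¯5 ¯7"))  # [-4.0, 3.0, -5.0, -7.0]
```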

6. Conclusions

Nearly all programming languages are rooted in mathematical notation, employing such fundamental notions as functions, variables, and the decimal (or other radix) representation of numbers, and a view of programming languages as part of the longer-range development of mathematical notation can serve to illuminate their development.

Before the advent of the general-purpose computer, mathematical notation had, in a long and painful evolution well-described in Cajori’s history of mathematical notations [34], embraced a number of important notions:

| 1. | The notion of assigning an alphabetic name to a variable or unknown quantity (Cajori, Sections 339-341). | |

| 2. | The notion of a function which applies to an argument or arguments to produce an explicit result which can itself serve as argument to another function, and the associated adoption of specific symbols (such as + and ×) to denote the more common functions (Cajori, Sections 200-233). | |

| 3. | Aggregation or grouping symbols (such as the parentheses) which make possible the use of composite expressions with an unambiguous specification of the order in which the component functions are to be executed (Cajori, Sections 342-355). | |

| 4. | Simple uniform representations for numeric quantities (Cajori, Sections 276-289). | |

| 5. | The treatment of quantities without concern for the particular representation used. | |

| 6. | The notion of treating vectors, matrices, and higher-dimensional arrays as entities, which had by this time become fairly widespread in mathematics, physics, and engineering. |

With the first computer languages (machine languages) all of these notions were, for good practical reasons, dropped; variable names were represented by “register numbers”, application of a function (as in a+b) was necessarily broken into a sequence of operations (such as “Load register 801 into the Addend register, Load register 802 into the Augend register, etc.”), grouping of operations was therefore nonexistent, the various functions provided were represented by numbers rather than by familiar mathematical symbols, results depended sharply on the particular representation used in the machine, and the use of arrays, as such, disappeared.

Some of these limitations were soon removed in early “automatic programming” languages, and languages such as FORTRAN introduced a limited treatment of arrays, but many of the original limitations remain. For example, in FORTRAN and related languages the size of an array is not a language concept, the asterisk is used instead of any of the familiar mathematical symbols for multiplication, the power function is represented by two occurrences of this symbol rather than by a distinct symbol, and concern with representation still survives in declarations.

APL has, in its development, remained much closer to mathematical notation, retaining (or selecting one of) established symbols where possible, and employing mathematical terminology. Principles of simplicity and uniformity have, however, been given precedence, and these have led to certain departures from conventional mathematical notation as, for example, the adoption of a single form (analogous to 3+4) for the dyadic functions, a single form (analogous to -4) for monadic functions, and the adoption of a uniform rule for the application of all scalar functions to arrays. This relationship to mathematical notation has been discussed in The Design of APL [1] and in “Algebra as a Language” which occurs as Appendix A in Algebra: An Algorithmic Treatment [35].

The close ties with mathematical notation are evident in such things as the reduction operator (a generalization of sigma notation), the inner product (a generalization of matrix product), and the outer product (a generalization of the outer product used in tensor analysis). In other aspects the relation to mathematical notation is closer than might appear. For example, the order of execution of the conventional expression f g h (x) can be expressed by saying that the right argument of each function is the value of the entire expression to its right; this rule, extended to dyadic as well as monadic functions, is the rule used in APL. Moreover, the term operator is used in the same sense as in “derivative operator” or “convolution operator” in mathematics, and to avoid conflict it is not used as a synonym for function.
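The rule for order of execution is simple enough to state in a few lines of code. The following Python sketch evaluates a flat list of numbers and function symbols with no precedence hierarchy: a symbol at the left end of an expression is applied monadically, and otherwise a number followed by a symbol is the left argument of a dyadic application whose right argument is the value of everything further right. The symbol tables, and the omission of parentheses and vector arguments, are simplifying assumptions of this sketch.

```python
import operator

# Hypothetical symbol tables: one symbol may name both a dyadic and a
# monadic function, and position alone decides which is meant.
DYADIC = {"+": operator.add, "-": operator.sub, "×": operator.mul, "÷": operator.truediv}
MONADIC = {"-": operator.neg, "÷": lambda y: 1 / y}

def evaluate(tokens):
    """Evaluate numbers and function symbols right to left, no precedence."""
    head = tokens[0]
    if len(tokens) == 1:
        return head
    if isinstance(head, str):
        # Nothing to the left of the symbol: apply it monadically.
        return MONADIC[head](evaluate(tokens[1:]))
    # Number, symbol, rest: the right argument is the value of the rest.
    return DYADIC[tokens[1]](head, evaluate(tokens[2:]))

print(evaluate([3, "×", 4, "+", 5]))  # 27, that is 3×(4+5)
print(evaluate([2, "-", 3, "-", 4]))  # 3, that is 2-(3-4)
print(evaluate(["-", 3, "-", 1]))     # -2, that is -(3-1)
```

The third call shows a single symbol serving as both negation and subtraction, resolved by position alone.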

As a corollary we may remark that the other major programming languages, although known to the designers of APL, exerted little or no influence, because of their radical departures from the line of development of mathematical notation which APL continued. A concise view of the current use of the language, together with comments on matters such as writing style, may be found in Falkoff’s review of the 1975 and 1976 International APL Congresses [36].

Although this is not the place to discuss the future, it should be remarked that the evolution of APL is far from finished. In particular, there remain large areas of mathematics, such as set theory and vector calculus, which can clearly be incorporated in APL through the introduction of further operators.

There are also a number of important features which are already in the abstract language, in the sense that their incorporation requires little or no new definition, but are as yet absent from most implementations. Examples include complex numbers, the possibility of defining functions of ambiguous valence (already incorporated in at least two systems [37, 38]), the use of user defined functions in conjunction with operators, and the use of selection functions other than indexing to the left of the assignment arrow.

We conclude with some general comments, taken from The Design of APL [1], on principles which guided, and circumstances which shaped, the evolution of APL:

The actual operative principles guiding the design of any complex system must be few and broad. In the present instance we believe these principles to be simplicity and practicality. Simplicity enters in four guises: uniformity (rules are few and simple), generality (a small number of general functions provide as special cases a host of more specialized functions), familiarity (familiar symbols and usages are adopted whenever possible), and brevity (economy of expression is sought). Practicality is manifested in two respects: concern with actual application of the language, and concern with the practical limitations imposed by existing equipment.

We believe that the design of APL was also affected in important respects by a number of procedures and circumstances. Firstly, from its inception APL has been developed by using it in a succession of areas. This emphasis on application clearly favors practicality and simplicity. The treatment of many different areas fostered generalization: for example, the general inner product was developed in attempting to obtain the advantages of ordinary matrix algebra in the treatment of symbolic logic.

Secondly, the lack of any machine realization of the language during the first seven or eight years of its development allowed the designers the freedom to make radical changes, a freedom not normally enjoyed by designers who must observe the needs of a large working population dependent on the language for their daily computing needs. This circumstance was due more to the dearth of interest in the language than to foresight.

Thirdly, at every stage the design of the language was controlled by a small group of not more than five people. In particular, the men who designed (and coded) the implementation were part of the language design group, and all members of the design group were involved in broad decisions affecting the implementation. On the other hand, many ideas were received and accepted from people outside the design group, particularly from active users of some implementation of APL.

Finally, design decisions were made by Quaker consensus; controversial innovations were deferred until they could be revised or reevaluated so as to obtain unanimous agreement. Unanimity was not achieved without cost in time and effort, and many divergent paths were explored and assessed. For example, many different notations for the circular and hyperbolic functions were entertained over a period of more than a year before the present scheme was proposed, whereupon it was quickly adopted. As the language grows, more effort is needed to explore the ramifications of any major innovation. Moreover, greater care is needed in introducing new facilities, to avoid the possibility of later retraction that would inconvenience thousands of users.

Acknowledgments

For critical comments arising from their reading of this paper, we are indebted to a number of our colleagues who were there when it happened, particularly P.S. Abrams of Scientific Time Sharing Corporation, R.H. Lathwell and R.D. Moore of I.P. Sharp Associates, and L.M. Breed and E.E. McDonnell of IBM Corporation.

References

| 1. | Falkoff, A.D., and K.E. Iverson, The Design of APL. IBM Journal of Research and Development, Vol. 17, No. 4, July 1973, pages 324-334. | |

| 2. | The Story of APL, Computing Report in Science and Engineering, IBM Corp., Vol. 6, No. 2, April 1970, pages 14-18. | |

| 3. | Origin of APL, a videotape prepared by John Clark for the Fourth APL Conference, 1974, with the participation of P.S. Abrams, L.M. Breed, A.D. Falkoff, K.E. Iverson, and R.D. Moore. Available from Orange Coast Community College, Costa Mesa, California. | |

| 4. | Falkoff, A.D., and K.E. Iverson, APL Language, Form No. GC26-3847, IBM Corp., White Plains, New York, 1975. | |

| 5. | McDonnell, E.E., The Story of ○. APL Quote-Quad, 8(2): 48-45 (1977) Dec. ACM, SIGPLAN Technical Committee on APL (STAPL). | |

| 6. | Brooks, F.P., and K.E. Iverson, Automatic Data Processing. New York: Wiley, 1963. | |

| 7. | Falkoff, A.D., Algorithms for Parallel Search Memories. Journal of the ACM, 9: 488-511 (1962). | |

| 8. | Iverson, K.E., Machine Solutions of Linear Differential Equations: Applications to a Dynamic Economic Model, Ph.D. Thesis, Harvard University, 1954. | |

| 9. | Iverson, K.E. The Description of Finite Sequential Processes. Proceedings of the Fourth London Symposium on Information Theory (Colin Cherry, ed.), pp. 447-457. 1960. | |

| 10. | Iverson, K.E., A Programming Language. New York: Wiley, 1962. | |

| 11. | Graduate Program in Automatic Data Processing (brochure), Harvard University, 1954. | |

| 12. | Iverson, K.E., Graduate Research and Instruction. Proceedings of First Conference on Training Personnel for the Computing Machine Field, Wayne State University, Detroit, Michigan (Arvid W. Jacobson, ed.), pp. 25-29. June 1954. | |

| 13. | Falkoff, A.D., K.E. Iverson, and E. H. Sussenguth, A Formal Description of System/360. IBM Systems Journal, 3(3): 198-263 (1964). | |

| 14. | Iverson, K.E., Formalism in Programming Languages. Communications of the ACM, 7(2): 80-88 (1964) Feb. | |

| 15. | Iverson, K.E., Elementary Functions, Science Research Associates, Chicago, Illinois, 1966. | |

| 16. | Berry, P.C., APL\360 Primer (GH20-0689), IBM Corp., White Plains, New York, 1969. | |

| 17. | Hellerman, H., Experimental Personalized Array Translator System. Communications of the ACM, 7(7): 433-438 (1964) July. | |

| 18. | Wolontis, V.M., A Complete Floating Point Decimal Interpretive System, Tech. Newsl. No. 11, IBM Applied Science Division, 1956. | |

| 19. | Lathwell, R.H., APL Comparison Tolerance. APL 76 Conference Proceedings, pp. 255-258. Association for Computing Machinery, 1976. | |

| 20. | Breed, L.M., Definitions for Fuzzy Floor and Ceiling, Tech. Rep. No. TR03.024, IBM Corp., White Plains, New York, March 1977. | |

| 21. | Falkoff, A.D., and K.E. Iverson, The APL\360 Terminal System. Symposium on Interactive Systems for Experimental Applied Mathematics (M. Klerer and J. Reinfelds, eds.), pp. 22-37. New York: Academic Press, 1968. | |

| 22. | Falkoff, A.D., and K.E. Iverson, APL\360, IBM Corp., White Plains, New York, November 1966. | |

| 23. | Falkoff, A.D., and K.E. Iverson, APL\360 User’s Manual, IBM Corp., White Plains, New York, August 1968. | |

| 24. | Abrams, P.S., An Interpreter for Iverson Notation, Tech. Rep. CS47, Computer Science Department, Stanford University, 1966. | |

| 25. | Breed, L.M., and R.H. Lathwell, The Implementation of APL\360. Symposium on Interactive Systems for Experimental Applied Mathematics (M. Klerer and J. Reinfelds, eds.), pp. 390-399. New York: Academic Press, 1968. | |

| 26. | Jenkins, M.A., The Solution of Linear Systems of Equations and Linear Least Squares Problems in APL, IBM Tech. Rep. No. 320-2989, 1970. | |

| 27. | Sharp, Ian P., The Future of APL to Benefit from a New File System. Canadian Data Systems, March 1970. | |

| 28. | Breed, L.M., The APL PLUS File System. Proceedings of SHARE XXXV, p. 392. August 1970. | |

| 29. | APL PLUS File Subsystem Instruction Manual, Scientific Time Sharing Corp., Washington, D.C., 1970. | |

| 30. | Lathwell, R.H., System Formulation and APL Shared Variables. IBM Journal of Research and Development, 17(4): 353-359 (1973) July. | |

| 31. | Falkoff, A.D., and K.E. Iverson, APLSV User’s Manual, IBM Corp., White Plains, New York, 1973. | |

| 32. | Falkoff, A.D., Some Implications of Shared Variables. In Formal Languages and Programming (R. Aguilar, ed.), pp. 65-75. Amsterdam: North-Holland Publ., 1976. Reprinted in APL 76 Conference Proceedings, pp. 141-148. Association for Computing Machinery, 1976. | |

| 33. | Beberman, M., and H.E. Vaughn, High School Mathematics Course 1. Indianapolis, Indiana: Heath, 1964. | |

| 34. | Cajori, F., A History of Mathematical Notations, Vol. I, Notations in Elementary Mathematics. La Salle, Illinois: Open Court Publ., 1928. | |

| 35. | Iverson, K.E., Algebra: An Algorithmic Treatment. Reading, Massachusetts: Addison Wesley, 1972. | |

| 36. | Falkoff, A.D., APL75 and APL76: an overview of the Proceedings of the Pisa and Ottawa Congresses. ACM Computing Reviews, 18(4): 139-141 (1977) Apr. | |

| 37. | Weidmann, Clark, APLUM Reference Manual, University of Massachusetts, Amherst, Massachusetts, 1975. | |

| 38. | Sharp APL Tech. Note No.25, I. P. Sharp Associates, Toronto, Canada, 1976. |

Appendix A

Reprinted from APL\360 User’s Manual [23].

The APL language was first defined by K.E. Iverson in A Programming Language (Wiley, 1962) and has since been developed in collaboration with A.D. Falkoff. The APL\360 Terminal System was designed with the additional collaboration of L.M. Breed, who with R.D. Moore*, also designed the S/360 implementation. The system was programmed for S/360 by Breed, Moore, and R.H. Lathwell, with continuing assistance from L.J. Woodrum⍟, and contributions by C.H. Brenner, H.A. Driscoll**, and S.E. Krueger**. The present implementation also benefitted from experience with an earlier version, designed and programmed for the IBM 7090 by Breed and P.S. Abrams⍟⍟.

The development of the system also profited from ideas contributed by many users and colleagues, notably E.E. McDonnell, who suggested the notation for the signum and the circular functions.

In the preparation of the present manual, the authors are indebted to L.M. Breed for many discussions and suggestions; to R.H. Lathwell, E.E. McDonnell, and J.G. Arnold*⍟ for critical reading of successive drafts; and to Mrs. G.K. Sedlmayer and Miss Valerie Gilbert for superior clerical assistance.

A special acknowledgement is due to John L. Lawrence, who provided important support and encouragement during the early development of APL implementation, and who pioneered the application of APL in computer-related instruction.

| * | I.P. Sharp Associates, Toronto, Canada. | |

| ⍟ | General Systems Architecture, IBM Corporation, Poughkeepsie, N.Y. | |

| ** | Science Research Associates, Chicago, Illinois. | |

| ⍟⍟ | Computer Science Department, Stanford University, Stanford, California. | |

| *⍟ | Industry Development, IBM Corporation, White Plains, NY. |

APL Language Summary

APL is a general-purpose programming language with the following characteristics (reprinted from APL Language [4]):

The primitive objects of the language are arrays (lists, tables, lists of tables, etc.). For example, a+b is meaningful for arrays a and b , the size of an array (⍴a) is a primitive function, and arrays may be indexed by arrays as in a[3 1 4 2] .

The syntax is simple: there are only three statement types (name assignment, branch, or neither), there is no function precedence hierarchy, functions have either one, two, or no arguments, and primitive functions and defined functions (programs) are treated alike.

The semantic rules are few: the definitions of primitive functions are independent of the representation of data to which they apply, all scalar functions are extended to other arrays in the same way (that is, item-by-item), and primitive functions have no hidden effects (so-called side-effects).
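The uniform item-by-item extension of scalar functions can be sketched as a single recursive rule. In the Python below, nested lists stand in for arrays of any rank, a scalar argument is paired with every item of the other argument, and arrays of unequal length raise an error analogous to APL's LENGTH ERROR. The name scalar_dyadic and the list-based representation are assumptions for illustration, not a description of any APL implementation.

```python
import operator

def scalar_dyadic(f, a, b):
    """Apply scalar function f item by item to nested-list arrays a and b."""
    a_is_array = isinstance(a, list)
    b_is_array = isinstance(b, list)
    if not a_is_array and not b_is_array:
        return f(a, b)  # two scalars: apply f directly
    if a_is_array and b_is_array:
        if len(a) != len(b):
            raise ValueError("LENGTH ERROR")
        return [scalar_dyadic(f, x, y) for x, y in zip(a, b)]
    if a_is_array:
        return [scalar_dyadic(f, x, b) for x in a]  # extend scalar b
    return [scalar_dyadic(f, a, y) for y in b]      # extend scalar a

print(scalar_dyadic(operator.add, [1, 2, 3], 10))  # [11, 12, 13]
```

Because the same rule serves every scalar function, nothing in it depends on which function f is.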

The sequence control is simple: one statement type embraces all types of branches (conditional, unconditional, computed, etc.), and the termination of the execution of any function always returns control to the point of use.

External communication is established by means of variables which are shared between APL and other systems or subsystems. These shared variables are treated both syntactically and semantically like other variables. A subclass of shared variables, system variables, provides convenient communication between APL programs and their environment.

The utility of the primitive functions is vastly enhanced by operators which modify their behavior in a systematic manner. For example, reduction (denoted by /) modifies a function to apply over all elements of a list, as in +/l for summation of the items of l . The remaining operators are scan (running totals, running maxima, etc.), the axis operator which, for example, allows reduction and scan to be applied over a specified axis (rows or columns) of a table, the outer product, which produces tables of values as in rate∘.*years for an interest table, and the inner product, a simple generalization of matrix product which is exceedingly useful in data processing and other non-mathematical applications.
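The operators described above can be sketched as higher-order functions, each taking a function and producing a result computed from it. The Python below is a minimal sketch under stated assumptions: vectors are lists, matrices are lists of rows, reduction is evaluated right to left in keeping with APL's order of execution, and the names reduce_right, scan, outer, and inner are hypothetical. The axis operator is omitted, and scan recomputes each prefix for clarity rather than speed.

```python
import operator
from functools import reduce

def reduce_right(f, xs):
    """f/xs: place f between the items and evaluate right to left (xs non-empty)."""
    return reduce(lambda acc, x: f(x, acc), reversed(xs[:-1]), xs[-1])

def scan(f, xs):
    """f\\xs: item i is the reduction of the first i+1 items."""
    return [reduce_right(f, xs[: i + 1]) for i in range(len(xs))]

def outer(f, xs, ys):
    """xs∘.f ys: a table with f applied to every pair (x, y)."""
    return [[f(x, y) for y in ys] for x in xs]

def inner(f, g, a, b):
    """a f.g b for matrices: f-reduction of g applied along row i of a and column j of b."""
    columns = list(zip(*b))
    return [[reduce_right(f, [g(x, y) for x, y in zip(row, col)]) for col in columns]
            for row in a]

print(reduce_right(operator.sub, [1, 2, 3]))  # 2, that is 1-(2-3)
print(scan(operator.add, [1, 2, 3, 4]))       # [1, 3, 6, 10]
print(inner(operator.add, operator.mul, [[1, 2], [3, 4]], [[5, 6], [7, 8]]))  # [[19, 22], [43, 50]]
```

Passing operator.add and operator.mul to inner gives the ordinary matrix product +.×, while operator.or_ and operator.and_ give the Boolean product ∨.∧. An interest table in the spirit of rate∘.*years would be outer(operator.pow, rates, years) for a list of growth factors and a list of years.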

The number of primitive functions is small enough that each is represented by a single easily-read and easily-written symbol, yet the set of primitives embraces operations from simple addition to grading (sorting) and formatting. The complete set can be classified as follows:

| Arithmetic: | + - × ÷ * ⍟ ○ | ⌊ ⌈ ! ⌹ | |

| Boolean and Relational: | ∨ ∧ ⍱ ⍲ ~ < ≤ = ≥ > ≠ | |

| Selection and Structural: | / \ ⌿ ⍀ [;] ↑ ↓ ⍴ , ⌽ ⍉ ⊖ | |

| General: | ∊ ⍳ ? ⊥ ⊤ ⍋ ⍒ ⍎ ⍕ |

Transcript of Presentation

JAN Lee: For the devotees of the one-liners and APL, I’d now like to present Ken Iverson. Ken received his Bachelor’s Degree from Queen’s University of Kingston, Ontario, Canada, and his Master’s and Ph.D. from Harvard University. In looking through his vita, it is very interesting to me to note his progress and to note that he’s only had two jobs since he graduated. One at Harvard as Assistant Professor of Applied Mathematics, and then he joined IBM in 1960 and has progressed through the ranks since that time. In progressing through the ranks, he was appointed an IBM Fellow in 1971 for his work on APL, I believe. It’s also interesting to note that three years later, having been awarded his Fellowship from IBM, he was then appointed the Manager of the APL Design Group. Ken Iverson.

Kenneth Iverson: Thank you, JAN. They say never quarrel with a newspaper, because they will have the last word, and I suppose the same applies to chairmen. So I won’t even comment on that introduction.

Once launched on a topic it’s very easy to forget to mention the contributions of others, and although I have a very good memory, it is, in the words of one of my colleagues, very short, so I feel I must begin with acknowledgments, and I first want to mention Adin Falkoff, my co-author and colleague of nearly 20 years. In musing on the contributors to APL in the light of Radin’s speculations last night about the relationship between the characteristics of artifacts and the characteristics of the designers, it struck me that we in the APL work tend to remember one another not for the fantastic ideas that we’ve contributed, but for the arrant nonsense that we have fought to keep out. Now, I think there’s really a serious point here; namely, that design really should be concerned largely, not so much with collecting a lot of ideas, but with shaping them with a view of economy and balance. I think a good designer should make more use of Occam’s razor than of the dustbag of a vacuum cleaner, and I thought this was important enough that it would be worthwhile looking for some striking examples of sort of overelaborate design. I was surprised that I didn’t find it all that easy, except perhaps for the designers of programming languages and American automobiles. I think that designers seem to have this feeling, a gut feeling, of a need for parsimony.

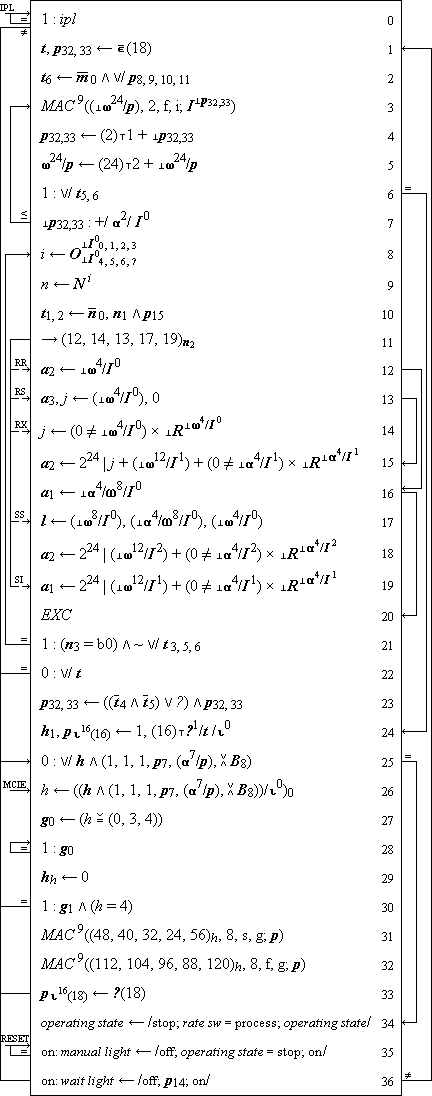

We tried to organize this discussion according to what seemed to be the major phases, and to label these phases with what would seem to be the major preoccupation of the period; and then to try to identify what were the main points of advance in that period, and also to try to identify what seemed to be the main influences at work at that time [Frame 1].


When I say “academic”, this is simply a reflection of the fact that when I began to think about this, it was when I was teaching at Harvard, and my main concern was a need for clear expression, some sort of notation for use in classroom work and in writing. As a matter of fact, at the time I was engaged in trying to write a book together with Fred Brooks, and this was one of the motivations for the development of notation.

But it really was as a tool for communication and exposition that I was thinking of it. What seemed to me the major influence at that time was primarily my mathematical background. I was a renegade from mathematics. There are lots of renegades but many of them didn’t desert as early as I did. [Frame 2] lists the main aspects: the idea of functions with explicit arguments and explicit results, the use of logical functions.


Let me just mention one thing. You may have noticed that, for example, relations in APL are not treated separately as assertions, but simply as more logical functions. They are functions, they produce results which you can think of as true or false, but which in fact are represented by ordinary numbers, 1 and 0.
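Because relations yield ordinary numbers, they compose directly with arithmetic: in APL, +/x>0 counts the positive items of x, and +/x×x>0 sums them. The Python sketch below imitates this by converting each comparison result to 1 or 0; the helper relation and the sample data are hypothetical.

```python
import operator

def relation(op, xs, y):
    """Apply a relation item by item, giving 1 or 0 as APL's < ≤ = ≥ > ≠ do."""
    return [int(op(x, y)) for x in xs]

xs = [3, -1, 4, -1, 5]
positive = relation(operator.gt, xs, 0)            # [1, 0, 1, 0, 1], like x>0
print(sum(positive))                               # 3, like +/x>0
print(sum(x * b for x, b in zip(xs, positive)))    # 12, like +/x×x>0
```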

Also, in the treatment of arrays, let me mention a number of concepts from tensor analysis, primarily the idea of simply thinking of the array as the one and only kind of argument in the language, and treating scalars, vectors, and matrices as special cases which had rank 0, 1, and 2.

The emphasis on identities: by this I mean that in analyzing any function we tended to worry about end conditions. For example, what happens in the case of an empty vector? And if you look at this, you’ll see that many of the decisions have really come out as simply making identities hold over as wide a range as possible, and so avoiding all kinds of special cases.
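One identity of this kind is that f/a,b should equal (f/a) f (f/b) for every split of a vector, including splits in which a or b is empty. That forces the reduction of an empty vector to be the identity element of f, so that +/⍳0 is 0 and ×/⍳0 is 1. The Python sketch below assumes a small hypothetical table of identity elements; it uses Python's infinities for maximum and minimum, which only approximates what APL systems return for ⌈/⍳0 and ⌊/⍳0.

```python
import operator

# Hypothetical identity table for this sketch; the infinities stand in for
# the extreme representable numbers an APL system would use.
IDENTITY = {operator.add: 0, operator.mul: 1, max: float("-inf"), min: float("inf")}

def reduce_with_identity(f, xs):
    """f/xs, evaluated right to left, starting from f's identity element."""
    result = IDENTITY[f]
    for x in reversed(xs):
        result = f(x, result)
    return result

print(reduce_with_identity(operator.add, []))  # 0, like +/⍳0
print(reduce_with_identity(operator.mul, []))  # 1, like ×/⍳0
```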

Finally, the idea of operators, and let me clarify the terminology. I was assured that everybody in the audience would have read the summary, and therefore this won’t be necessary, but I will say something about it anyway. Commonly, “functions” are referred to indiscriminately as “functions”, “procedures”, “operators”, and so on, and we tend to say that we call a function a function, and reserve the term “operator” for the way it is used in certain branches of mathematics when we speak about a derivative operator, a convolution operator, and so on. The basic notion is that it’s something that applies to a function or functions, and produces a new function. You think of the “slash” in the reduction, for example. It’s an operator that applies to any function to produce a new function. Similarly, the dot in the inner product and so on. And this too, I think, was probably because of the mathematical influence.


The next phase, then, was the machine description phase [Frame 3]. I joined IBM in 1960 and shortly thereafter began to work with Adin Falkoff. We turned much more attention to the problem of describing machines themselves. Let me just mention a couple of the things that were sharpened up in this period. One, the order of execution which, if I have time, I will return to a little later, in a different context. And the other was the idea of shared variables. In describing machines one faces very directly the problem of concurrent processes. You have the CPU, you have the channel, and so on. They are concurrent processes, and the only thing that makes them a system is the fact that they are able to communicate. And how do they communicate? We chose the idea that any communication has got to take place somehow through the sharing of some medium, some variable. And that is the general notion which has become, in fact, a rather important aspect of later APL.


Frame 4. CPU, central processing unit system program.

Let me just give you an example of this machine description [Frame 4]. That is a 37-line description of the CPU of the 360, and although it obviously subordinates detail, by invoking certain other functions which themselves have definitions, it does exhibit I think in a very straightforward way, the essential detail of the CPU.

You would expect that the channel would be a separate process, but you might not expect that to really describe what happens in a control panel clearly, you’ve got to recognize that it’s a concurrent process, and it may be in different states. And so it turned out that even the control panel seemed to lend itself very nicely to a clear description as a separate processor. There are certain variables that it shares with the CPU and possibly with other units.

The third phase was the one of implementation. I think it was about 1964 that we started thinking seriously of implementation, although this had always been in the back of our minds. We are sometimes asked “had we thought about implementation?” The answer is yes and no. We simply took it for granted that a language that’s good for communication [of algorithms] between you and me is also good for communication with a machine, and I still feel the same way about that. The first thing that we really started thinking seriously about was the question of typography. Earlier we had, in effect, a publication form. We didn’t have to worry seriously about the particular symbols used. And once we faced the question of designing an actual implementation, we had to face that question. And I think pretty much following the advice of Herbert Hellerman, who was also with IBM at the time, and his interest in time sharing systems, we decided to take the Selectric typing element and design ourselves an 88-character set, and constrain it to that.

Well, this had what to me were surprising implications for the language. It forced us to think seriously about linearizing things. We could no longer afford superscripts, subscripts, and so on, and somewhat to our surprise we found that this really generalized, and in many cases, simplified the language. The problems of addressing more than a two-dimensional array disappeared — you seem to soon run out of corners on a letter. But when you linearize things, you can go to any dimension.

We faced the problem of things like absolute value. We standardized that, and simply said that all monadic functions appear to the left and are represented by a single symbol.

Then there was the idea from mathematics that a function may have two valences: the minus sign, for example, can represent either subtraction or negation, and there’s never any confusion because it’s resolved by context. We simply exploited that, and that was one of the ways that we greatly conserved symbols. Thus x*y, for example, is x to the power y, and *y, as you might guess, is e to the power y, in other words, the exponential.

Faced with only 88 characters you have to have some way of extending them, and I think the first thing that occurred to us was that when we adopted the exclamation mark for the factorial, we didn’t really want to give it space on the keyboard, and recognized we could use a quote symbol and a period. That opened up the idea that is now very familiar, of forming compound characters. And this has given us a large enough number of easily writeable and easily readable characters.


Back to the question of the implementation [Frame 5]. In 1965 when we were joined by Larry Breed, who was very much interested in actually producing an implementation, we sort of arrived at two decisions. First of all that it should be experimental. We really were not necessarily aiming at something of practical application, but something that we could experiment with and refine our ideas about the language, and secondly that compromises with the machine, with the representation and so on, should be absolutely minimized. And with those two ideas in mind, Larry Breed and Phil [P.S.] Abrams proposed that we should do this, at the outset at least, as an interpreter. Somewhat surprisingly, I think that all APL implementations have essentially remained that way, and I think not because it’s all that difficult to do compilation, but rather, because of its array nature, it is a language which allows reasonably efficient interpretation. And so there hasn’t been quite the pressure to make a compiler. Also interpreters do have advantages, particularly for interactive use.

The first implementation was on a 7090. We actually had card input, so we had to have transliteration of symbols, AV for absolute value and all that kind of nonsense. But we did have a system running. We could run it and try it out with card input. Then, there became available to us a system called TSM, for Time Sharing Monitor, which allowed us to really use our typing element and a terminal, and so then we had essentially a terminal implementation very much like what you see today — of course, much more limited.

TSM unfortunately remained available to us for a relatively short period, and shortly thereafter, early in 1966 it must have been, we turned attention to an implementation on the 360. And in fact, I might mention that the first interpreter took 2½ people 4½ months, though it was not as complete as what you see today. The first implementation on the 360 took about the same length of time.

The implementation forced us to think seriously about certain aspects that we had so far been able to ignore: The question of a formal way of making function definitions. Once we faced the question of function definition, then there are questions of name scope. At the outset we had a very simple thing, that names were simply localized. I guess the closest thing would be to say that they were like “own” variables in a function. And then at one point, Larry Breed came back from a talk he attended given by Al Perlis in which Al was talking about what I think he called at the time “dynamic localization” or “dynamic local name scope” and we very quickly adopted that, and got what we have now. Names are localized to the function, but in effect can propagate downwards.
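To make "propagate downwards" concrete, here is a minimal Python sketch of dynamic localization. It assumes a stack of name tables that is pushed on each call and popped on return. The names `DynamicEnv`, `inner`, and `outer` are hypothetical, and the sketch is not meant to describe how APL\360 itself stored names.

```python
_UNDEFINED = object()  # marks a localized name that has not been assigned yet


class DynamicEnv:
    """Toy environment with dynamic localization: a stack of frames (dicts)."""

    def __init__(self, global_vars):
        self.frames = [dict(global_vars)]

    def lookup(self, name):
        # Search from the most recent call outward, so a called function
        # sees the names localized by whoever called it.
        for frame in reversed(self.frames):
            if name in frame:
                if frame[name] is _UNDEFINED:
                    raise NameError(f"value error: {name}")
                return frame[name]
        raise NameError(f"value error: {name}")

    def assign(self, name, value):
        # Assign to the nearest frame that localizes the name, else globally.
        for frame in reversed(self.frames):
            if name in frame:
                frame[name] = value
                return
        self.frames[0][name] = value

    def call(self, func, local_names=()):
        # Localize the listed names for the duration of the call only.
        self.frames.append({name: _UNDEFINED for name in local_names})
        try:
            return func(self)
        finally:
            self.frames.pop()


def inner(env):
    return env.lookup("X")  # X is not local to inner


def outer(env):
    env.assign("X", "outer's local X")
    return env.call(inner)


env = DynamicEnv({"X": "global X"})
print(env.call(outer, ["X"]))  # outer's local X
print(env.lookup("X"))         # global X
```

`inner` sees the `X` that `outer` localized, because `outer` called it. The global `X` is untouched once `outer` returns.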

The machine representation of numbers raised questions about comparisons. For example, when I say Y is 2 divided by 3, and then try and compare 2 with Y times 3, do I want it to agree or not? Well, we wanted the mathematics to behave the way you thought it did in high school, more or less, and so we introduced this notion of what we then called “fuzz” but we now more respectably call a “comparison tolerance” and that, too, I think has become a very very important element of APL.
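A comparison tolerance can be sketched as a relative test: two numbers count as equal when their difference is small compared with the larger magnitude. The Python sketch below assumes a tolerance of 1e-14, an illustrative value only, since defaults have varied between APL systems. It compares 0.1 + 0.2 with 0.3, a case where IEEE 754 double-precision arithmetic visibly departs from high-school arithmetic.

```python
CT = 1e-14  # assumed comparison tolerance; illustrative, not a specific system's default


def tolerant_eq(a: float, b: float, ct: float = CT) -> bool:
    """Treat a and b as equal if they differ by at most ct times the larger magnitude."""
    return abs(a - b) <= ct * max(abs(a), abs(b))


y = 0.1 + 0.2
print(y == 0.3)                  # False: exact floating-point comparison
print(tolerant_eq(y, 0.3))       # True: equal within the tolerance
print(tolerant_eq(1.0, 1.0001))  # False: a genuine difference
```

Because the test is relative, zero is tolerantly equal only to zero. Python's standard `math.isclose(a, b, rel_tol=CT)` applies the same relative rule when its `abs_tol` is left at 0.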

Finally, the idea of what we called the workspace. Most languages of the time, and perhaps still, tend to give you a library of functions. There are your tools. And your data is someplace else. So we thought it more convenient to work in terms of what we called eventually a “workspace”, which was, if you like, a place where you have your tools and your materials. And this, too, has proved a very nice concept which has survived in all the later implementations.


I guess it was probably about 1968, when we got this system running and pretty well cleaned up, that we began to think more seriously about some very serious limitations of that system, effectively the absence of files or more generally, the absence of communication with the outside world, with outside resources. And what was chosen was the notion of shared variables [Frame 6]. In other words, to get our communication with the outside world in effect by allowing an APL user to offer to share a variable with some other processor. It might be a processor written in APL. It might be a file subsystem. It might be anything. And this in fact was an idea which, as I said, was implicit in some of our earlier work, but I think it was primarily Dick [R.H.] Lathwell who pressed this idea and saw it as a general solution to a lot of these problems, and that too has become, I think, a very important aspect in present APL systems. Certainly as a practical matter it’s been very important.

And it also allowed us to systematize or rationalize some ideas in the language. We had, in the case of some functions like indexing, the idea of index origin. You could set the system to use 0-origin indexing or 1-origin indexing. And this essentially allowed us to rationalize that by recognizing that here was a variable that we really wanted to communicate to the APL system itself. This is what we call a system variable, a variable that is in effect shared with the interpreter itself, so that we now handle not only index origin but a host of other things like this, printing width, printing precision and so on, by simply treating them as variables that are shared with the system.
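The idea that a setting such as index origin is simply a variable the interpreter consults can be sketched as follows. This is a hypothetical Python model, not APL's actual mechanism. The class `Session`, its methods, and the initial values of `IO` and `PP` are assumptions made for illustration.

```python
class Session:
    """Toy interpreter session whose settings are ordinary, user-assignable variables."""

    def __init__(self):
        # Hypothetical system variables, named after APL's index origin (⎕IO)
        # and printing precision (⎕PP); the initial values are assumptions.
        self.sysvars = {"IO": 1, "PP": 10}

    def assign(self, name, value):
        # The interpreter validates a system variable when the user sets it.
        if name == "IO" and value not in (0, 1):
            raise ValueError("index origin must be 0 or 1")
        self.sysvars[name] = value

    def iota(self, n):
        # The first n indices, counted from the current index origin.
        origin = self.sysvars["IO"]
        return list(range(origin, origin + n))

    def index(self, vector, i):
        # Translate an origin-relative index into a Python (0-origin) position.
        pos = i - self.sysvars["IO"]
        if not 0 <= pos < len(vector):
            raise IndexError("index error")
        return vector[pos]

    def show(self, x):
        # Format a number to the current printing precision (significant digits).
        return format(x, f".{self.sysvars['PP']}g")


s = Session()
print(s.iota(3), s.index("ABC", 1))  # [1, 2, 3] A
s.assign("IO", 0)
print(s.iota(3), s.index("ABC", 1))  # [0, 1, 2] B
s.assign("PP", 4)
print(s.show(2 / 3))                 # 0.6667
```

Every primitive that depends on a setting reads it from one shared table. Changing a setting is then just an assignment, with no special command needed.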

System functions are related to that in the following way. There are certain variables to which you might like to give a programmer access, such as some information from the symbol table; but even for APL programmers it would be dangerous to give them complete access to the symbol table and allow them to respecify it, for example. And so we gave a limited form of access to variables like that through what we called “system functions”.


In talking about the development of all programming languages, not just APL, I think we tend to make a mistake in thinking of them as starting, ab initio, in the 1940s when machines first came along. And I think that that is a very serious mistake because, in fact, the essential notions are notions that were worked out in mathematics, and in related disciplines, over centuries [Frame 7]. I mean, just the idea of a formula, the idea of a procedure — although in mathematics we never had a really nice formal way of specifying this — it’s clear that this is what we do. In fact, in a lecture, you sort of give the kernel of the thing and then you wave your arms and that replaces the looping and what-not. But the idea of algorithms and so on is very central to mathematics. And so I think it’s a mistake to think of the development as starting from the computer. I think it’s worthwhile trying to examine this in the following sense: if we look at mathematical notation, say, at the time of the development of the computer we had some very important notations. If you don’t think they’re important, I would recommend strongly that you take a look at some source like [Florian] Cajori’s History of Mathematical Notations; it’s just incredible the time and effort that went into introducing what we now just take as obvious. For example, the idea of a variable name: a very painful thing. At the outset you wrote calculations in terms of specific quantities. Then it was recognized that you really wanted to make some sort of a universal statement and so they actually wrote out things like “universalis” which, because we are all lazy, gradually got abbreviated, and I suppose by the time it reached u, somebody said, “Aha! We’ve got a lot of letters like that. And now we can talk about several universals,” and so on.

But the serious thing is that this is a very important notion. The idea of identifying certain important functions like addition, multiplication, and assigning symbols to them. These symbols are not as old as you think. Take the times sign for example. It's only about 200 years old; it's too young to die. That's why we resist things like the asterisk and so on, which happen to be on tabulating machines because of the need for check protection.

There were other things like grouping. It took a long time to arrive at what now seems the obviously beautiful way of showing grouping in an expression: parentheses. They were almost superseded by what is called the “vinculum”, where you drew a line over the top of the subexpression. The parentheses are linearization again, and I think the main reason they won out was that where we would now just write a series of nested parentheses, you got lines going up higher and higher on the page, and that was awkward for printing.

Then there is the question of representation. Certainly mathematics had long since reached the stage where you don't think about how the numbers are represented. When you think about multiplication, division, and so on, you don't think, “Ah, that's got to be carried out in roman numerals, or in arabic, or in base 2” or anything like that. You simply think of it as multiplication. Representation is subordinated.

And of course, the idea of arrays has been in mathematical notation and other applications for something like 150 years. They still haven’t really been recognized, but they’ve been there.

Now, what happened when the first computer came along? For perfectly good practical reasons every one of these notions disappeared. You couldn't even say “A + B”. You had to say, “Load A into accumulator; load B into so-and-so”; no, you couldn't even say that. You had to say, “Load register 800 into the so-and-so and so on.” So all of these things disappeared, for perfectly practical reasons. The sad thing is that 30 years later we're still following that same aberration, and this is where I think it's interesting to look at APL [Frame 7]. There are many more similarities between APL and mathematical notation than you might think; if you look in the paper, some of them are pointed out. One of these is the order of execution. Let me just take one second for that. People in programming seem to think that APL has a strange order of execution. The order of execution in APL is very simply this: every function takes as its right-hand argument the value of the entire expression to the right of it. This came straight from expressions in mathematics like F of G of H of X. And all we did in APL was to recognize that this was a really nice scheme, and let it extend to functions of two arguments as well. This has some very nice consequences. But it came from mathematics.
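The rule can be sketched as a tiny evaluator for a flat expression of numbers and dyadic functions. The sketch assumes the expression is already split into tokens and contains no parentheses or monadic functions; both are simplifications for illustration, and the names `FUNCS` and `eval_right_to_left` are hypothetical. Reading from the right, each function is applied to its left operand and to the value accumulated so far.

```python
import operator

# Dyadic functions for this toy evaluator, keyed by APL symbol.
FUNCS = {"+": operator.add, "-": operator.sub, "×": operator.mul, "÷": operator.truediv}


def eval_right_to_left(tokens):
    """Evaluate [number, func, number, func, ..., number] with no precedence.

    Each function's right argument is the value of the whole expression to
    its right, so the list is consumed from the right end.
    """
    value = tokens[-1]
    for i in range(len(tokens) - 2, 0, -2):
        func, left = FUNCS[tokens[i]], tokens[i - 1]
        value = func(left, value)
    return value


print(eval_right_to_left([2, "×", 3, "+", 4]))   # 14, i.e. 2 × (3 + 4)
print(eval_right_to_left([10, "-", 4, "-", 3]))  # 9, i.e. 10 - (4 - 3)
```

Conventional precedence would give 10 for the first expression. Under the right-to-left rule there is no precedence table to memorize, and a chain of monadic functions reads exactly like F of G of H of X.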

Now, let me say one last word of a more general character about the influences.

In our earlier paper on the design of APL [Reference 1 of Paper], Falkoff and I tried to figure out (in retrospect, obviously) what really were the main principles that had guided us. And we concluded that there were really just two: one was simplicity, and the other was practicality. Now, I expect that every one of the other participants in the APL work could cite some sort of important influence that led him to this kind of bias, but I would like to mention where I’m sure that mine came from. I’m sure it came from studying under Professor [Howard] Aiken [of Harvard]. This is a name that I think occurs much less prominently than it should in the history of computing. And Aiken was raised as an engineer. Although he had a very theoretical turn of mind, he always spoke of himself as an engineer. And an engineer he characterized as being a man who could build for one buck what any damn fool could do for ten. He also emphasized simplicity, and one of the most striking things that I think all of his graduate students remember was, when faced with the usual sort of enthusiastic presentation of something, he would say, “I’m a simple man. I don’t understand that. Could you try to make it clear?” And this is a most effective way of dealing with graduate students. I know it had a decided impact on me.

Finally, he had some more general pieces of advice, which I found always useful. Let me just cite one of them. I don't know what the occasion was, but one of his graduate students was becoming a little paranoid that somebody might steal one of the fantastic ideas from his thesis, and so he was stamping every copy “Confidential”. Aiken just shook his head and said, “Don't worry about people stealing your ideas. If they're any good, you'll have to ram them down their throats!”

Transcript of Discussant’s Remarks

JAN Lee: Thank you, Ken. Our discussant for the APL paper is one of those gentlemen at whom all faculty members look with some awe. This is the student who made good! The one who was your assistant at one time, and then eventually you discover that he becomes the full professor! So I’d like to introduce Fred Brooks. Fred is the Professor and Chairman of the Computer Science Department at the University of North Carolina. He is probably best known as the father of System/360, having served as the Project Manager for its development and later as the Manager of the Operating System/360 Software Project. You can’t blame him for that, though. Now, in the later years, Fred was responsible for what has become known as the one-language concept, which we alluded to in the PL/I session earlier on this morning, and he is going to comment on that and on APL.

Frederick Brooks: By way of introduction, I'd like to say a word about the conference that I think should appropriately come from a part of the APL community, and that is: the thing I like best about this conference is its character set! I cannot recall a time since the 1976 Los Alamos machine history conference [International Research Conference on the History of Computing] when such a set of characters has been gathered under one roof.

I think one of the themes of the conference, and a very illuminating one, is to study the processes by which languages are not only designed, but by which their adoption is accomplished. If you look back over the papers, you see a sharp dichotomy between what one can call the “committee” languages, whose papers concern themselves almost completely with process, and the “author” languages, whose papers concern themselves almost completely with technical issues. I think there's a lesson there.

I myself have not ever been a major participant in the design of a high-level language, but I have been midwife at two: APL and PL/I. And I would like to remark that there is a class of programming languages which have not been discussed here at all, and that’s appropriate. But these are the languages which are intended for direct interpretation by machine hardware. They’re called “machine” languages, and Gerry Blaauw and I are working on an approach to computer architecture that treats that as a problem in programming language design where the utterances are costly.

Today I’d like to remark on two things that I can perhaps illuminate about the APL history. One is what Ken calls the “academic” period at Harvard; and the other is the third, the implementation period at IBM.